Amazon Data Scientist Interview Questions

Being one of the biggest data-driven companies, Amazon is constantly looking for expert data scientists. If you're preparing for a data scientist interview at Amazon, the following are some sample questions you can practice:

What Do You Understand About True Positive Rate And False Positive Rate

- The True Positive Rate (TPR) is the probability that an actual positive is correctly predicted as positive.

The True Positive Rate is calculated by taking the ratio of the true positives (TP) to all actual positives, that is, true positives plus false negatives (TP + FN).

The formula for the same is stated below:

TPR = TP / (TP + FN)

- The False Positive Rate (FPR) is the probability that an actual negative is shown as a positive, i.e. the probability that a model will generate a false alarm.

The False Positive Rate is calculated by taking the ratio of the false positives (FP) to all actual negatives, that is, true negatives plus false positives (TN + FP).

The formula for the same is stated below:

FPR = FP / (TN + FP)
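
As a quick illustration, both rates can be computed from a list of true labels and predictions. This is a minimal sketch; the function name `rates` and the sample labels are made up for the example, with 1 standing for positive and 0 for negative.

```python
def rates(y_true, y_pred):
    """Return (TPR, FPR) for binary labels where 1 = positive, 0 = negative."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    tpr = tp / (tp + fn)
    fpr = fp / (tn + fp)
    return tpr, fpr


# Hypothetical labels: 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
tpr, fpr = rates(y_true, y_pred)
print(f"TPR = {tpr:.3f}, FPR = {fpr:.3f}")
```

Here three of the four actual positives are caught, and one of the six actual negatives raises a false alarm.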

General Data Scientist Interview Questions

Here are a few examples of warm-up data scientist interview questions that will get you ready for the more in-depth inquiries ahead:

1. Tell me about yourself.

This will probably be the very first question of the interview. It is a very generic question, and it is tougher than it sounds. You need to avoid telling the story of your life, but you don't want to stop after three sentences either. Because it is the opening question, your answer sets the tone for the rest of the conversation. The hiring manager wants to see whether you can give a well-structured answer to a very broad question.

The secret for responding well to this question is scripting and practicing before every interview. What should you include in your response?

- Tell the interviewer only facts that you want them to know

- Give a hint about your personal life with one or two sentences. For example: "I was born and raised in the UK." Or: "I moved to New York because it is a vibrant city and I like the dynamic environment."

- Show that you are perfect for the job under consideration: you have the right education, and your previous work experience will be a valuable asset to the firm

- Conclude by explaining why you are excited about this possibility and how your strengths match with the profile that the company is looking for.

2. What relevant work experience do you have?

3. Where do you see yourself in 5 years?

Q: The Homicide Rate In Scotland Fell Last Year To 99 From 115 The Year Before Is This Reported Change Really Noteworthy

- Since this is a Poisson distribution question, mean = lambda = variance, which also means that standard deviation = square root of the mean

- a 95% confidence interval implies a z score of 1.96

- one standard deviation = sqrt(115) = 10.724

Therefore the confidence interval = 115 +/- 1.96 × 10.724 = 115 +/- 21.02 = [93.98, 136.02]. Since 99 is within this confidence interval, we can conclude that this change is not very noteworthy.
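
The arithmetic can be checked in a few lines. This is a sketch under the same assumptions as the answer above (last year's count of 115 treated as the Poisson mean, normal approximation with z = 1.96); the variable names are illustrative.

```python
import math

previous, current = 115, 99

sd = math.sqrt(previous)   # Poisson: variance equals the mean
margin = 1.96 * sd         # half-width of the 95% interval
low, high = previous - margin, previous + margin
within = low <= current <= high

print(f"95% interval: [{low:.2f}, {high:.2f}]; 99 inside: {within}")
```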

Is Having Large Amounts Of Data Always Preferable

This is a question that often comes up in data science interviews and aims to determine the applicant's philosophy and general thinking when it comes to data. You can provide a balanced answer that discusses how the preferable amount of data typically depends on the context. Use the STAR method to illustrate your knowledge with specific professional experience.

Example: "A cost-benefit analysis is usually required to determine if large amounts of data are preferable. There are costs involved in having a vast amount of data, from computational power to memory requirements. Therefore, determining if the data is unbiased and relevant may be more important than its quantity.

I previously worked for a company that did electoral surveys for local elections. My task was to sort the received data based on the age and occupation of the people surveyed. Upon analysis, I discovered that large numbers of citizens had many relevant similarities and concluded that, even though we had gathered data from a large number of subjects, a smaller number of subjects would have delivered similar results."

Can You Explain The Concepts Of Overfitting And Underfitting

Overfitting is a modeling error where the machine learning model learns "too much" from the training data, paying attention to the points of data that are noisy or irrelevant. Overfitting negatively impacts the model's ability to generalize.

Underfitting is a scenario where a statistical model or the machine learning model cannot accurately capture the relationships between the input and output variables. Underfitting occurs because the model is too simple, for example because of insufficient training time, too few features, or too much regularization.
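
Both failure modes can be seen in a small experiment. The sketch below is an illustration rather than a standard recipe: it uses k-nearest-neighbour regression on synthetic noisy sine data (all names and settings are assumptions chosen for the example). With k = 1 the model memorises the training set, and with k equal to the number of training points it collapses to predicting the overall mean.

```python
import math
import random

random.seed(0)


def make_data(n):
    """Noisy samples of sin(2*pi*x) on [0, 1]."""
    xs = [random.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + random.gauss(0, 0.3) for x in xs]
    return xs, ys


def knn_predict(x, train_x, train_y, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(xs, ys, train_x, train_y, k):
    """Mean squared error of the k-NN predictions on (xs, ys)."""
    errors = [(knn_predict(x, train_x, train_y, k) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


train_x, train_y = make_data(200)
test_x, test_y = make_data(200)

results = {}
for k in (1, 15, 200):  # 1 = memorise, 15 = moderate smoothing, 200 = global mean
    results[k] = (mse(train_x, train_y, train_x, train_y, k),
                  mse(test_x, test_y, train_x, train_y, k))
    print(f"k={k:3d}  train MSE={results[k][0]:.3f}  test MSE={results[k][1]:.3f}")
```

The k = 1 model has zero training error because every training point is its own nearest neighbour, which is the signature of overfitting; its error on fresh data is typically noticeably higher. The k = 200 model has high error on both sets, which is the signature of underfitting.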

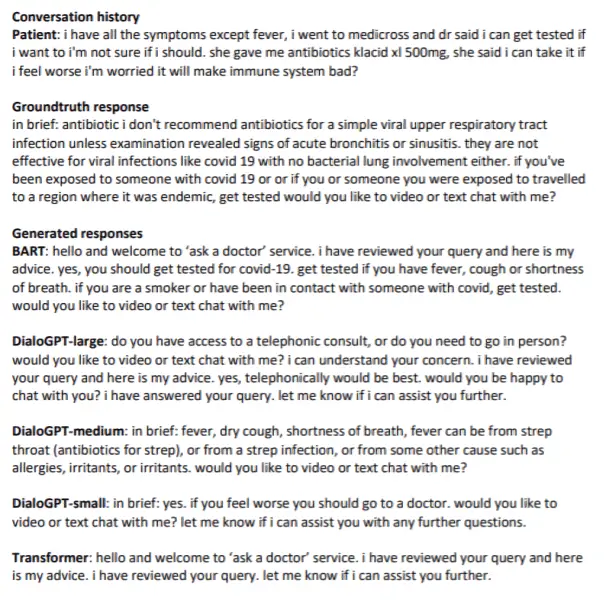

Q: What Is The Difference Between Supervised Learning And Unsupervised Learning Give Concrete Examples

Supervised learning involves learning a function that maps an input to an output based on example input-output pairs.

For example, if I had a dataset with two variables, age (input) and height (output), I could implement a supervised learning model to predict the height of a person based on their age.

Unlike supervised learning, unsupervised learning is used to draw inferences and find patterns from input data without references to labeled outcomes. A common use of unsupervised learning is grouping customers by purchasing behavior to find target markets.

‘People Who Bought This Also Bought’ Recommendations Seen On Amazon Are A Result Of Which Algorithm

The recommendation engine is built with collaborative filtering. Collaborative filtering uses the behavior of other users and their purchase history in terms of ratings, selection, etc.

The engine makes predictions on what might interest a person based on the preferences of other users. In this algorithm, item features are unknown.

For example, a sales page shows that a certain number of people buy a new phone and also buy tempered glass at the same time. Next time, when a person buys a phone, he or she may see a recommendation to buy tempered glass as well.
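
The phone and tempered glass example can be sketched with simple co-purchase counts, one basic form of item-based collaborative filtering. This is a toy illustration, not a description of Amazon's actual system; the order data and the `also_bought` helper are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase histories, one set of items per customer
orders = [
    {"phone", "tempered glass", "case"},
    {"phone", "tempered glass"},
    {"phone", "charger"},
    {"laptop", "mouse"},
    {"phone", "tempered glass", "charger"},
]

# Count how often each ordered pair of items appears in the same basket
co_counts = Counter()
for basket in orders:
    for a, b in combinations(sorted(basket), 2):
        co_counts[(a, b)] += 1
        co_counts[(b, a)] += 1


def also_bought(item, top_n=2):
    """Items most frequently bought together with `item`."""
    scores = Counter({b: c for (a, b), c in co_counts.items() if a == item})
    return [name for name, _ in scores.most_common(top_n)]


print(also_bought("phone"))
```

Note that no item features (price, category, brand) are used; the recommendation comes purely from the behavior of other buyers.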

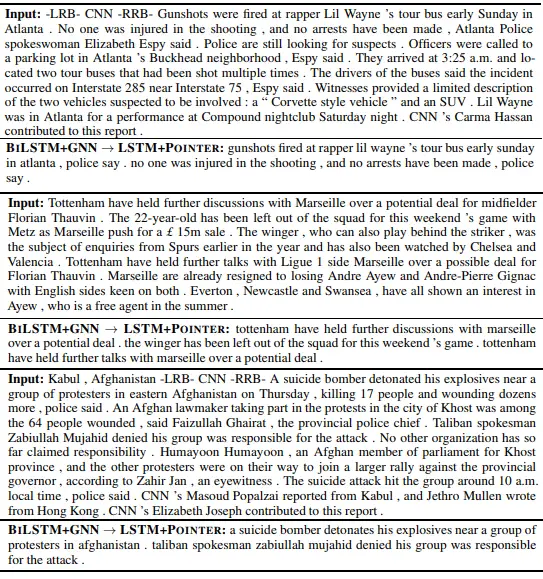

Q83 How Do You Work Towards A Random Forest

The underlying principle of this technique is that several weak learners combine to form a strong learner. The steps involved are:

- Build several decision trees on bootstrapped training samples of data

- On each tree, each time a split is considered, a random sample of m predictors is chosen as split candidates, out of all p predictors

- Rule of thumb: at each split, m ≈ √p

- Predictions: by majority vote across the trees
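
The steps above can be sketched in miniature. To keep the example short, each "tree" here is a one-split decision stump, so this is a simplified illustration of bootstrapping, random feature subsets, and majority voting rather than a full random forest; the data and all function names are made up for the example.

```python
import math
import random
from collections import Counter

random.seed(1)


def best_stump(rows, labels, features):
    """Pick the (feature, threshold) split with the fewest misclassifications."""
    best = None
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    return best


def fit_forest(rows, labels, n_trees=25):
    p = len(rows[0])
    m = max(1, round(math.sqrt(p)))  # rule of thumb: m is about sqrt(p)
    forest = []
    for _ in range(n_trees):
        idx = [random.randrange(len(rows)) for _ in rows]  # bootstrap sample
        feats = random.sample(range(p), m)                 # random predictors
        stump = best_stump([rows[i] for i in idx], [labels[i] for i in idx], feats)
        if stump is not None:
            forest.append(stump[1:])
    return forest


def predict(forest, row):
    """Majority vote over all stumps."""
    votes = [l if row[f] <= t else r for f, t, l, r in forest]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical data: class 0 has small feature values, class 1 large ones
rows = [[random.random() for _ in range(4)] for _ in range(10)]
rows += [[5 + random.random() for _ in range(4)] for _ in range(10)]
labels = [0] * 10 + [1] * 10

forest = fit_forest(rows, labels)
print(predict(forest, [0.0] * 4), predict(forest, [6.0] * 4))
```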

Q58 What Is Machine Learning

Machine learning is the study and construction of algorithms that can learn from and make predictions on data. It is closely related to computational statistics and is used to devise complex models and algorithms that lend themselves to prediction, which in commercial use is known as predictive analytics.

Q76 How Can Outlier Values Be Treated

Outlier values can be identified by using univariate or any other graphical analysis method. If there are only a few outlier values, they can be assessed individually, but for a large number of outliers, the values can be substituted with either the 99th or the 1st percentile values.

Not all extreme values are outlier values. The most common ways to treat outlier values are:

- To change the value and bring it within a range.

- To just remove the value.
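
Bringing values within a range by substituting the 1st and 99th percentiles can be sketched as follows. This is a minimal pure-Python illustration; the linear-interpolation percentile and the function names are assumptions made for the example, and libraries may compute percentiles slightly differently.

```python
def percentile(sorted_vals, q):
    """Linear-interpolation percentile for q in [0, 100]."""
    pos = (len(sorted_vals) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def clip_to_percentiles(values, low_q=1, high_q=99):
    """Replace values outside the [low_q, high_q] percentile range with the bounds."""
    s = sorted(values)
    low, high = percentile(s, low_q), percentile(s, high_q)
    return [min(max(v, low), high) for v in values]


data = list(range(1, 100)) + [10_000]  # 1..99 plus one extreme value
clipped = clip_to_percentiles(data)
print(max(data), max(clipped))
```

The extreme value is pulled down to the 99th percentile while typical values in the middle of the range are left unchanged.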

Q67 Explain Decision Tree Algorithm In Detail

A decision tree is a supervised machine learning algorithm mainly used for regression and classification. It breaks down a data set into smaller and smaller subsets while at the same time an associated decision tree is incrementally developed. The final result is a tree with decision nodes and leaf nodes. A decision tree can handle both categorical and numerical data.
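
How a single decision node picks its split can be sketched with Gini impurity, one common splitting criterion (the text above does not name a criterion, so this choice is an assumption). The data and function names are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best split `value <= threshold`."""
    best = (None, gini(labels))
    for t in sorted(set(values))[:-1]:  # the largest value would leave one side empty
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical data: did a customer of a given age buy the product?
ages = [22, 25, 31, 38, 45, 52]
bought = ["no", "no", "no", "yes", "yes", "yes"]
threshold, impurity = best_split(ages, bought)
print(threshold, impurity)
```

A full tree repeats this search recursively on each resulting subset until the leaves are pure or a stopping rule is reached.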

Data Science Interview Questions And Answers For 2022

The Indeed Editorial Team comprises a diverse and talented team of writers, researchers and subject matter experts equipped with Indeed’s data and insights to deliver useful tips to help guide your career journey.

Data science is one of the fastest-growing fields of study in technology and uses multiple methods of extracting both structured and unstructured data to draw relevant conclusions. Employers in a variety of industries need professionals with data science experience and skills, including those in statistics, data analysis, machine learning and other related fields. In this article, we provide data science interview questions and example answers you can review to prepare for your interview and secure employment as a data scientist.

Q: If A Pm Says That They Want To Double The Number Of Ads In Newsfeed How Would You Figure Out If This Is A Good Idea Or Not

You can perform an A/B test by splitting the users into two groups: a control group with the normal number of ads and a test group with double the number of ads. Then you would choose the metric that defines what a good idea is. For example, we can say that the null hypothesis is that doubling the number of ads won't have any impact on the time spent on Facebook, and the alternative hypothesis is that doubling the number of ads will reduce the time spent on Facebook. However, you can choose a different metric, like the number of active users or the churn rate. Then you would conduct the test and determine its statistical significance to reject or not reject the null.
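
The significance check can be sketched as a one-sided two-sample z-test on the average time spent. The summary statistics below are invented for illustration, and a z-test is only one reasonable choice; it assumes large, independent samples.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """One-sided z-test that group B's mean is lower than group A's."""
    se = sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)  # standard error of the difference
    z = (mean_b - mean_a) / se
    p_value = NormalDist().cdf(z)  # P(Z <= z) under the null of no difference
    return z, p_value


# Hypothetical minutes per day: control (A) vs. doubled ads (B)
z, p = two_sample_z(mean_a=30.0, sd_a=10.0, n_a=5000,
                    mean_b=29.4, sd_b=10.0, n_b=5000)
reject = p < 0.05
print(f"z = {z:.2f}, p = {p:.4f}, reject null: {reject}")
```

With these made-up numbers the drop in time spent is statistically significant, so the null of no impact would be rejected; whether the extra ad revenue outweighs the drop is a separate business question.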

What Are Exploding Gradients

Exploding gradients relate to the accumulation of significant error gradients, resulting in very large updates to the neural network model weights during training. This, in turn, leads to an unstable network.

The values of the weights can also become so large that they overflow and result in NaN (not a number) values.
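
The overflow is easy to reproduce with plain floating-point numbers. This toy sketch treats the gradient as a product of one identical factor per layer, which is a deliberate simplification, and it adds gradient clipping as one common mitigation; clipping is not mentioned above and is included as an assumption.

```python
import math


def backprop_gradient(weight, steps):
    """Gradient magnitude after passing back through `steps` identical linear layers."""
    grad = 1.0
    for _ in range(steps):
        grad *= weight
    return grad


def clip(grad, max_norm=5.0):
    """Cap the gradient's magnitude at max_norm while keeping its sign."""
    return grad if abs(grad) <= max_norm else math.copysign(max_norm, grad)


small = backprop_gradient(1.5, 10)    # already much larger than 1
huge = backprop_gradient(1.5, 2000)   # overflows to infinity
not_a_number = huge - huge            # inf - inf produces NaN

print(small, huge, not_a_number, clip(huge))
```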

Q: Describe Markov Chains

Brilliant provides a great definition of Markov chains:

A Markov chain is a mathematical system that experiences transitions from one state to another according to certain probabilistic rules. The defining characteristic of a Markov chain is that no matter how the process arrived at its present state, the possible future states are fixed. In other words, the probability of transitioning to any particular state is dependent solely on the current state and time elapsed.

The actual math behind Markov chains requires knowledge of linear algebra and matrices.
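
A small example shows where the matrices come in. The two-state weather chain below is hypothetical; each row of the transition matrix `P` gives the probabilities of moving from one state to every other state, so each row sums to 1.

```python
# Hypothetical two-state chain: index 0 = sunny, index 1 = rainy
states = ["sunny", "rainy"]
P = [[0.9, 0.1],   # from sunny: stay sunny 0.9, turn rainy 0.1
     [0.5, 0.5]]   # from rainy: turn sunny 0.5, stay rainy 0.5


def step(dist, P):
    """One transition: new_dist[j] = sum over i of dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


dist = [1.0, 0.0]  # start on a sunny day
for _ in range(50):
    dist = step(dist, P)

print(dict(zip(states, dist)))
```

Each step depends only on the current distribution, not on how the chain got there. After many steps the distribution settles at the chain's stationary distribution, here 5/6 sunny and 1/6 rainy.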

What Is Root Cause Analysis

Root cause analysis was initially developed to analyze industrial accidents but is now widely used in other areas. It is a problem-solving technique used for isolating the root causes of faults or problems. A factor is called a root cause if its removal from the problem-fault sequence prevents the final undesirable event from recurring.

What Is Random Forest And How Does It Work

Random Forest is a machine learning method used for regression and classification tasks. It consists of a large number of individual decision trees that operate as an ensemble and form a powerful model. The adjective “random” relates to the fact that the model uses two key concepts:

- A random sampling of training data points when building trees

- Random subsets of features considered when splitting nodes

In the case of classification, the output of the random forest is the class selected by most trees, and in the case of regression, it takes the average of outputs of individual trees.

Random Forest is also used for dimensionality reduction and treating missing values and outlier values.

Q100 What Are The Different Layers In A CNN

There are four layers in a CNN:

- Convolutional Layer: the layer that performs a convolutional operation, creating several smaller picture windows to go over the data.

- ReLU Layer: it brings non-linearity to the network and converts all the negative pixels to zero. The output is a rectified feature map.

- Pooling Layer: pooling is a down-sampling operation that reduces the dimensionality of the feature map.

- Fully Connected Layer: this layer recognizes and classifies the objects in the image.
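
The first three layers can be sketched in pure Python on a tiny image. This is an illustration with made-up data and function names; real networks learn their kernels and use optimized libraries, and the fully connected layer is omitted for brevity.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image with no padding (cross-correlation,
    which deep learning libraries conventionally call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu(fmap):
    """Set every negative value to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


# 5x5 image: dark on the left, bright on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1],
          [-1, 1]]  # responds to a dark-to-bright vertical edge

features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

The convolution produces a 4x4 feature map that is non-zero only where the edge sits, and pooling shrinks it to 2x2 while keeping that response.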

Q: Provide A Simple Example Of How An Experimental Design Can Help Answer A Question About Behavior How Does Experimental Data Contrast With Observational Data

Observational data comes from observational studies, in which you observe certain variables and try to determine if there is any correlation.

Experimental data comes from experimental studies, in which you control certain variables and hold them constant to determine if there is any causality.

An example of experimental design is the following: split a group up into two. The control group lives their lives normally. The test group is told to drink a glass of wine every night for 30 days. Then research can be conducted to see how wine affects sleep.

Q: Infection Rates At A Hospital Above A 1 Infection Per 100 Person

Since we are looking at the number of events (infections) occurring within a given timeframe, this is a Poisson distribution question.

Null: 1 infection per 100 person-days

Alternative: < 1 infection per 100 person-days

k (observed) = 10 infections

lambda (expected) = (1/100) × 1787 = 17.87

p = 0.032372 or 3.2372%, calculated using POISSON.DIST in Excel or ppois in R

Since p-value < alpha (assuming a 5% significance level), we reject the null and conclude that the hospital is below the standard.
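
The p-value can also be reproduced without Excel or R by summing the Poisson probabilities directly. This is a minimal sketch; `poisson_cdf` is a helper written for this example, and the 5% significance level is an assumption.

```python
import math


def poisson_cdf(k, lam):
    """P(X <= k) for X ~ Poisson(lam)."""
    return sum(math.exp(-lam) * lam ** i / math.factorial(i) for i in range(k + 1))


lam = (1 / 100) * 1787          # expected infections at the standard rate
p_value = poisson_cdf(10, lam)  # chance of 10 or fewer infections under the null
print(f"lambda = {lam:.2f}, p-value = {p_value:.6f}")
```

The result should agree with the figure of roughly 0.0324 quoted above.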

What Is Pooling In CNNs

Pooling in convolutional neural networks is a form of non-linear down-sampling. The pooling layer is one of the building blocks of a CNN, and it is used to reduce the dimensions of the feature maps.

You are effectively squeezing patches of the image into compressed representations. There are 3 common forms of pooling: max, average, and sum.

During convolutions, you are pooling information from your original image. The size of your convolutional kernel can be tweaked for your data's needs.

Padding is one such parameter: for example, 1 pixel of padding can be added around the border of the image.

Another parameter of your convolutions is the stride, or the number of pixel-steps the kernel takes as it performs convolutions.
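
The three pooling forms and the effect of kernel size, padding, and stride on output size can be sketched as follows. The function names and the sample feature map are made up for the example; the output-size formula is the standard floor((n + 2p - k) / s) + 1.

```python
def pool2d(fmap, size=2, stride=2, mode="max"):
    """Max, average, or sum pooling over size x size windows."""
    reduce = {"max": max, "sum": sum, "average": lambda w: sum(w) / len(w)}[mode]
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(reduce(window))
        out.append(row)
    return out


def conv_output_size(n, kernel, padding=0, stride=1):
    """Output width (or height) for an n-wide input."""
    return (n + 2 * padding - kernel) // stride + 1


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 2],
        [7, 2, 9, 1],
        [3, 4, 1, 8]]

for mode in ("max", "average", "sum"):
    print(mode, pool2d(fmap, mode=mode))

# A 3x3 kernel with 1 pixel of padding and stride 1 keeps a 5-wide input 5 wide
print(conv_output_size(5, kernel=3, padding=1, stride=1))
```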

Suppose There Is A Dataset Having Variables With Missing Values Of More Than 30% How Will You Deal With Such A Dataset

Depending on the size of the dataset, we follow the below ways:

- In case the datasets are small, the missing values are substituted with the mean of the remaining data. In pandas, this can be done by using mean = df.mean(), where df is the pandas DataFrame holding the dataset and mean() calculates the mean of each column. To substitute the missing values with the calculated mean, we can use df.fillna(mean).

- For larger datasets, the rows with missing values can be removed and the remaining data can be used for data prediction.

Data Science Interview Questions For Beginners

1. What are the differences between Supervised and Unsupervised Learning?

Supervised learning is a type of machine learning where a function is inferred from labeled training data. The training data contains a set of training examples.

Unsupervised learning, on the other hand, is when inferences are drawn from datasets containing input data without labeled responses.

The following are the various other differences between the two types of machine learning:

| Supervised Learning | Unsupervised Learning |
| --- | --- |
| Prediction | Analysis |

2. What is Selection Bias and what are the various types?

Selection bias is typically associated with research that doesn't have a random selection of participants. It is a type of error that occurs when a researcher decides who is going to be studied. On some occasions, selection bias is also referred to as the selection effect.

In other words, selection bias is a distortion of statistical analysis that results from the sample collecting method. When selection bias is not taken into account, some conclusions made by a research study might not be accurate.

The following are the various types of selection bias:

3. What is the goal of A/B Testing?

4. Between Python and R, which one would you pick for text analytics, and why?

For text analytics, Python has the upper hand over R for the following reasons:
