How Can I Pass A Data Engineer Interview

You can pass a data engineer interview if you have the skill set and experience the role requires. To prepare, build essential skills such as data modeling, data pipelines, and data analytics; work through resources on data engineer interview questions; and build a solid portfolio of big data projects. Practicing real-world data engineering projects on platforms such as ProjectPro or GitHub will give you hands-on experience.

How To Prepare For Amazon Data Engineer Interview

Amazon's data engineer interview process is demanding, so you must equip yourself with the necessary programming and communication skills.

Before digging into the technical topics covered in an Amazon data engineer interview, reach out to your point of contact to understand which skills and subjects you will most likely discuss.

Generally, to crack an Amazon data engineer interview, you must be fluent in the programming languages used for building data pipelines, developing data warehousing solutions, and performing statistical analysis and modeling.


What Is Schema Evolution

Schema evolution lets a single dataset be stored across several files that have different but compatible schemas. Spark's Parquet data source can automatically detect and merge the schemas of those files; because schema merging is relatively expensive, it is turned off by default and must be enabled with the `mergeSchema` option. Without automatic schema merging, the most common way to deal with schema evolution is to reload historical data, which is time-consuming.
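To make the idea of schema merging concrete, here is a minimal pure-Python sketch. It is a toy model, not Spark's implementation: a schema is assumed to be a dict of column name to type name, the merged schema is the union of all columns, conflicting types are rejected, and rows from older files get `None` for columns they lack. In PySpark itself you would instead read with `spark.read.option("mergeSchema", "true").parquet(path)`.

```python
# Toy model of Parquet-style schema merging (NOT Spark's implementation).
# A schema is a dict mapping column name -> type name.

def merge_schemas(schemas):
    """Union all columns; fail if the same column has conflicting types."""
    merged = {}
    for schema in schemas:
        for column, col_type in schema.items():
            if column in merged and merged[column] != col_type:
                raise TypeError(
                    f"incompatible types for {column!r}: {merged[column]} vs {col_type}"
                )
            merged.setdefault(column, col_type)
    return merged


def read_with_merged_schema(files):
    """files: list of (schema, rows), rows being dicts. Missing columns become None."""
    merged = merge_schemas([schema for schema, _ in files])
    result = []
    for _, rows in files:
        for row in rows:
            result.append({column: row.get(column) for column in merged})
    return merged, result


# Two hypothetical files written at different times: the newer one adds "discount".
old_file = ({"id": "int", "price": "double"}, [{"id": 1, "price": 9.5}])
new_file = (
    {"id": "int", "price": "double", "discount": "double"},
    [{"id": 2, "price": 20.0, "discount": 0.1}],
)

schema, rows = read_with_merged_schema([old_file, new_file])
print(schema)  # {'id': 'int', 'price': 'double', 'discount': 'double'}
print(rows)
```

Old rows are not rewritten; they are simply read with the wider schema, which is what makes merging cheaper than reloading historical data.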


Learn About The Company

Before diving into Amazon data engineer interview preparation, take some time to get to know Amazon as a company: its customer-centricity, its business teams, and what sets it apart. You can draw on this knowledge throughout the interview process.

What Is The Difference Between A Relational Database And A Non-Relational Database

There are two types of databases you are likely to encounter as an Amazon data engineer: relational (SQL) and non-relational (NoSQL). It's important to understand the differences between them and when you would use each one.

A relational database is structured. It organizes data into tables made up of rows and columns, and tables can be related to one another through keys. Relational databases use SQL (Structured Query Language) to query the database and retrieve data.

A non-relational database, usually called a NoSQL database, does not require a fixed table structure. Depending on its type, it stores data as key-value pairs, documents, wide columns, or graphs, and individual records do not need to share the same fields or have predefined relationships to one another. This less structured data can be harder to query across, but it is more flexible to change.

The best database type to use will depend on the job. When the data you are working with is structured, with clear relationships and dependencies, a SQL database is likely the right tool. If the data is not structured in any clear way, and if different pieces of data have no clear relationships, a NoSQL database is a better choice.
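As a rough illustration of the difference, the sketch below stores the same hypothetical customer and order data twice: once in relational tables (using Python's built-in `sqlite3` as a stand-in for a SQL database) and once as self-contained documents (plain dicts standing in for a document-style NoSQL store). The table names, fields, and values are invented for the example.

```python
import sqlite3

# Relational: fixed tables; relationships are expressed through keys and joins.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 15.0), (12, 2, 40.0);
""")
sql_totals = dict(conn.execute("""
    SELECT c.name, SUM(o.total)
    FROM customers AS c JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
""").fetchall())

# Document-style: each record is self-contained and may have its own shape
# (only Ben's document has a "loyalty_tier" field).
documents = [
    {"name": "Ana", "orders": [{"id": 10, "total": 25.0}, {"id": 11, "total": 15.0}]},
    {"name": "Ben", "orders": [{"id": 12, "total": 40.0}], "loyalty_tier": "gold"},
]
doc_totals = {d["name"]: sum(o["total"] for o in d["orders"]) for d in documents}

print(sql_totals == doc_totals)  # True
```

The relational version enforces a schema and lets you join across tables; the document version lets Ben's record grow a new field without changing anything else.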


Amazon Data Engineer Roles And Responsibilities

Data engineers at Amazon tackle complex, large-scale data engineering challenges, with many different streams of data to incorporate. Although the responsibilities vary by vertical, data engineers at Amazon are responsible for:

- Building different types of data warehousing layers based on specific use cases.

- Building scalable data infrastructure and understanding distributed systems concepts from a data storage and compute perspective.

- Utilizing expertise in SQL and having a strong understanding of ETL and data modeling.

- Ensuring the accuracy and availability of data to customers and understanding how technical decisions can impact the business's analytics and reporting.

- Using at least one scripting or programming language proficiently to handle large-volume data processing.

- Designing and implementing analytical data infrastructure.

- Interfacing with other technology teams to extract, transform and load data from a wide variety of data sources.

- Collaborating with various tech teams to implement advanced analytics algorithms.

Can You Work Well Under Pressure

Yes. A data engineer should always be ready to work under pressure, given the many responsibilities and deadlines in this profession. I handled pressure well in my previous roles, and I am confident I will succeed here too. I plan my work so I know what to expect and stay ahead of schedule, which helps me avoid unnecessary pressure. I am also good at multitasking, thanks to my experience in this field, and can take on several roles at once when the need arises.


How Would You Describe Your Communication Style

Amazon behavioral interviews assess culture fit. This question helps the interviewer understand how you communicate, gather information, and collaborate with a team. In your answer, you might draw on Leadership Principles such as Earn Trust, Learn and Be Curious, or Have Backbone; Disagree and Commit.

What Are The Benefits Of Using AWS Identity And Access Management

- AWS Identity and Access Management (IAM) supports fine-grained access management across your AWS infrastructure.

- IAM policies let you control who can access which services and resources, and under what conditions, so you can grant your employees and systems least-privilege permissions. IAM Access Analyzer helps you identify resources shared outside your account and refine permissions toward least privilege.

- IAM also supports federated access, letting you grant users and systems access to resources without creating an IAM user for each of them.
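To show what least privilege looks like in practice, here is a sketch of an identity policy in the standard IAM JSON shape, plus a deliberately simplified evaluator. The bucket name is hypothetical, and the evaluator is an assumption for illustration only: it models just two real IAM rules (access is denied by default, and an explicit Deny overrides any Allow) and ignores conditions, `NotAction`/`NotResource`, resource policies, SCPs, case-insensitive action matching, and everything else the real service evaluates.

```python
from fnmatch import fnmatchcase

# Least-privilege policy: read-only access to one hypothetical bucket,
# with an explicit Deny on its "restricted/" prefix.
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-analytics-bucket",
                "arn:aws:s3:::example-analytics-bucket/*",
            ],
        },
        {
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": "arn:aws:s3:::example-analytics-bucket/restricted/*",
        },
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy, action, resource):
    """Simplified evaluation: default deny, and an explicit Deny beats any Allow."""
    allowed = False
    for stmt in policy["Statement"]:
        matches = any(fnmatchcase(action, a) for a in _as_list(stmt["Action"])) and any(
            fnmatchcase(resource, r) for r in _as_list(stmt["Resource"])
        )
        if not matches:
            continue
        if stmt["Effect"] == "Deny":
            return False  # explicit deny always wins
        allowed = True
    return allowed


bucket = "arn:aws:s3:::example-analytics-bucket"
print(is_allowed(POLICY, "s3:GetObject", bucket + "/reports/q1.csv"))        # True
print(is_allowed(POLICY, "s3:GetObject", bucket + "/restricted/pii.csv"))    # False
print(is_allowed(POLICY, "s3:PutObject", bucket + "/reports/q1.csv"))        # False
```

Anything not explicitly allowed (such as `s3:PutObject` here) is denied, which is the point of granting only the permissions a role actually needs.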


Mention Some Differences Between SUBSTITUTE And REPLACE Functions In Excel

The SUBSTITUTE function in Excel finds matches for a particular text string and replaces them. The REPLACE function replaces text that you identify by its position.

SUBSTITUTE syntax

=SUBSTITUTE(text, old_text, new_text, [instance_num])

Where

text refers to the text in which you want to perform the replacements

old_text refers to the text to find

new_text refers to the replacement text

instance_num (optional) specifies which occurrence of old_text to replace; if you omit it, every occurrence is replaced.

E.g. consider a cell A5 that contains Bond007

=SUBSTITUTE(A5,"0","1",1) gives the result Bond107

=SUBSTITUTE(A5,"0","1",2) gives the result Bond017

=SUBSTITUTE(A5,"0","1") gives the result Bond117

REPLACE syntax

=REPLACE(old_text, start_num, num_chars, new_text)

Where start_num is the starting position of the characters in old_text to be replaced

num_chars is the number of characters to be replaced

new_text is the replacement text

E.g. consider a cell A5 that contains Bond007

=REPLACE(A5,5,1,"99") gives the result Bond9907
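The contrast between matching by text and replacing by position can be sketched in Python. The two functions below are hypothetical helpers that mimic the Excel behavior described above (1-based positions, optional occurrence number); they are not part of any Excel or Python library, and edge cases such as invalid arguments are only partially handled.

```python
def excel_substitute(text, old_text, new_text, instance_num=None):
    """Mimic Excel SUBSTITUTE: replace by matching text.

    With instance_num, only that (1-based) occurrence is replaced;
    without it, every occurrence is replaced.
    """
    if old_text == "":
        return text  # nothing to match
    if instance_num is None:
        return text.replace(old_text, new_text)
    if instance_num < 1:
        raise ValueError("instance_num must be >= 1")
    pos = -len(old_text)
    for _ in range(instance_num):
        pos = text.find(old_text, pos + len(old_text))  # next non-overlapping match
        if pos == -1:
            return text  # fewer occurrences than instance_num: unchanged
    return text[:pos] + new_text + text[pos + len(old_text):]


def excel_replace(old_text, start_num, num_chars, new_text):
    """Mimic Excel REPLACE: replace num_chars characters starting at 1-based start_num."""
    start = start_num - 1
    return old_text[:start] + new_text + old_text[start + num_chars:]


print(excel_substitute("Bond007", "0", "1", 1))  # Bond107
print(excel_substitute("Bond007", "0", "1", 2))  # Bond017
print(excel_substitute("Bond007", "0", "1"))     # Bond117
print(excel_replace("Bond007", 5, 1, "99"))      # Bond9907
```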

What Do You Mean By Collaborative Filtering

Collaborative filtering is a technique used by recommendation engines. In the narrow sense, it automatically predicts a user's tastes by collecting information about the interests or preferences of many other users. It works on the logic that if person 1 and person 2 share an opinion on one issue, person 1 is more likely than a randomly chosen person to share person 2's opinion on a different issue. More generally, collaborative filtering is the process of filtering information using techniques that involve collaboration among multiple data sources and viewpoints.
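A minimal user-based collaborative filtering sketch follows. It assumes a tiny, made-up ratings table, measures how alike two users are with cosine similarity over the items both have rated, and predicts a missing rating as the similarity-weighted average of other users' ratings. Real systems typically mean-center ratings, restrict to the most similar neighbors, and work on far sparser data; this only shows the core idea.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
RATINGS = {
    "alice": {"book_a": 5, "book_b": 4, "book_c": 1},
    "bob": {"book_a": 5, "book_b": 5, "book_d": 4},
    "carol": {"book_a": 1, "book_c": 5, "book_d": 1},
}


def cosine_similarity(r1, r2):
    """Cosine similarity computed over the items both users rated."""
    common = r1.keys() & r2.keys()
    if not common:
        return 0.0
    dot = sum(r1[i] * r2[i] for i in common)
    norm1 = sqrt(sum(r1[i] ** 2 for i in common))
    norm2 = sqrt(sum(r2[i] ** 2 for i in common))
    return dot / (norm1 * norm2)


def predict(ratings, user, item):
    """Similarity-weighted average of other users' ratings for `item`."""
    num = den = 0.0
    for other, their in ratings.items():
        if other == user or item not in their:
            continue
        sim = cosine_similarity(ratings[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else None


# Alice's tastes resemble Bob's more than Carol's, so Bob's rating dominates.
print(round(predict(RATINGS, "alice", "book_d"), 2))  # 3.16
```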



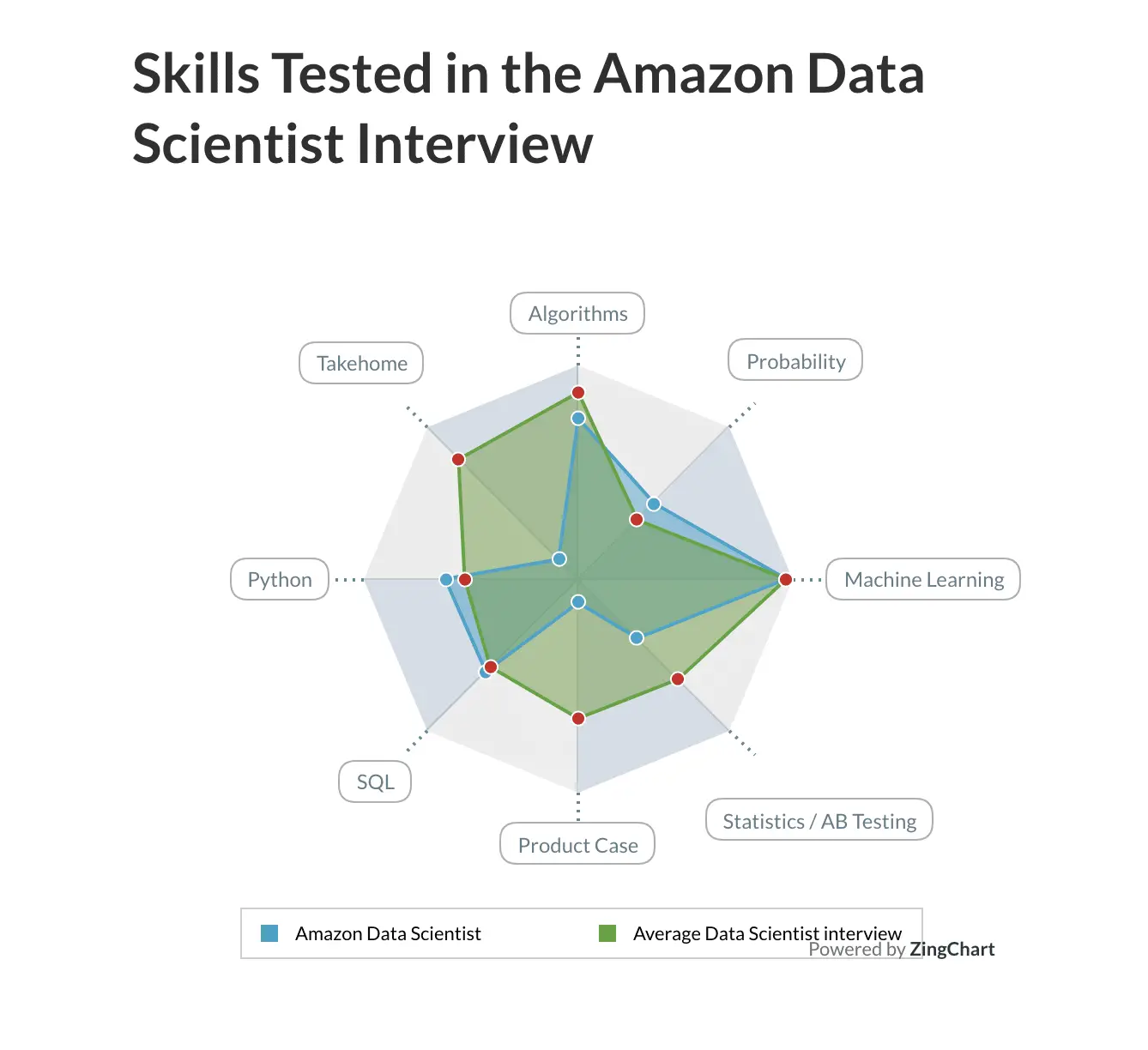

What Are The Skills Needed To Become A Data Engineer At Amazon

Data engineering sits at the intersection of data science and software engineering. In fact, many data engineers start out as software engineers, since data engineering relies heavily on programming.

Therefore, data engineers at Amazon are required to be familiar with:

- Building database systems.

- Maintaining database systems.

- Finding warehousing solutions.

Customer obsession is a part of Amazon’s DNA, making this FAANG company one of the world’s most beloved brands. Naturally, Amazon hires the best technological minds to innovate on behalf of its customers. The company calls for its data engineers to solve complex problems that impact millions of customers, sellers, and products using cutting-edge technology.

As a result, the company is extremely selective about hiring prospective Amazonians and expects data engineers to have a keen interest in creating new products, services, and features from scratch.

Therefore, during the data engineer interview, your skills in the following areas will be assessed:

- Big data technology

- Summary statistics

- SAS and R programming languages

Since Amazon fosters a collaborative workplace, data engineers work closely with chief technology officers, data analysts, and others. Becoming a data engineer at this FAANG firm therefore also requires soft skills such as collaboration, leadership, and communication.


What Are Freeze Panes In MS Excel

Freeze panes are used in MS Excel to lock a particular row or column. The rows or columns you lock will be visible on the screen even when scrolling the sheet horizontally or vertically.

To freeze panes on Excel:

First, select the cell below the rows and to the right of the columns you want to keep visible.

Then select View > Freeze Panes > Freeze Panes.
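The selection rule (everything above and to the left of the selected cell stays frozen) can be captured in a few lines. `frozen_area` below is a hypothetical helper written for this example, not an Excel or library API. If you automate workbooks with openpyxl, its `Worksheet.freeze_panes` attribute takes a cell reference following the same convention (for example, `"B2"` freezes row 1 and column A).

```python
import re


def frozen_area(cell):
    """Return (frozen_rows, frozen_columns) for Freeze Panes with `cell` selected.

    Excel freezes every row above the selected cell and every column to its left.
    """
    match = re.fullmatch(r"([A-Z]+)([1-9][0-9]*)", cell.upper())
    if not match:
        raise ValueError(f"not an A1-style cell reference: {cell!r}")
    letters, row = match.groups()
    col = 0
    for ch in letters:  # column letters -> 1-based index: A=1, Z=26, AA=27
        col = col * 26 + (ord(ch) - ord("A") + 1)
    return int(row) - 1, col - 1


print(frozen_area("B2"))  # (1, 1): header row and first column stay visible
print(frozen_area("A2"))  # (1, 0): only the top row is frozen
```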

Learn About Amazon's Culture

Many candidates skip this step. However, it's important to take the time to learn more about Amazon and whether it's the right company for you.

Amazon is a prestigious company, so it's tempting to apply without doing your research first. In our experience, prestige alone won't make you happy in your day-to-day work; the company culture, the people you work with, and the type of work will.

This step is also important for interview preparation. Amazon is looking for engineers who will fit in with its culture and are passionate about the company. Coming in with an understanding of the company's strategy and your team's work will show that you've done your research.



Talk Your Way Through Your Thought Process

It is natural for candidates to become absorbed when coding or working on a schema design problem. As a result, they go silent, which leaves the interviewer unable to follow their reasoning. Although talking your way through the problem-solving process can feel unnatural, it is vital. So, practice deliberately and develop the habit of communicating while you solve a problem.

Mention A Time That You Failed In This Role And The Lesson You Learned

I have had a few bad experiences in this role that have, fortunately, shaped me into a better data engineer. I failed in my first experience as a team leader. The team members were poorly chosen and coordination was weak, so we missed every deadline and were severely reprimanded by management, although the managers were lenient with me personally because it was my first time leading a team. This experience taught me the importance of building and coordinating a team properly, a lesson I have applied ever since.


List Some Of The Essential Features Of Hadoop

- Hadoop is a user-friendly, open-source framework.

- Hadoop is highly scalable and can handle any kind of data effectively, whether unstructured, semi-structured, or structured.

- Parallel computing ensures efficient data processing in Hadoop.

- Hadoop keeps data available even if one of your machines crashes, because it replicates data across several DataNodes in the cluster (three copies by default).

What Is The Relevance Of Apache Hadoop’s Distributed Cache

Hadoop Distributed Cache is a facility of the Hadoop MapReduce framework that copies read-only files, archives, or JAR files to worker nodes before any of a job's tasks run on those nodes. To minimize network bandwidth, the files are copied only once per job.


What Happens When A Block Scanner Detects A Corrupted Data Block

Block Scanner is a data engineer's friend because it detects corrupted data blocks that might otherwise go unnoticed. When it finds a corrupted block, the DataNode first reports it to the NameNode. The NameNode then creates a new replica from one of the healthy (uncorrupted) replicas. The corrupted replica is not deleted until the number of healthy replicas matches the replication factor; once it does, the corrupted replica is removed.
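The recovery sequence can be modeled as a small simulation. This is a simplified, hypothetical model of the NameNode's behavior, not HDFS code: replicas are plain objects on named DataNodes, new replicas are "copied" from a healthy one onto spare nodes, and corrupt replicas are dropped only once the healthy count reaches the replication factor (3 by default in HDFS).

```python
from dataclasses import dataclass


@dataclass
class Replica:
    datanode: str
    corrupt: bool = False


def handle_corrupt_report(replicas, spare_nodes, replication_factor=3):
    """Toy model of the NameNode reacting to a corrupt-block report.

    1. Copy a healthy replica to spare DataNodes until the healthy count
       reaches the replication factor.
    2. Only then drop the corrupt replicas; otherwise keep them for now.
    """
    healthy = [r for r in replicas if not r.corrupt]
    if not healthy:
        raise RuntimeError("no healthy replica left to copy from")
    spare = list(spare_nodes)
    while len(healthy) < replication_factor and spare:
        healthy.append(Replica(datanode=spare.pop(0)))  # copied from a healthy replica
    if len(healthy) >= replication_factor:
        return healthy  # target met: corrupt replicas can be deleted
    return healthy + [r for r in replicas if r.corrupt]  # target not met: keep them


replicas = [Replica("dn1"), Replica("dn2", corrupt=True), Replica("dn3")]
print([r.datanode for r in handle_corrupt_report(replicas, ["dn4", "dn5"])])
# ['dn1', 'dn3', 'dn4']
```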

What Tools Did You Use In Your Recent Projects

Interviewers want to assess your decision-making abilities as well as your understanding of various tools, so use this question to explain why you chose certain tools over others. Tell the interviewer which tools you used and why, and mention their strengths and drawbacks. Also, use this opportunity to explain how you could use those tools to benefit the company.


Discuss The Different Windowing Options Available In Azure Stream Analytics

Stream Analytics has built-in support for windowing functions, which lets developers build complex stream processing jobs quickly. Five types of temporal windows are available: Tumbling, Hopping, Sliding, Session, and Snapshot.

- Tumbling window functions divide a data stream into distinct time segments and apply a function to each. Tumbling windows repeat, do not overlap, and an event cannot belong to more than one tumbling window.

- Hopping window functions hop forward in time by a fixed period. Think of them as Tumbling windows that can overlap and emit more often than the window size. Events can appear in multiple Hopping window result sets. Setting the hop size equal to the window size makes a Hopping window behave like a Tumbling window.

- Unlike Tumbling or Hopping windows, Sliding windows emit output only when the window's content changes. As a result, each window contains at least one event, and, as with Hopping windows, an event can belong to many Sliding windows.

- Session window functions group events that arrive close together in time and filter out periods with no data. Session windows have three main parameters: timeout, maximum duration, and partitioning key.

- Snapshot windows group events that have the same timestamp. Unlike most window types, which require a dedicated window function, a snapshot window is implemented by adding System.Timestamp to the GROUP BY clause.
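The window-assignment rules above can be sketched in plain Python. These are illustrative functions, not the Stream Analytics query language: timestamps are assumed to be integer seconds starting at 0, windows are treated as half-open intervals `[start, start + size)` (Stream Analytics' own boundary convention may differ), and the session sketch only implements the timeout, ignoring maximum duration and partitioning key.

```python
def tumbling_window(timestamp, size):
    """Start of the single tumbling window containing `timestamp`."""
    return timestamp - timestamp % size


def hopping_windows(timestamp, size, hop):
    """Starts of every hopping window [start, start + size) that contains `timestamp`."""
    start = timestamp - timestamp % hop  # latest window start at or before the event
    starts = []
    while start > timestamp - size:
        if start >= 0:  # assume the stream begins at time 0
            starts.append(start)
        start -= hop
    return sorted(starts)


def session_windows(timestamps, timeout):
    """Group events into sessions that close after `timeout` seconds of silence."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= timeout:
            sessions[-1].append(ts)
        else:
            sessions.append([ts])
    return sessions


print(tumbling_window(12, size=10))              # 10
print(hopping_windows(12, size=10, hop=5))       # [5, 10]: the event is in two windows
print(hopping_windows(12, size=10, hop=10))      # [10]: hop == size behaves like tumbling
print(session_windows([1, 2, 4, 20, 22, 50], timeout=5))  # [[1, 2, 4], [20, 22], [50]]
```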

What Is An Ndarray In Numpy

In NumPy, an array is a table of elements that all have the same type and are indexed by a tuple of non-negative integers. An ndarray is NumPy's n-dimensional array object, which stores a collection of elements of the same data type.
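A short example of these properties, assuming NumPy is installed. It builds a 2-D ndarray from nested lists, inspects its dimensions, indexes it with a tuple of non-negative integers, and shows that mixed input values are converted to one common data type.

```python
import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6]])  # 2-D ndarray built from nested lists
print(type(a).__name__)  # ndarray
print(a.ndim, a.shape)   # 2 (2, 3)
print(a[1, 2])           # 6  (row 1, column 2)

b = np.array([1, 2.5, 3])  # ints and floats are upcast to a single dtype
print(b.dtype)             # float64
```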


What Is The Difference Between A Star Schema And A Snowflake Schema

In data modeling, two main schemas are used to design data warehouses: star schemas and snowflake schemas. It's important to understand both schemas and their different use cases.

Star schemas are the simplest and most common way to design a data warehouse. They consist of a central fact table, which holds the measurements for a business process, connected to dimension tables, which describe the data in ways users can navigate (for example, by product or date). They are generally used for simple queries. They are called star schemas because they look like a star when drawn. A star schema is a special case of a snowflake schema.

A snowflake schema also has a single central fact table connected to multiple dimension tables, but the dimension tables are normalized into further dimension tables, creating a branching, snowflake-shaped web of relationships throughout the database. Snowflake schemas reduce data redundancy and suit large, complex databases, at the cost of more joins per query.
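Here is a small star schema built with Python's built-in `sqlite3` module. The table names, columns, and sales figures are made up for illustration. The query shows the typical star-schema pattern: join the fact table to each dimension once, then aggregate the measures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables describe the "what" and "when" of each fact.
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    -- The central fact table stores measures plus a foreign key to each dimension.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        date_id INTEGER REFERENCES dim_date(date_id),
        quantity INTEGER,
        revenue REAL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Devices'), (2, 'Phone', 'Devices'),
                                   (3, 'Novel', 'Books');
    INSERT INTO dim_date VALUES (202401, 2024, 1), (202402, 2024, 2);
    INSERT INTO fact_sales VALUES (1, 202401, 2, 200.0), (2, 202401, 1, 50.0),
                                  (3, 202402, 4, 60.0);
""")

# Star-schema query: one join per dimension, then aggregate the measures.
rows = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_date AS d ON d.date_id = f.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
print(rows)  # [('Books', 2, 60.0), ('Devices', 1, 250.0)]
```

In a snowflake version of the same model, `category` would move into its own table referenced from `dim_product`, removing the repeated "Devices" text at the cost of one more join in the query.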

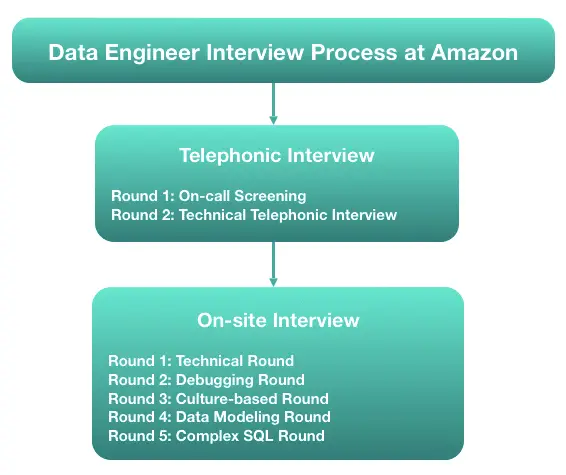

What Interviews To Expect

Let's take a look at each step of the process in more detail.

1.1.1 Resume screen

First, recruiters will review your resume and assess whether your experience matches the open position. This is the most competitive step in the process, as many candidates do not make it past this stage.


If you do have a connection to someone at Amazon, it can be really helpful to get an employee referral to the internal recruiting team, as it may increase your chances of getting into the interview process.

1.1.2 Online assessment

Some candidates will receive an invitation for an online test before moving on to the first-round calls. These are more common for internship and junior positions, but may appear in experienced positions as well.

This assessment focuses on your technical skills, requiring a strong understanding of SQL querying and some coding. There may be a data modeling question as well. You'll likely have a deadline for completing the assessment, but the test itself is not timed.

If you pass the online assessment, you'll move on to your first calls with Amazon interviewers.

1.1.3 First-round interviews

1.1.4 Onsite interviews


What Are The Components Of Hadoop

Hadoop has the following components:

- Hadoop Common: A collection of Hadoop tools and libraries.

- Hadoop HDFS: The Hadoop Distributed File System (HDFS) is Hadoop's storage unit. HDFS stores data in a distributed fashion and has two kinds of nodes: a NameNode and DataNodes. There is one active NameNode per cluster, but there can be many DataNodes.

- Hadoop MapReduce: MapReduce is Hadoop's processing unit. The processing runs in parallel on the worker (slave) nodes, while the master node coordinates the job and receives the results of each task.

- Hadoop YARN: YARN stands for Yet Another Resource Negotiator. It is Hadoop's resource management unit and was introduced as a component in Hadoop 2. It manages cluster resources and schedules work so that no single machine is overloaded.
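To illustrate the MapReduce processing model, here is the classic word-count example written as plain, in-process Python. It mirrors the three phases (map, shuffle/group by key, reduce) but is only a sketch: in a real Hadoop job, mappers run in parallel on the nodes holding HDFS blocks, the framework performs the shuffle across the network, and reducers write their output back to HDFS.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit (word, 1) for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: sum the counts for one word."""
    return key, sum(values)


lines = ["Hadoop stores data", "Hadoop processes data in parallel"]
intermediate = chain.from_iterable(map_phase(line) for line in lines)
result = dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())
print(result["hadoop"], result["data"], result["parallel"])  # 2 2 1
```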