What Is Azure Synapse Runtime

Apache Spark pools in Azure Synapse use runtimes to tie together essential component versions, Azure Synapse optimizations, packages, and connectors with a specific Apache Spark version. These runtimes are upgraded periodically to include new improvements, features, and patches. They have the following advantages:

- Faster session startup times

- Tested compatibility with specific Apache Spark versions

- Access to popular, compatible connectors and open-source packages

How To Connect Azure Data Factory To Github

Azure Data Factory can connect to GitHub through its Git integration. You may already be using Azure DevOps, which also provides a Git repository. Configure the Git repository path in Azure Data Factory so that all the changes you make in the data factory are automatically synced with the GitHub repository.

What Purpose Does The Adf Service Serve

The main function of ADF is to coordinate data copying among numerous relational and non-relational data sources that are hosted on-premises, in data centers, or in the cloud. Additionally, you can use the ADF service to transform the ingested data to meet business needs. In the majority of Big Data solutions, the ADF service is used as an ETL or ELT tool for loading data.


What Are The Differences Between The Azure Storage Queue And The Azure Service Bus Queue

The main differences between the Azure Storage Queue and the Azure Service Bus Queue are given below:

| Azure Storage Queue | Azure Service Bus Queue |
|---|---|
| The maximum message size is 64 KB. | The maximum message size is 256 KB. |
| Supports one-to-one delivery of messages. | Supports both one-to-one and one-to-many delivery of messages. |
| Transactions are not supported. | Transactions are supported. |
| Supports only batch receive. | Supports both batch send and batch receive of messages. |
| Receiving messages is non-blocking. | Receiving can be either blocking or non-blocking, depending on the configuration. |

What Are Some Issues You Can Face With Azure Databricks

You might face cluster creation failures if you don't have enough credits to create more clusters. Spark errors occur if your code is not compatible with the Databricks runtime. You can also come across network errors if the network is not configured properly or if you're trying to reach Databricks from an unsupported location.


What Are The Different Types Of Storage Offered By Azure

Storage questions are very commonly asked during an Azure Interview. Azure has four different types of storage. They are:

Azure Blob Storage

Blob Storage enables users to store unstructured data that can include pictures, music, video files, etc. along with their metadata.

- When an object is changed, it is verified to ensure it is of the latest version.

- It provides maximum flexibility to optimize the user's storage needs.

- Unstructured data is available to customers through REST-based object storage.

Azure Table Storage

Table Storage enables users to perform deployment with semi-structured datasets and a NoSQL key-value store.

- It is used to create applications requiring flexible data schema

- It follows a strong consistency model, focusing on enterprises

Azure File Storage

File Storage provides file-sharing capabilities accessible by the SMB protocol

- The data is protected by SMB 3.0 and HTTPS

- Azure takes care of managing hardware and operating system deployments

- It improves on-premises performance and capabilities

Azure Queue Storage

Queue Storage provides message queueing for large workloads

- It enables users to build flexible applications and separate functions

- It ensures the application is scalable and less prone to individual components failing

- It enables queue monitoring which helps ensure customer demands are met

How To Create A New Storage Account And Container Using Power Shell

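    # The expression after "st" + was lost in the source; Get-Random is an assumed way to make the name unique.
    $storageName = "st" + (Get-Random)
    New-AzureRmStorageAccount -ResourceGroupName "myResourceGroup" -AccountName $storageName -Location "West US" -SkuName "Standard_LRS" -Kind Storage
    # The key lookup was also lost in the source; Get-AzureRmStorageAccountKey is assumed here.
    $accountKey = (Get-AzureRmStorageAccountKey -ResourceGroupName "myResourceGroup" -Name $storageName).Value[0]
    $context = New-AzureStorageContext -StorageAccountName $storageName -StorageAccountKey $accountKey
    New-AzureStorageContainer -Name "templates" -Context $context -Permission Container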


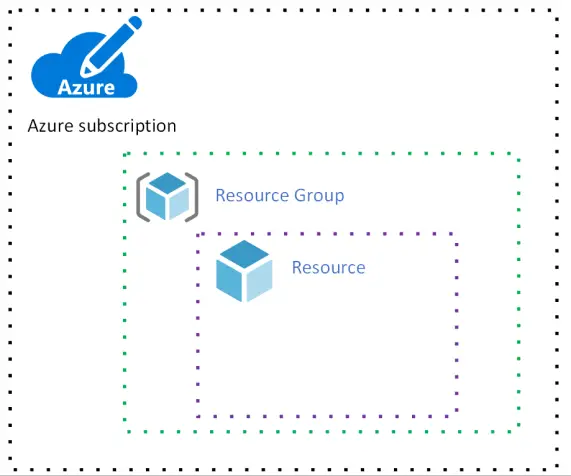

What Are The Advantages Of The Azure Resource Manager

Azure Resource Manager (ARM) enables users to manage their application resources. A few of the advantages of Azure Resource Manager are:

- ARM helps deploy, manage and monitor all the resources for an application, a solution or a group

- Users can be granted access to resources they require

- It obtains comprehensive billing information for all the resources in the group

- Provisioning resources is made much easier with the help of templates

What Is Azure Search

Azure Search is a cloud search-as-a-service solution that delegates server and infrastructure management to Microsoft, leaving you with a ready-to-use service that you can populate with your data and then use to add search to your web or mobile application. It lets you add a robust search experience to your applications using a simple REST API or the .NET SDK, without managing search infrastructure or becoming an expert in search.


What Are Instance Sizes Of Azure

Windows Azure will handle the load balancing for all the instances that are created. The VM sizes are as follows:

| Compute Instance Size | CPU | Memory | Instance Storage | I/O Performance |
|---|---|---|---|---|
| Extra Small | 1.0 GHz | 768 MB | 20 GB | Low |
| Small | 1.6 GHz | 1.75 GB | 225 GB | Moderate |
| Medium | 2 x 1.6 GHz | 3.5 GB | 490 GB | High |
| Large | 4 x 1.6 GHz | 7 GB | 1,000 GB | High |
| Extra Large | 8 x 1.6 GHz | 14 GB | 2,040 GB | High |

Elaborate More On The Get Metadata Activity In Azure Data Factory

The Get Metadata activity is used to retrieve the metadata of any data in the Azure Data Factory or a Synapse pipeline. We can use the output from the Get Metadata activity in conditional expressions to perform validation or consume the metadata in subsequent activities.

It takes a dataset as an input and returns metadata information as output. Currently, the following connectors and the corresponding retrievable metadata are supported. The maximum size of returned metadata is 4 MB.

Please refer to the snapshot below for supported metadata which can be retrieved using the Get Metadata activity.


Specify The Two Levels Of Security In Azure Data Lake Storage Gen2

- Azure Access Control Lists (ACLs): These specify which data objects a user may read, write, or execute. ACLs are familiar to Linux and Unix users because they are POSIX-compliant.

- Azure Role-Based Access Control (RBAC): This comprises various built-in Azure roles such as Contributor, Owner, Reader, and more. It is assigned for two reasons: to state who can manage the service and to allow the use of built-in data explorer tools.
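To make the read, write, and execute idea concrete, here is a minimal sketch of how a POSIX-style permission triplet can be checked. The function name `is_allowed` and the sample entries are hypothetical and only illustrate the concept; this is not how Data Lake Storage evaluates ACLs internally.

```python
def is_allowed(permissions: str, operation: str) -> bool:
    """Check one operation against a POSIX-style triplet such as 'r-x'."""
    if len(permissions) != 3:
        raise ValueError("expected a three-character triplet such as 'rwx'")
    # Position 0 is read, 1 is write, 2 is execute; '-' means not granted.
    position = {"read": 0, "write": 1, "execute": 2}[operation]
    return permissions[position] != "-"


# Hypothetical ACL entries for one directory (illustrative names only).
acl = {"user:alice": "rwx", "group:analysts": "r-x", "other": "---"}

for principal, triplet in acl.items():
    print(principal, "can write:", is_allowed(triplet, "write"))
```

In this sketch only `user:alice` is granted write access, while `group:analysts` can still read and traverse the directory.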

What Is The If Activity In The Azure Data Factory

The If activity is used in Azure Data Factory as a control activity. In some cases, you want to decide whether to take certain steps based on a condition, and the If activity handles this. You pass a Boolean condition to the If activity: if it evaluates to true, the pipeline runs one set of steps, and if it evaluates to false, it runs the other set.
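The branching behaviour described above can be sketched in a few lines. This is a plain Python simulation, not Data Factory code; the function name and the two sample activities are made up for illustration.

```python
from typing import Callable, List


def run_if_activity(condition: bool,
                    if_true_activities: List[Callable[[], str]],
                    if_false_activities: List[Callable[[], str]]) -> List[str]:
    """Run only the branch selected by the Boolean condition, in order."""
    branch = if_true_activities if condition else if_false_activities
    return [activity() for activity in branch]


# Hypothetical scenario: copy files only when at least one file was found.
file_count = 3
result = run_if_activity(
    file_count > 0,
    if_true_activities=[lambda: "copy files"],
    if_false_activities=[lambda: "send alert"],
)
print(result)
```

Only one branch ever runs per evaluation, which is the property that makes the activity useful for validation steps.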


Tell Me About A Problem You Solved At Your Prior Job

This is something to spend some time on when you're preparing responses to possible Azure interview questions. As a cloud architect, you need to show that you are a good listener and problem solver, as well as a good communicator. Yes, you need to know the technology, but cloud computing does not usually involve sitting isolated in a cubicle. You'll have stakeholders to listen to, problems to solve, and options to present. When you answer questions like these, try to convey that you are a team player and a good communicator, in addition to being a really good Azure architect.

Is It Possible To Design Applications That Handle Connection Failure In Azure

Yes, it is possible and is done by means of the Transient Fault Handling Block. There can be multiple causes of transient failures while using the cloud environment:

- Because more load balancers are involved, application-to-database connections can fail periodically.

- With multi-tenant services, calls get slower and eventually time out because other applications are hitting the same resource heavily.

- We ourselves may be hitting the resource too frequently, which causes the service to deliberately deny our connections in order to support the other tenants in the architecture.

Instead of showing errors to the user periodically, the application can recognize the errors that are transient and automatically try to perform the same operation again typically after some seconds with the hope of establishing the connection. By making use of the Transient Fault Handling Application Block mechanism, we can generate the retry intervals and make the application perform retries. In the majority of the cases, the error would be resolved on the second try and hence the user need not be made aware of these errors unnecessarily.

If the connection is not successful, the action is retried based on the retry policy defined. There are three retry strategies: Fixed Interval, Incremental Interval, and Exponential Backoff.
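The three strategies differ only in how the wait between attempts is computed. The sketch below illustrates that idea in plain Python; it is not the Transient Fault Handling Application Block (a .NET library), and the names `TransientError` and `run_with_retry` and all parameter values are assumptions made for the example.

```python
import time
from typing import Callable, Iterator, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Stand-in for an error the application classifies as transient."""


def fixed_interval(retries: int, interval: float) -> Iterator[float]:
    """Wait the same amount of time before every retry."""
    for _ in range(retries):
        yield interval


def incremental_interval(retries: int, initial: float, increment: float) -> Iterator[float]:
    """Wait a little longer before each retry, growing linearly."""
    for attempt in range(retries):
        yield initial + attempt * increment


def exponential_backoff(retries: int, base: float, cap: float) -> Iterator[float]:
    """Double the wait before each retry, never exceeding `cap` seconds."""
    for attempt in range(retries):
        yield min(cap, base * (2 ** attempt))


def run_with_retry(action: Callable[[], T], delays: Iterator[float],
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `action`, retrying on TransientError after each delay in `delays`."""
    for delay in delays:
        try:
            return action()
        except TransientError:
            sleep(delay)
    # Final attempt: any remaining error propagates to the caller.
    return action()
```

A caller would pick one strategy and pass it in, for example `run_with_retry(open_connection, exponential_backoff(5, 1.0, 30.0))`, where `open_connection` is whatever operation may fail transiently. Passing `sleep` as a parameter keeps the helper easy to test without real waiting.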


Is Nested Looping Possible With Azure Data Factory

Data Factory does not directly support nested looping for any looping activity (ForEach or Until). However, a ForEach or Until activity can contain an Execute Pipeline activity, and the pipeline it calls may itself contain a loop activity. In this manner we can achieve nested looping: when the outer loop activity runs, it indirectly calls another loop activity.
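The workaround can be pictured as an outer loop that calls a separate routine holding the inner loop. The following is a plain Python analogy, not Data Factory code; the function names and the sample items are hypothetical.

```python
from typing import List


def run_child_pipeline(item: str, inner_items: List[int]) -> List[str]:
    """Stand-in for the child pipeline, which holds the inner loop."""
    return [f"{item}-{inner}" for inner in inner_items]


def run_parent_pipeline(outer_items: List[str], inner_items: List[int]) -> List[str]:
    """Stand-in for the parent pipeline, which holds the outer loop."""
    results: List[str] = []
    for item in outer_items:
        # This call plays the role of the Execute Pipeline activity.
        results.extend(run_child_pipeline(item, inner_items))
    return results


print(run_parent_pipeline(["a", "b"], [1, 2]))
```

Each outer item triggers one full run of the child routine, so the combined effect is the same as a loop nested inside a loop.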

What Is The Purpose Of Linked Services In Azure Data Factory

Linked services are used mainly for two purposes in Data Factory:

- To represent a data store, i.e., any storage system such as an Azure Blob Storage account, a file share, or an Oracle DB or SQL Server instance.

- To represent a compute resource, i.e., the underlying VM that will execute the activity defined in the pipeline.


How Can You Make Azure Functions

An Azure Function is a way to run small pieces of code in a cloud environment. With Azure Functions, you can use the programming language of your choice. Users pay only for the time their code runs, which means a pay-per-use model is implemented. Functions support a diverse range of languages such as C#, F#, Java, Python, and JavaScript (Node.js). Azure Functions also support continuous integration and deployment. Businesses can use Azure Functions to develop applications without managing servers.

Is It Possible To Have Nested Looping In Azure Data Factory

There is no direct support for nested looping in Data Factory for any looping activity (ForEach or Until). However, we can use a ForEach or Until activity that contains an Execute Pipeline activity, and the called pipeline can have its own loop activity. This way, when we call the outer loop activity it indirectly calls another loop activity, and we'll be able to achieve nested looping.


How Is Lookup Activity Useful In The Azure Data Factory

In an ADF pipeline, the Lookup activity is commonly used for configuration lookups against an available source dataset. It retrieves data from the source dataset and returns it as the output of the activity. Generally, the output of the Lookup activity is used further along in the pipeline to make decisions or to supply configuration values.

In simple terms, lookup activity is used for data fetching in the ADF pipeline. The way you would use it entirely relies on your pipeline logic. It is possible to obtain only the first row, or you can retrieve the complete rows depending on your dataset or query.
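The difference between fetching only the first row and fetching all rows can be sketched as follows. This is a plain Python simulation; the output keys (`firstRow`, `count`, `value`) are meant to mirror the shape of the Lookup activity's output and should be treated as an assumption to verify against the current documentation. The configuration rows are invented.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def lookup(rows: List[Row], first_row_only: bool = True) -> Dict[str, Any]:
    """Return either the first row or every row, wrapped like an activity output.

    Assumes `rows` is non-empty; the empty case is not modelled here.
    """
    if first_row_only:
        return {"firstRow": rows[0]}
    return {"count": len(rows), "value": list(rows)}


# Hypothetical configuration table read by the lookup.
config_rows = [
    {"table": "customers", "load": "full"},
    {"table": "orders", "load": "incremental"},
]

print(lookup(config_rows)["firstRow"]["table"])
print(lookup(config_rows, first_row_only=False)["count"])
```

A downstream step would typically read a single setting from the first-row form, or iterate over the list form to process one table per iteration.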


Why Is Azure Data Factory Necessary

- Data comes from many different sources, so the amount of data gathered can be overwhelming. In such cases, we need Azure Data Factory to carry out the process of storing data and transforming it in an efficient and organised way.

- Traditional data warehouses can carry out this process. However, there can be many disadvantages to this process.

- Data comes in many different formats from different sources, and processing and transforming this data needs to be structured.


What Are The Various Models Available For Cloud Deployment

There are 3 models available for cloud deployment:

- Public Cloud: In this model, the cloud infrastructure is owned by the cloud provider and is open to the public, so server resources may be shared between multiple applications.

- Private Cloud: Here, the cloud infrastructure is owned exclusively by us, or the cloud provider offers us an exclusive service. This includes hosting our applications on our own on-premises servers or on a dedicated server provided by the cloud provider.

- Hybrid Cloud: This model combines the public and private cloud models.

Where Can I Find A List Of Applications That Are Pre

Azure AD has around 2600 pre-integrated applications. All pre-integrated applications support single sign-on (SSO). SSO lets you use your organizational credentials to access your apps. Some of the applications also support automated provisioning and de-provisioning.


List The Steps Through Which You Can Access Data Using The 80 Types Of Datasets In Azure Data Factory

In its current version, the Mapping Data Flow (MDF) feature natively supports SQL Data Warehouse, SQL Database, and Parquet and text files stored in Azure Blob Storage and Data Lake Storage Gen2 as source and sink. To access data from any other connector, use the Copy activity to stage the data first. After that, run a Data Flow activity to transform the data once staging is complete.

How To Achieve Security In Hadoop

Hadoop relies on Kerberos for security. Perform the following steps to achieve security in Hadoop:

1) The first step is to secure the authentication channel between the client and the server. The authentication server provides a time-stamped TGT (Ticket-Granting Ticket) to the client.

2) In the second step, the client uses the received time-stamped TGT to request a service ticket from the TGS (Ticket-Granting Server).

3) In the last step, the client uses the service ticket to authenticate itself to a specific server.


What Is The Azure Traffic Manager

Azure Traffic Manager is a traffic load balancer that enables users to provide high availability and responsiveness by distributing traffic in an optimal manner across global Azure regions.

- It provides multiple automatic failover options

- It helps reduce application downtime

- It enables the distribution of user traffic across multiple locations

- It enables users to know where customers are connecting from


What Are The Different Types Of Synapse Sql Pools Available

Azure Synapse Analytics is an analytics service that brings together enterprise data warehousing and Big Data analytics. Dedicated SQL pool refers to the enterprise data warehousing features that are available in Azure Synapse Analytics. There are two types of Synapse SQL pools:

- Serverless SQL pool

- Dedicated SQL pool


Why Hadoop Uses Context Object

The Hadoop framework uses the Context object with the Mapper class in order to interact with the rest of the system. The Context object receives the system configuration details and the job in its constructor.

We use the Context object to pass information in the setup, cleanup, and map methods. This object makes vital information available during the map operations.

How Can I Schedule A Pipeline

You can use the tumbling window trigger or the schedule trigger to schedule a pipeline. The schedule trigger uses a wall-clock calendar schedule, which can run pipelines periodically or in calendar-based recurrence patterns.

Currently, the service supports three types of triggers:

- Tumbling window trigger: A trigger that operates on a periodic interval while retaining state.

- Schedule trigger: A trigger that invokes a pipeline on a wall-clock schedule.

- Event-based trigger: A trigger that responds to an event, e.g., a file being placed inside a blob container.

Pipelines and triggers have a many-to-many relationship. Multiple triggers can kick off a single pipeline, or a single trigger can kick off numerous pipelines.
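A tumbling window is a series of fixed-size, contiguous, non-overlapping time intervals. The sketch below generates such windows in plain Python to show the idea; it does not call Data Factory, and the function name and sample times are chosen for the example.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def tumbling_windows(start: datetime, end: datetime,
                     size: timedelta) -> Iterator[Tuple[datetime, datetime]]:
    """Yield back-to-back (window_start, window_end) pairs of equal length.

    A trailing partial window that would run past `end` is not emitted.
    """
    window_start = start
    while window_start + size <= end:
        yield window_start, window_start + size
        window_start += size


start = datetime(2024, 1, 1, 0, 0)
for begin, finish in tumbling_windows(start, start + timedelta(hours=3), timedelta(hours=1)):
    print(begin.isoformat(), "to", finish.isoformat())
```

Because each window ends exactly where the next begins, every moment in the range belongs to exactly one window, which is what lets a pipeline process each slice of data once.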


What Do You Understand By Namenode In Hdfs

NameNode is one of the most important parts of HDFS. It is the master node in the Apache Hadoop HDFS architecture and is used to maintain and manage the blocks present on the DataNodes.

NameNode stores the metadata for all the HDFS data, and it keeps track of the files across the cluster. It is a highly available server that manages the file system namespace and also controls access to files by clients. Note that the data itself is stored in the DataNodes and not in the NameNode.