What Is An RNN

A recurrent neural network (RNN) is a kind of artificial neural network in which the connections between nodes form a directed graph along a temporal sequence, so the network processes data one step at a time. Its hidden state acts as an internal memory of earlier inputs, which is why RNNs are often used for speech recognition and other sequential-data applications.
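To make the idea of internal memory concrete, here is a minimal sketch of a single-unit RNN in plain Python. The hidden state is updated at each time step as h_t = tanh(w_x · x_t + w_h · h_(t−1) + b). The weight values are arbitrary assumptions chosen for illustration, not trained parameters.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a single-unit RNN over a sequence and return every hidden state.

    The weights are illustrative assumptions, not learned values.
    """
    h = 0.0  # initial hidden state: the network's "memory" starts empty
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state,
        # so information from earlier time steps carries forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Both sequences end with the same input (0.0) but have different histories.
print(rnn_forward([1.0, 0.0])[-1])
print(rnn_forward([-1.0, 0.0])[-1])
```

The two final hidden states have opposite signs even though the last input is identical, because the state still reflects the first input.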

What Does A Degree Of Freedom Represent In Statistics

Degrees of freedom (DF) are the number of independent values in a calculation that are free to vary. For example, once the mean of a sample of n values is fixed, only n − 1 of the values can vary freely, which is why the sample variance divides by n − 1. Degrees of freedom are a parameter of the t-distribution; the z-distribution (standard normal) has no degrees-of-freedom parameter.

The t-distribution will shift closer to a normal distribution as DF increases. Once DF is greater than about 30, the t-distribution is close enough to a normal distribution that the two are often treated as interchangeable in practice.
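A small sketch of why a sample with a known mean has n − 1 degrees of freedom, using made-up sample values: the deviations from the sample mean must sum to zero, so the last deviation is fully determined by the others.

```python
from statistics import mean

sample = [4.0, 7.0, 9.0, 12.0]  # hypothetical sample, n = 4
m = mean(sample)
deviations = [x - m for x in sample]

# Deviations from the sample mean always sum to zero (up to rounding)...
print(sum(deviations))

# ...so once the first n - 1 deviations are known, the last one is forced.
last = -sum(deviations[:-1])
print(last == deviations[-1])

# Only n - 1 values are free to vary: the degrees of freedom.
df = len(sample) - 1
print(df)
```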

What Is An Outlier How Can Outliers Be Determined In A Dataset

Outliers are data points that differ substantially from the other observations in the dataset. Depending on the learning algorithm, an outlier can sharply reduce a model's accuracy and efficiency.

Two common methods for detecting outliers are:

- Standard deviation/z-score

- Interquartile range (IQR)
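A minimal sketch in plain Python of the z-score rule, alongside the interquartile-range (IQR) rule that is commonly used as a second method. The z-score rule flags points more than a chosen number of standard deviations from the mean; the IQR rule flags points more than 1.5 × IQR below the first quartile or above the third. The thresholds (3 and 1.5) are common conventions rather than fixed requirements, and the data is hypothetical.

```python
from statistics import mean, pstdev, quantiles

def zscore_outliers(data, threshold=3.0):
    """Return values whose absolute z-score exceeds the threshold."""
    mu, sigma = mean(data), pstdev(data)
    return [x for x in data if abs(x - mu) / sigma > threshold]

def iqr_outliers(data, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(data, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]

data = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11, 95]  # hypothetical
print(zscore_outliers(data))
print(iqr_outliers(data))
```

Both rules flag the value 95 here. Note that the outlier itself inflates the mean and standard deviation, which is one reason the IQR rule is often preferred for small samples.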

What Is A Linear Regression Model List Its Drawbacks

A linear regression model is a model in which there is a linear relationship between the dependent and independent variables.

Here are the drawbacks of linear regression:

- Only the mean of the dependent variable (its conditional mean given the inputs) is modeled, not the rest of its distribution.

- It assumes that the observations are independent of each other.

- The method is sensitive to outlier data values.
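To illustrate the last drawback, here is a minimal ordinary least squares fit for a single predictor in plain Python (slope = covariance of x and y divided by variance of x). The data is hypothetical: a single outlier added to otherwise perfectly linear points changes the fitted line dramatically.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

xs = [1, 2, 3, 4, 5]
ys = [2, 4, 6, 8, 10]     # exactly y = 2x
print(fit_line(xs, ys))   # (intercept, slope) = (0.0, 2.0)

# Replace the last point with an outlier and refit.
print(fit_line(xs, ys[:-1] + [30]))
```

With the outlier, the slope triples from 2 to 6 and the intercept drops from 0 to −8.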

Solutions To Statistics Interview Questions

Problem #2 Solution:

When the predictors are highly correlated (multicollinearity), there will be two main problems. The first is that the coefficient estimates, and even their signs, will vary dramatically depending on which particular variables you include in the model. In particular, certain coefficients may have confidence intervals that include 0. The second is that the resulting p-values will be misleading: an important variable might have a high p-value and be deemed insignificant even though it is actually important.

You can deal with this problem by either removing or combining the correlated predictors. When removing predictors, it is best to first understand the causes of the correlation. For combining predictors, it is possible to include interaction terms. Lastly, you should also 1) center the data, and 2) try to obtain a larger sample size.
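One common way to quantify how correlated the predictors are is the variance inflation factor (VIF). In the special case of exactly two predictors, the VIF of each reduces to 1 / (1 − r²), where r is their Pearson correlation; with more predictors you would instead regress each predictor on all the others. The sketch below covers only that two-predictor case, with made-up data. A frequently quoted rule of thumb treats a VIF above 5 or 10 as a sign of problematic multicollinearity.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def vif_two_predictors(x1, x2):
    """VIF shared by both predictors when the model has exactly two."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)

# Hypothetical predictors: x2 is almost a copy of x1.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.1, 1.9, 3.2, 3.9, 5.1]
print(vif_two_predictors(x1, x2))
```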

Problem #9 Solution:

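For X ~ U(a, b) we have the following density:

\[f_X(x) = \frac{1}{b-a}, \quad a \le x \le b\]

Therefore we can calculate the mean as:

\[E[X] = \int_{a}^{b} x f_X(x)\,dx = \int_{a}^{b} \frac{x}{b-a}\,dx = \frac{x^2}{2(b-a)} \Big|_a^b = \frac{a+b}{2}\]

Similarly for variance we want:

\[\operatorname{Var}(X) = E[X^2] - E[X]^2\]

And we have:

\[E[X^2] = \int_{a}^{b} x^2 f_X(x)\,dx = \int_{a}^{b} \frac{x^2}{b-a}\,dx = \frac{x^3}{3(b-a)} \Big|_a^b = \frac{a^2 + ab + b^2}{3}\]

Therefore:

\[\operatorname{Var}(X) = \frac{a^2 + ab + b^2}{3} - \left(\frac{a+b}{2}\right)^2 = \frac{(b-a)^2}{12}\]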
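As a quick numerical sanity check, the sketch below approximates E[X] and E[X²] with a midpoint Riemann sum for assumed endpoints a = 2 and b = 5, and compares the results with the closed forms E[X] = (a + b)/2 and Var(X) = (b − a)²/12.

```python
def uniform_moments(a, b, steps=100_000):
    """Approximate E[X] and Var(X) for X ~ U(a, b) with the midpoint rule."""
    width = (b - a) / steps
    density = 1.0 / (b - a)  # f_X(x) on [a, b]
    e_x = e_x2 = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * width  # midpoint of the i-th subinterval
        e_x += x * density * width
        e_x2 += x * x * density * width
    return e_x, e_x2 - e_x ** 2  # Var(X) = E[X^2] - E[X]^2

a, b = 2.0, 5.0  # assumed endpoints for illustration
mean_approx, var_approx = uniform_moments(a, b)
print(mean_approx, (a + b) / 2)
print(var_approx, (b - a) ** 2 / 12)
```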

Problem #12 Solution:

Since X is normally distributed, we can look at the cumulative distribution function of the normal distribution:

To find the probability that X is at least 2, we can compute:

\ = \frac = \frac \approx 43 \space \text\]

Problem #14 Solution:

and the variance is given by:

Problem #20 Solution:

Assume we have n Bernoulli trials each with a success probability of p:

\ = \frac = p\]

What Is Random Sampling Give Some Examples Of Some Random Sampling Techniques

Random sampling is a sampling method in which each member of the population has a known, non-zero probability of being chosen; in simple random sampling, every member has an equal probability. It is also known as probability sampling.

Here are four main types of random sampling techniques:

- Simple Random Sampling technique - In this technique, a sample is chosen randomly using randomly generated numbers. A sampling frame listing the members of the population is required; its size is denoted by N. Using Excel, one can randomly generate a number for each element that is required.

- Systematic Random Sampling technique - This technique is very common and easy to use in statistics. In this technique, every kth element is sampled: one element is selected from the sampling frame, and each subsequent element is chosen after skipping a predefined interval k.

In a sampling frame, divide the size of the frame N by the sample size n to get k, the sampling interval. Then, starting from a randomly chosen element among the first k, pick every kth element to create your sample.

- Cluster Random Sampling technique - In this technique, the population is divided into clusters or groups in such a way that each cluster represents the population. After that, you can randomly select clusters to sample.

- Stratified Random Sampling technique - In this technique, the population is divided into groups (strata) that have similar characteristics. Then a random sample is taken from each group to ensure that the different segments of the population are represented in the sample.
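The four techniques can be sketched with Python's standard `random` module. The population of 100 numbered members, the sample size, and the rules used to form clusters and strata are all hypothetical choices for illustration.

```python
import random

population = list(range(1, 101))  # hypothetical sampling frame, N = 100
n = 10                            # desired sample size
rng = random.Random(42)           # seeded so results are reproducible

# Simple random sampling: every member has the same chance of selection.
simple = rng.sample(population, n)

# Systematic sampling: interval k = N / n, random start within the first k.
k = len(population) // n
start = rng.randrange(k)
systematic = population[start::k]

# Cluster sampling: split into clusters (here, blocks of 10 consecutive
# members), randomly pick whole clusters, and keep every member in them.
clusters = [population[i:i + 10] for i in range(0, len(population), 10)]
cluster_sample = [x for c in rng.sample(clusters, 1) for x in c]

# Stratified sampling: split into strata (here, odd vs even, an arbitrary
# illustrative rule) and draw randomly from each one.
strata = {
    "odd": [x for x in population if x % 2 == 1],
    "even": [x for x in population if x % 2 == 0],
}
stratified = [x for group in strata.values() for x in rng.sample(group, n // 2)]

print(simple, systematic, cluster_sample, stratified, sep="\n")
```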

Amazon Data Scientist Interview Questions

Being one of the biggest data-driven companies, Amazon is constantly looking for expert data scientists. If you're preparing for a data scientist interview at Amazon, the following are some sample questions you can practice:

Mean, Variance, Standard Deviation

Measures of center and measures of spread are among the very first concepts you should master before delving deeper into statistics.

This is why questions about these concepts are very popular in data science interviews. Companies want to know whether you have a basic knowledge of statistics. Below are examples that ask about these concepts.

Question from :

“In Mexico, if you take the mean and the median age, which one will be higher and why?”

This question tests your knowledge of measures of center. To determine whether the mean or the median will be higher, we need to know the shape of Mexico's age distribution.

According to Statista, Mexico's age distribution is right-skewed. In a right-skewed distribution, the long tail of higher values pulls the mean above the median.

Thus, the mean age is higher than the median age in Mexico.
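A small illustration with made-up, right-skewed ages (many young people and a long tail of older people, not Mexico's actual data) shows the mean landing above the median:

```python
from statistics import mean, median

# Hypothetical right-skewed sample: most values are low, a few are high.
ages = [3, 8, 12, 15, 18, 21, 24, 27, 30, 45, 62, 80]

# The long right tail pulls the mean (28.75) above the median (22.5).
print(mean(ages), median(ages))
```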

Question from Microsoft:

“What is the definition of the variance?”

The variance measures the spread of the data points in a dataset around their mean value. Below is the general equation for the sample variance:

\[S^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}\]

where S² is the sample variance, xᵢ is the i-th sample value, x̄ (x bar) is the sample mean, and n is the total number of samples.
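The sample variance formula, S² = Σ(xᵢ − x̄)² / (n − 1), translates directly into code. This sketch computes it by hand for a hypothetical sample and checks the result against `statistics.variance`, which also uses the n − 1 denominator.

```python
from statistics import variance

def sample_variance(xs):
    """S^2 = sum((x_i - x_bar)^2) / (n - 1)."""
    n = len(xs)
    x_bar = sum(xs) / n
    return sum((x - x_bar) ** 2 for x in xs) / (n - 1)

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical sample
print(sample_variance(data), variance(data))
```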

Airbnb Data Scientist Interview Questions

Being heavily dependent on tech and data, Airbnb is a great place to work for software engineers and data scientists. You can practice the following interview questions for your data scientist interview at Airbnb.

Technical Data Scientist Interview Questions

Statistics and machine learning are important technical skills for data scientists. These questions help measure knowledge, plus the ability to explain complex topics. Some of the questions are also designed to bring out the art and science of data science.

Can You Avoid Overfitting Your Model If Yes Then How

Yes, it is possible to avoid overfitting data models. The following techniques can be used for that purpose.

- Bring more data into the dataset being studied so that it becomes easier to parse the relationships between input and output variables.

- Use feature selection to identify key features or parameters to be studied.

- Employ regularization techniques, which reduce the amount of variance in the results that a data model produces.

- In some cases, a small amount of noise is added to the training data to make the model more robust. This is one form of data augmentation.
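To see how regularization reins in a model, here is a minimal sketch of ridge (L2) regularization for a single feature with no intercept. Minimizing Σ(y − wx)² + λw² gives the closed-form weight w = Σxy / (Σx² + λ), so larger penalties λ shrink the weight toward zero. The data and λ values are illustrative assumptions.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form ridge weight for y ≈ w * x (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # hypothetical, roughly y = 2x

for lam in (0.0, 1.0, 10.0, 100.0):
    print(lam, ridge_weight(xs, ys, lam))
```

With λ = 0 this is ordinary least squares; increasing λ trades a little bias for lower variance.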

Why Must You Update An Algorithm Regularly How Frequently Should You Update It

It is important to update your machine learning algorithms regularly, because the data they learn from and the patterns in it change over time. How often you update them depends on the business use case. For example, fraud detection algorithms need frequent updates because fraud patterns keep evolving. But if you use machine learning to study manufacturing data, those models need to be updated much less frequently.

What Is Deep Learning

Deep learning is one of the essential areas of data science. It is a subfield of machine learning built on artificial neural networks with many layers, and its algorithms are loosely inspired by the structure of the human brain. In deep learning, multiple layers are stacked on top of the raw input, and each layer extracts progressively higher-level features.
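The idea of stacking layers can be sketched as a forward pass through a tiny two-layer network in plain Python: each layer applies a weighted sum followed by a nonlinearity, and its output becomes the next layer's input. The weights here are arbitrary assumptions; in practice they are learned from data, typically with backpropagation.

```python
def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ x + b), one row per unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

x = [1.0, 2.0]  # raw input features

# Hidden layer: 3 units, each combining both inputs (hypothetical weights).
hidden = dense(x, [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, 0.0], relu)

# Output layer: 1 unit built from the hidden layer's features.
output = dense(hidden, [[1.0, -1.0, 0.5]], [0.2], identity)
print(hidden, output)
```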

What Is The Difference Between Descriptive And Inferential Statistics

Descriptive and inferential statistics are two different branches of the field. The former summarizes the characteristics and distribution of a dataset, such as mean, median, variance, etc. You can present those using tables and data visualization methods, like box plots and histograms.

In contrast, inferential statistics allows you to use a sample to test hypotheses and generalize the results to a wider population. Using confidence intervals, you can estimate the population parameters.
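As a rough illustration, the sketch below computes an approximate 95% confidence interval for a population mean as x̄ ± 1.96 · s/√n. Using the normal critical value 1.96 is an assumption that is reasonable for large samples; for small samples a t critical value with n − 1 degrees of freedom is more appropriate. The sample is hypothetical.

```python
import math
from statistics import mean, stdev

def mean_ci_95(sample):
    """Approximate 95% CI for the mean using the normal critical value 1.96."""
    x_bar = mean(sample)
    se = stdev(sample) / math.sqrt(len(sample))  # standard error of the mean
    return x_bar - 1.96 * se, x_bar + 1.96 * se

# Hypothetical sample of 40 measurements between 47 and 53.
sample = [50 + (i % 7) - 3 for i in range(40)]
low, high = mean_ci_95(sample)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
```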

You must be able to explain the mechanisms behind these concepts, as entry-level statistics questions for a data analyst interview often revolve around sampling, the generalizability of results, etc.

Using The Sample Superstore Dataset Display The Top 5 And Bottom 5 Customers Based On Their Profit

- In Tableau, drag the Customer Name field onto Rows, and Profit onto Columns.

- Right-click the Customer Name column to create a set.

- Give the set a name and select the Top tab to choose the top 5 customers by sum of Profit.

- Similarly, create a set for the bottom 5 customers by sum of Profit.

- Select both sets and right-click to create a combined set. Give the set a name and choose All members in both sets.

- Drag the combined top and bottom customers set onto Filters, and the Profit field onto Colour, to get the desired result.
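The steps above use Tableau sets; outside Tableau, the same result comes down to summing profit per customer and taking both ends of the sorted list. Here is a sketch in plain Python; the order rows are hypothetical stand-ins for Sample Superstore records, not the real dataset.

```python
from collections import defaultdict

def top_and_bottom(orders, k=5):
    """Sum profit per customer, then return the k highest and k lowest."""
    totals = defaultdict(float)
    for customer, profit in orders:
        totals[customer] += profit
    # Sort customers by total profit, highest first.
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k], ranked[-k:]

# Hypothetical (customer name, profit) rows.
orders = [("Ann", 120.0), ("Bo", -40.0), ("Cy", 300.0), ("Ann", 15.0),
          ("Di", -250.0), ("Ed", 80.0), ("Fay", 5.0), ("Gus", -10.0),
          ("Hal", 60.0), ("Ivy", -90.0), ("Jo", 200.0), ("Kit", 0.0)]
top, bottom = top_and_bottom(orders, k=5)
print("Top 5:", top)
print("Bottom 5:", bottom)
```

If there are fewer than 2k customers, the two lists overlap, which mirrors what a combined "All members in both sets" filter would show.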

How Do You Determine The Statistical Significance Of An Insight

- The p-value helps you decide whether to reject the null hypothesis. The null hypothesis states that there is no difference between the conditions, and the alternative hypothesis states that there is a difference. After choosing a significance level (alpha) in advance, you collect the data and calculate the p-value.

- If the p-value is lower than alpha, the null hypothesis is rejected and the result is statistically significant. Otherwise, you fail to reject the null hypothesis, which does not prove that the null hypothesis is true.
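For a concrete case, here is a sketch of a two-sided two-proportion z-test, one common way to check whether the difference between two conversion rates in an A/B test is statistically significant. It relies on the normal approximation and the identity P(|Z| ≥ z) = erfc(z/√2). The counts and α = 0.05 are hypothetical.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for H0: both groups share one rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # common rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # P(|Z| >= |z|)
    return z, p_value

alpha = 0.05  # chosen before looking at the data
z, p = two_proportion_z_test(success_a=200, n_a=2000, success_b=250, n_b=2000)
print(f"z = {z:.3f}, p = {p:.4f}, significant: {p < alpha}")
```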

How Does An LSTM Network Work

Long Short-Term Memory (LSTM) is a special kind of recurrent neural network capable of learning long-term dependencies; remembering information for long periods is its default behaviour. There are three steps in an LSTM network:

- Step 1: The network decides what to forget and what to remember (the forget gate).

- Step 2: It selectively updates cell state values (the input gate).

- Step 3: The network decides what part of the current state makes it to the output (the output gate).
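The three steps map onto the forget, input, and output gates. Below is a minimal single-unit LSTM step in plain Python following the standard gate equations. The scalar weights are hypothetical placeholders; a real LSTM uses learned weight matrices over vectors.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM; w maps gate name -> (w_x, w_h, b)."""
    def pre(gate):
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("forget"))         # Step 1: how much old cell state to keep
    i = sigmoid(pre("input"))          # Step 2: how much new information to write
    c_tilde = math.tanh(pre("candidate"))
    c = f * c_prev + i * c_tilde       # updated cell state
    o = sigmoid(pre("output"))         # Step 3: how much of the state to expose
    h = o * math.tanh(c)
    return h, c

weights = {  # hypothetical parameters, not trained values
    "forget": (0.5, 0.5, 1.0),
    "input": (1.0, 0.5, 0.0),
    "candidate": (1.0, 0.5, 0.0),
    "output": (1.0, 0.5, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x, h, c, weights)
print(h, c)
```

Setting the forget gate close to 1 and the input gate close to 0 makes the cell state pass through almost unchanged, which is how an LSTM can carry information across many steps.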

Q: There's One Box With 12 Black And 12 Red Cards And A 2nd Box With 24 Black And 24 Red Cards. If You Want To Draw 2 Cards At Random From One Of The 2 Boxes, Which Box Has The Higher Probability Of Getting The Same Color? Can You Tell Intuitively Why The 2nd Box Has A Higher Probability?

The box with 24 red cards and 24 black cards has a higher probability of getting two cards of the same color. Let's walk through each step.

Let's say the first card you draw from each box is a red Ace.

This means that in the box with 12 reds and 12 blacks, there are now 11 reds and 12 blacks. Therefore, your probability of drawing another red is 11/(11 + 12), or 11/23.

In the box with 24 reds and 24 blacks, there would then be 23 reds and 24 blacks. Therefore, your probability of drawing another red is 23/(23 + 24), or 23/47.

Since 23/47 > 11/23, the second box, with more cards, has a higher probability of producing two cards of the same color. Intuitively, removing one card shifts the color balance less in a larger box, so the second draw is less biased against matching the first.
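The comparison generalizes: for a box with r red and r black cards, P(same color) = 2 · (1/2) · (r − 1)/(2r − 1) = (r − 1)/(2r − 1), which rises toward 1/2 as the box grows. A short exact check with Python's `fractions` module:

```python
from fractions import Fraction

def p_same_color(red, black):
    """Exact probability that two cards drawn without replacement match."""
    total = red + black
    both_red = Fraction(red, total) * Fraction(red - 1, total - 1)
    both_black = Fraction(black, total) * Fraction(black - 1, total - 1)
    return both_red + both_black

small, large = p_same_color(12, 12), p_same_color(24, 24)
print(small, large, large > small)  # 11/23 23/47 True
```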

How Can The Outlier Values Be Treated

Ans: We can identify outlier values by using graphical analysis or univariate methods. When there are only a few outliers, they can be assessed individually; when there are many, they usually need to be substituted with the 1st percentile value (for low outliers) or the 99th percentile value (for high outliers).

Below are the common ways to treat outlier values:

- Cap the value, bringing it down (or up) to a less extreme level

- Remove the value
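Capping extreme values at the 1st and 99th percentiles (often called winsorizing) can be sketched with `statistics.quantiles`. The choice of percentiles and of the `"inclusive"` interpolation method are assumptions; other percentile definitions give slightly different cut-offs. The data is hypothetical.

```python
from statistics import quantiles

def winsorize(data, lower_pct=1, upper_pct=99):
    """Clip values to the given lower/upper percentiles of the data."""
    cuts = quantiles(data, n=100, method="inclusive")  # 99 percentile cut points
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(x, low), high) for x in data]

data = list(range(1, 100)) + [1000]  # hypothetical data with one extreme value
capped = winsorize(data)
print(max(data), max(capped))
```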

What Is The P-Value

A p-value is the probability of obtaining results at least as extreme as the observed results, assuming the null hypothesis is correct. To reject the null hypothesis, the p-value must be lower than a predetermined significance level (α).

The most commonly used significance level is 0.05. This means that if the p-value is below 0.05, we can reject the null hypothesis in favor of the alternative one.

In that case, we say that the results are statistically significant.

This is a fundamental part of data analysis, hence a common statistics interview question.
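As a worked example, suppose (hypothetically) that a coin lands heads 60 times in 100 flips, and we test the null hypothesis that it is fair against the one-sided alternative that it favors heads. The exact p-value is the probability of seeing 60 or more heads when p = 0.5, computed below with `math.comb`.

```python
from math import comb

def binomial_p_value(k, n, p=0.5):
    """One-sided p-value: P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

alpha = 0.05
p_value = binomial_p_value(60, 100)
print(f"p = {p_value:.4f}; reject H0 at alpha = {alpha}: {p_value < alpha}")
```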

Let's Look At Some Statistics Interview Questions

But wait, are you already a data analyst or data scientist, or are you looking to change your career path to become one? If you are looking to become a data analyst, this field of work is right for you if:

- You always analyse things

- You enjoy mathematics and statistics

- You appreciate practical business thinking

- You enjoy building logic and algorithms

- You want to make an impact on the decision making of your company

It's good to test the waters before diving in. At least you will be prepared for what is to come in the future.

What Distinguishes A Data Analyst From A Data Scientist

Finding trends or patterns in data to show new ways for firms to make better operational decisions can make the work of data analysts and data scientists appear to be identical. Data scientists, on the other hand, are generally regarded as having more authority and being more senior than data analysts.

Data scientists are frequently expected to develop their own inquiries into the data, whereas data analysts may assist groups working toward predetermined goals. A data scientist may spend more time building models, leveraging machine learning, or deploying sophisticated programming in order to collect and analyse data.

What Are Some Of The Techniques To Reduce Underfitting And Overfitting During Model Training

Underfitting refers to a situation where the model has high bias and low variance, while overfitting is the situation where the model has high variance and low bias.

Following are some of the techniques to reduce underfitting and overfitting:

For reducing underfitting:

- Increase the number of features

- Remove noise from the data

- Increase the number of training epochs

For reducing overfitting:

- Use random dropouts
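Dropout randomly zeroes a fraction of a layer's activations during training so that the network cannot rely on any single unit. Below is a minimal sketch of the common "inverted dropout" variant, which scales the surviving activations by 1/(1 − rate) so their expected value is unchanged and no rescaling is needed at inference time. The layer values and rate are hypothetical.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout: zero each value with probability `rate` during training."""
    if not training or rate == 0.0:
        return list(activations)  # inference: pass activations through unchanged
    keep = 1.0 - rate
    # Keep each unit with probability `keep`, rescaling survivors by 1 / keep.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded for reproducibility
layer = [0.5, 1.2, -0.3, 0.8, 2.0, 0.1]
print(dropout(layer, rate=0.5, rng=rng))
print(dropout(layer, rate=0.5, training=False))
```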

Don’t Miss: What Are My Weaknesses Job Interview

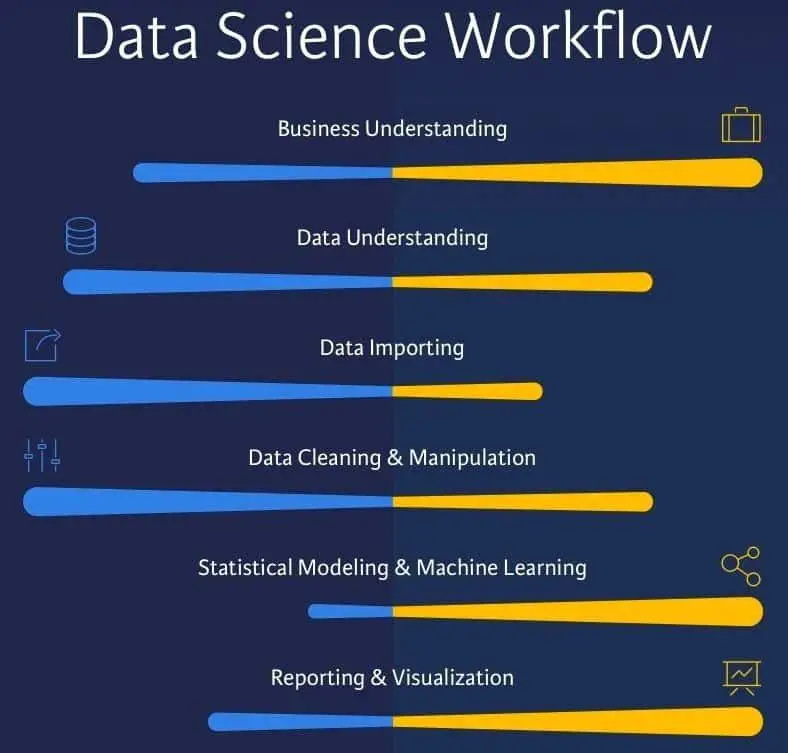

Why Is Data Cleaning Crucial For Analysis

Data cleaning is the process of converting data from diverse sources into a consistent format that data scientists and analysts can use. It is time-consuming because the amount of data that needs to be cleaned grows with both the number of data sources and the volume of data each source generates; it can consume up to 80% of the time spent on an analysis. It is crucial because errors, duplicates, missing values, and inconsistent formats left in the data lead directly to misleading results.
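A few typical cleaning steps, sketched in plain Python on hypothetical records merged from two sources: normalizing inconsistent text, converting types, dropping rows with missing values, and removing duplicates. Real pipelines usually rely on a library such as pandas; this version only illustrates the steps.

```python
def clean(records):
    """Normalize, type-convert, drop incomplete rows, and de-duplicate."""
    seen, cleaned = set(), []
    for rec in records:
        name = (rec.get("name") or "").strip().title()  # " alice " -> "Alice"
        amount = rec.get("amount")
        if not name or amount in (None, ""):
            continue  # drop rows with missing fields
        row = (name, float(amount))  # unify numeric types across sources
        if row not in seen:  # skip exact duplicates after normalization
            seen.add(row)
            cleaned.append(row)
    return cleaned

raw = [  # hypothetical rows from two sources with different conventions
    {"name": " alice ", "amount": "10.5"},
    {"name": "ALICE", "amount": 10.5},
    {"name": "bob", "amount": None},
    {"name": "", "amount": "3"},
    {"name": "Carol", "amount": "7"},
]
print(clean(raw))
```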

What Is Data Science

Ans: Data science is a multidisciplinary field that extracts meaningful insights from different types of data by employing scientific methods, processes, and algorithms. Data science helps solve analytically complex problems in a simplified way, and it lets you turn raw data into business value.
