Describe The Features Of A Database Management System

- A DBMS supports a multi-user environment, allowing users to access and process data simultaneously.

- It adheres to the ACID properties (atomicity, consistency, isolation, and durability).

- It enforces security and reduces redundancy.

- It supports multiple views of the data.

- It builds tables from entities and their relationships.

- It makes data sharing and multi-user transaction processing easier.
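To make the ACID point concrete, here is a minimal sketch of atomicity using Python's built-in `sqlite3` module. The `accounts` table, the `transfer` helper, and the balances are hypothetical and chosen only for illustration; other database systems expose transactions through different APIs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table: the CHECK constraint forbids negative balances.
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Credit dst and debit src as one transaction; return True on success."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


ok = transfer(conn, 1, 2, 30)       # both updates succeed and are committed
failed = transfer(conn, 1, 2, 500)  # the debit violates the CHECK constraint

# The credit from the failed transfer was rolled back along with the debit.
balances = dict(conn.execute("SELECT id, balance FROM accounts"))
print(ok, failed, balances)
```

In the failed transfer the credit is applied first and then undone, which is the all-or-nothing behaviour that atomicity describes.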

You Have Mentioned Data Mart Several Times In This Interview What Is It

Data is grouped into datasets and then stored in data marts. A data mart is therefore a condensed version of a data warehouse, that is, a subset of it. Data marts are generally built for individual units and departments: in an organization, the marketing, sales, finance, and HR departments will each have their own data mart. Some data marts can also interact with the data warehouse for data processing, although this is typically limited to specific departments.

What Is The Key Difference Between A Data Mart And A Data Warehouse

Both a data mart and a data warehouse are used to store data. The main difference is that a data warehouse is data-oriented, whereas a data mart is project-oriented. The key differences between a data mart and a data warehouse are listed in the following table:

| Data Mart | Data Warehouse |
| --- | --- |
| A data mart is a subset of a data warehouse. It is small in size. | A data warehouse is a superset of a data mart. It is huge in size. |
| Data marts give users access to specific data, so it is easy to fetch data quickly. | A data warehouse is very large, so retrieving specific data from it can be complicated and time-consuming. |
| Generally, a data mart is less than 100 GB. | A data warehouse is usually larger than 100 GB and often a terabyte or more. |
| It mainly focuses on a single subject area of the business. | It is spread wide and ranges across multiple subject areas of the business. |
| A data mart follows the bottom-up model. | A data warehouse follows the top-down model. |
| The data comes from one data source. | The data comes from more than one heterogeneous data source. |
| It is used to make tactical decisions for business growth. | It helps business owners make strategic decisions. |
| It is limited in scope. | It is large in scope. |
| It is project-oriented. | It is data-oriented. |

What Are The Four Different Types Of Data Models

The four different types of data models are:

- Relational model: The relational model, which is the most popular, organizes data into tables (relations) that consist of columns and rows.

- Hierarchical model: The hierarchical model organizes data in a tree-like layout in which each record has a single parent, starting from a root.

- Network model: The network model extends the hierarchical model by allowing many-to-many links between connected records, so a record can have several parent records.

- Entity-relationship model: This model, like the network model, represents relationships between real-world entities, although it doesn't map closely to the database's physical design.

What Do You Understand By Surrogate Key What Are The Benefits Of Using The Surrogate Key

A surrogate key is a system-generated unique key used to identify an entity in the client's business or an object within the database. It is used when natural keys cannot serve as the table's unique primary key. In that case, the data modeler or architect decides to use surrogate keys, also known as helping keys, for a table in the logical data model (LDM). A surrogate key is thus a substitute for a natural key.

Following are some benefits of using surrogate keys:

- Surrogate keys uniquely identify a record, are convenient to use in SQL queries, and perform well.

- Surrogate keys are usually numeric, which gives good performance during data processing and business queries.

- Surrogate keys do not change while the row exists.

- Natural keys can change in the source system, for example during a migration to a new system, which makes them unreliable in the data warehouse. Surrogate keys avoid this problem.

- If we use surrogate keys and share them across tables, we can automate the code, making the ETL process simpler.
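The benefits above can be sketched with Python's built-in `sqlite3` module. The `customer` and `orders` tables, the `customer_sk` column, and the e-mail addresses are hypothetical names invented for this illustration; the point is only that the natural key can change while the surrogate key, and every row that references it, stays put.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE customer (
    customer_sk INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
    email       TEXT NOT NULL UNIQUE                -- natural key from the source
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_sk INTEGER NOT NULL REFERENCES customer(customer_sk),
    amount      REAL NOT NULL
);
""")

cur = conn.execute(
    "INSERT INTO customer (email) VALUES (?)", ("ana@old-domain.example",)
)
customer_sk = cur.lastrowid  # value generated by the database
conn.execute(
    "INSERT INTO orders (customer_sk, amount) VALUES (?, ?)", (customer_sk, 42.0)
)

# The natural key changes in the source; the surrogate key is untouched,
# so the order row needs no update.
conn.execute(
    "UPDATE customer SET email = ? WHERE customer_sk = ?",
    ("ana@new-domain.example", customer_sk),
)
conn.commit()

row = conn.execute("""
    SELECT c.customer_sk, c.email, o.amount
    FROM orders o JOIN customer c ON c.customer_sk = o.customer_sk
""").fetchone()
print(row)
```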

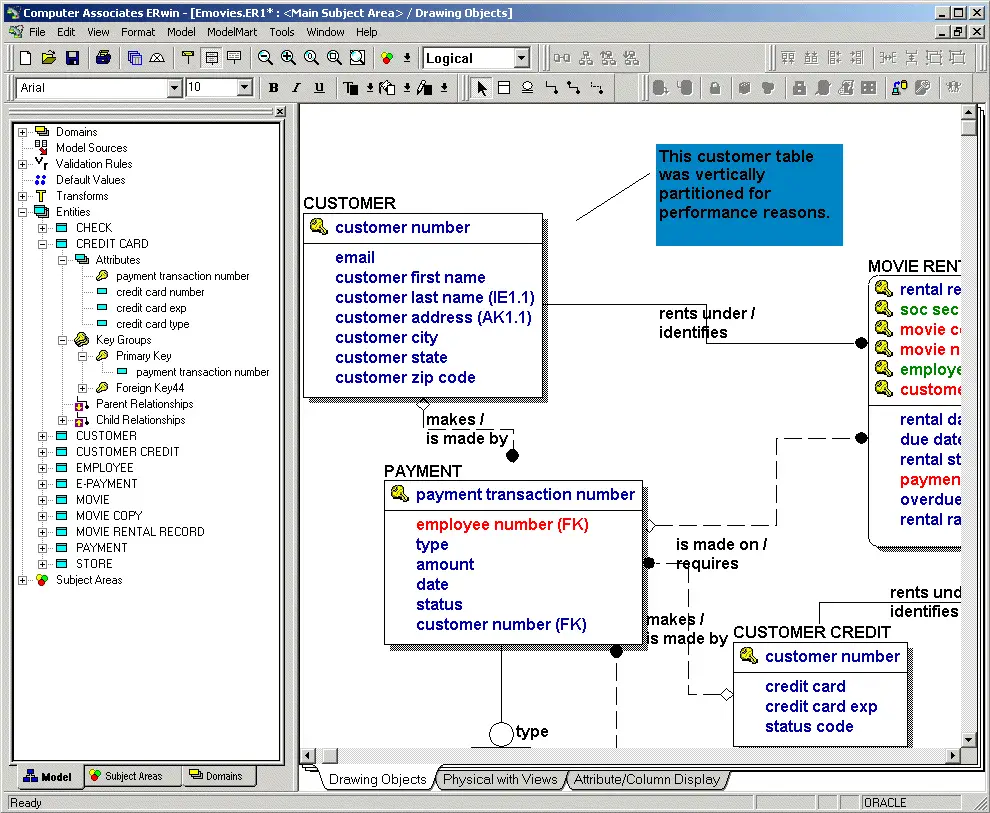

What Is The Use Of Erwin Data Modeler

This ERwin tool interview question examines whether you are up to date with the latest software and whether you know its different capabilities. It vets a modeler's ability to normalize a data model and help a client get the most authentic information.

For an effective answer, explain what you find to be its strongest feature or function, what makes it unique and in what capacity you have used it.

Example: In my position as team lead on our last project, I was introduced to ERwin, software used for data modeling. Our client needed a solution to lower data management costs. We used it to create an actual database from the physical model, streamlining the entire process. A bonus of ERwin is that it has options for colors, fonts, layouts and more. However, I found it particularly useful that it can be used for reverse engineering.

Data Modeling Interview Questions:

What is your APPROACH to start a Data Model?

What inputs do you need for BUILDING a data model?

What are 5 differences between OLTP and OLAP?

What are various differences between CONCEPTUAL data model and LOGICAL data model?

What is the difference between LOGICAL and PHYSICAL data model?

What are the activities carried out in a Physical Data Modeling?

How are SUPER TYPE SUB TYPE relations resolved in Physical Model?

What type of STANDARDS need to be followed in Naming table/columns?

What are DOMAINS in Data Modeling?

How to VALIDATE a Data Model once it is completed?

What is the difference between an ODS (Operational Data Store) and a Data Warehouse?

What is a Data Mart?

Explain the difference between a Primary key and a Unique key constraint.

Suggest a few Audit Trail columns.

What information is available in a Mapping Document?

The Orders table doesn't have a PI/PK and has duplicates. What is the best way to eliminate them?

What are Functional Requirements?

What are Non-Functional Requirements?

What Do You Mean By A Non-Identifying Relationship

A non-identifying relationship is a relationship between two entities in which an instance of the child entity is not identified through its association with a parent entity. In this case, the child entity is not dependent on the parent entity and can exist without it. This relationship is drawn as a dotted line connecting the two tables.
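As a rough illustration, the sketch below contrasts a non-identifying relationship with an identifying one using Python's built-in `sqlite3` module. All table and column names (`department`, `employee`, `department_phone`) are hypothetical. In the non-identifying case the child has its own key and the foreign key is optional; in the identifying case the parent's key is part of the child's primary key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);

-- Non-identifying: employee has its own key; dept_id is an optional foreign key.
CREATE TABLE employee (
    emp_id  INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    dept_id INTEGER REFERENCES department(dept_id)
);

-- Identifying (for contrast): the parent's key is part of the child's key.
CREATE TABLE department_phone (
    dept_id INTEGER NOT NULL REFERENCES department(dept_id),
    line_no INTEGER NOT NULL,
    number  TEXT NOT NULL,
    PRIMARY KEY (dept_id, line_no)
);
""")

# An employee can exist without any department.
conn.execute("INSERT INTO employee (emp_id, name, dept_id) VALUES (1, 'Ravi', NULL)")

# A department phone cannot exist without its department.
try:
    conn.execute("INSERT INTO department_phone VALUES (99, 1, '555-0100')")
    orphan_phone_allowed = True
except sqlite3.IntegrityError:
    orphan_phone_allowed = False

employee_count = conn.execute("SELECT COUNT(*) FROM employee").fetchone()[0]
print(employee_count, orphan_phone_allowed)
```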

Common Data Modeling Interview Questions And Answers

As you consider the following questions, try to format your answers using the STAR interview answer technique. This strategy helps you craft answers that illustrate your knowledge and qualifications through specific experiences.

Using the STAR method, discuss an applicable situation, identify the task you needed to complete, outline the actions you took and reveal the results of your efforts.

Here are some common interview questions about data modeling you may be asked during your interview:

What is the use of ERwin data modeler?

What is data mart?

What is an artificial key and when should it be used?

Should all databases be in third normal form?

Differentiate SQL And NoSQL

SQL and NoSQL differ in flexibility, data models, data types, and transactions. SQL databases have a relational data model, deal with structured data, and follow a strict schema. Their transactions follow the ACID properties: atomicity, consistency, isolation, and durability. NoSQL databases, on the other hand, have non-relational data models that deal with semi-structured data and a dynamic schema, making them very flexible. They follow the BASE properties: basically available, soft state, and eventual consistency.

What Do You Understand By Database Normalization

Database normalization is the process of structuring and designing a database to reduce data redundancy and improve data integrity. It is usually applied to a relational database by bringing its tables into a series of so-called normal forms. Edgar F. Codd first proposed the process of database normalization as part of his relational model.

How Many Types Of Normalization Exist Mention The Rules For The Second Normal Form And Third Normal Form

Normal forms are classified as follows:

1) first normal form,

2) second normal form,

3) third normal form,

4) Boyce-Codd normal form and fourth normal form, and

5) fifth normal form.

Second-normal-form rules are as follows:

- It must already be in the first normal form.

- It doesn't have non-prime attributes that functionally depend on a proper subset of any of the relation's candidate keys.

Third-normal-form rules are as follows:

- It must already be in the second normal form.

- It has no non-prime attributes that depend transitively on a candidate key.
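The two sets of rules can be illustrated with a small Python sketch. The helper `fd_holds` and the sample rows are hypothetical; the helper only checks whether a functional dependency holds in the sample data, which can suggest a dependency but cannot prove one for the real domain.

```python
def fd_holds(rows, lhs, rhs):
    """Return True if equal lhs values always imply equal rhs values in rows."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        val = tuple(row[c] for c in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True


# Candidate key: (order_id, product_id).
order_lines = [
    {"order_id": 1, "product_id": "P1", "product_name": "Pen", "qty": 2},
    {"order_id": 1, "product_id": "P2", "product_name": "Ink", "qty": 1},
    {"order_id": 2, "product_id": "P1", "product_name": "Pen", "qty": 5},
]

# product_name depends on only part of the key: a 2NF violation.
partial = fd_holds(order_lines, ["product_id"], ["product_name"])
# qty needs the whole key, so it is fine where it is.
qty_partial = fd_holds(order_lines, ["product_id"], ["qty"])

# 2NF fix: move the partially dependent attribute into its own table.
products = {r["product_id"]: r["product_name"] for r in order_lines}
lines_2nf = [{k: r[k] for k in ("order_id", "product_id", "qty")} for r in order_lines]

# Key: emp_id. dept_name depends on dept_id, which depends on emp_id:
# a transitive dependency, so this table violates 3NF.
employees = [
    {"emp_id": 1, "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 2, "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 3, "dept_id": 20, "dept_name": "HR"},
]
transitive = fd_holds(employees, ["dept_id"], ["dept_name"])

# 3NF fix: split the transitively dependent attribute out.
departments = {r["dept_id"]: r["dept_name"] for r in employees}
employees_3nf = [{"emp_id": r["emp_id"], "dept_id": r["dept_id"]} for r in employees]

print(partial, qty_partial, transitive)
```

After the split, each product name and each department name is stored once, so a rename touches a single row.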

What Are The Most Common Errors You Can Potentially Face In Data Modeling

These are the errors you are most likely to encounter during data modeling.

- Building overly broad data models: If the number of tables runs higher than 200, the data model becomes increasingly complex, increasing the likelihood of failure.

- Unnecessary surrogate keys: Surrogate keys should only be used when the natural key cannot fulfill the role of a primary key.

- The purpose is missing: Situations may arise where the user has no clue about the business's mission or goal. It's difficult, if not impossible, to create a fitting data model if the data modeler doesn't have a workable understanding of the company's business model.

- Inappropriate denormalization: Users shouldn't use this tactic unless there is an excellent reason to do so. Denormalization improves read performance, but it creates redundant data, which is a challenge to maintain.

Mention Some Of The Fundamental Data Models

- Fully-Attributed Model: This is a third normal form model that provides all the data for a specific implementation approach.

- Transformation Model (TM): Specifies the transformation of a relational model into a suitable structure for the DBMS in use. In most cases, the TM is no longer in the third normal form. The structures are optimized depending on the DBMS's capabilities, data levels, and projected data access patterns.

- DBMS Model: The DBMS Model contains the database design for the system. The DBMS Model can be at the project or area level for the complete integrated system.

What Is Data Modeling

Data modeling is the process of creating a data model for the data to be stored in a database. A data model is a conceptual representation of data objects, the associations between different data objects, and the rules that apply to them. It also represents how the data flows. In other words, data modeling is creating a simplified diagram that contains data elements in the form of text and symbols.

Can You Compare And Contrast Star Schema And Snowflake Schema

Your answer to this question shows how well you understand data organization. The data schema of a database is its structure described in a formal language supported by the management system.

Schema refers to a blueprint of how that data is constructed. Star schema contains a single fact table surrounded by dimension tables.

Snowflake schema typically stores the same data as the star schema, but the information is structured differently because its dimension tables are normalized. When answering, indicate when it is appropriate to use each schema.

Example: Star schema and snowflake schema are the most popular multidimensional models used in a data warehouse and are similar in the data they can store but have one main difference: star schema is denormalized and snowflake schema is normalized. I had a client using star schema to analyze data and because of redundancy, it was inaccurate. Central to star schema is a fact table or several fact tables.

These fact tables reference a series of dimension tables. Star schemas separate fact data, things like price, percentage and weight, from dimensional data, which includes things like color, names and geographic locations. They function well for quick queries and can provide access to basic information on a wide scale. However, the denormalized structure does not enforce data integrity well despite efforts to prevent anomalies from happening. This aspect made data analysis for my client very difficult.
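A star schema of this kind can be sketched in a few lines with Python's built-in `sqlite3` module. The table names (`fact_sales`, `dim_product`, `dim_store`) and the sample rows are hypothetical and exist only to show the shape: one fact table holding measures, joined to dimension tables holding descriptive attributes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive attributes (names, categories, locations).
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT,
    category    TEXT
);
CREATE TABLE dim_store (
    store_key INTEGER PRIMARY KEY,
    city      TEXT
);

-- Fact table: numeric measures plus a key into each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    store_key   INTEGER REFERENCES dim_store(store_key),
    quantity    INTEGER,
    price       REAL
);

INSERT INTO dim_product VALUES
    (1, 'Pen', 'Stationery'), (2, 'Notebook', 'Stationery'), (3, 'Mug', 'Kitchen');
INSERT INTO dim_store VALUES (1, 'Pune'), (2, 'Delhi');
INSERT INTO fact_sales VALUES
    (1, 1, 10, 1.5), (2, 1, 4, 3.0), (3, 2, 2, 8.0), (1, 2, 6, 1.5);
""")

# A typical star-schema query: aggregate the facts, grouped by a dimension attribute.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.quantity * f.price)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)
```

Note that `category` is repeated on every product row. Moving it into its own table, referenced from `dim_product`, would turn this into a snowflake schema: less redundancy, at the cost of one more join per query.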

What Do You Understand By A Data Mart Discuss The Various Types Of Data Marts Available In Data Modeling

A data mart is a subject-oriented database, usually a split portion of a giant data warehouse. The data subset in a data mart usually relates to a specific business area, such as sales, finance, or marketing. Data marts speed up business operations by allowing users to access essential data from a data warehouse in significantly less time. A data mart is a cost-effective way to acquire meaningful insights efficiently, since it only comprises data relevant to a specific business area. There are three types of data marts in data modeling, depending on their relationship to the data warehouse and the data source:

- Dependent data mart: A dependent data mart is built from an existing enterprise data warehouse. It's a top-down method that starts with storing all enterprise data in a single area, then extracting a well-defined piece of the data when it's time to analyze it.

- Independent data mart: An independent data mart is a stand-alone system that concentrates on a specific subject or business operation without using a data warehouse. These data marts store processed data from internal or external data sources until you need that data for business analytics.

- Hybrid data mart: A hybrid data mart integrates an existing data warehouse with other functional data systems. It combines the enterprise-level integration of the top-down method with the speed and end-user emphasis of the bottom-up approach.

What Is Metadata What Are Its Different Types

Metadata is data that provides information about other data. It describes that data but does not include its content, such as the text of a message or the image itself.

In a database system, it is the data about data and shows what type of data is stored.

Following is a list of several distinct types of metadata:

Descriptive metadata: The descriptive metadata provides descriptive information about a resource. It is mainly used for discovery and identification. The main elements of descriptive metadata are title, abstract, author, keywords, etc.

Administrative metadata: Administrative metadata is used to provide information to manage a resource, like a resource type, permissions, and when and how it was created.

Structural metadata: The structural metadata specifies data containers and indicates how compound objects are put together. It also describes the types, versions, relationships, and other characteristics of digital materials. For example, how pages are ordered to form chapters.

Reference metadata: The reference metadata provides information about the contents and quality of statistical data.

Statistical metadata: Statistical metadata describes processes that collect, process, or produce statistical data. It is also called process data.

Legal metadata: The legal metadata provides information about the creator, copyright holder, and public licensing.

What Is The Primary Key / What Is A Primary Key Constraint

The primary key is a column or group of columns that uniquely identifies every row in the table. The primary key constraint is imposed on the column data to prevent null and duplicate values, so a primary key value must not be null. A table can have only one primary key, and every table should have one.

Examples include a social security number, bank account number, bank routing number, phone number, Aadhar number, etc.

What Is The Definition Of A Primary Key

A primary key is a column or set of columns in a relational database management system table that uniquely identifies each record. To avoid null values and duplicate entries, the primary key constraint is applied to the column data. In effect, a primary key combines the UNIQUE and NOT NULL constraints. For instance, a social security number, a bank account number, and a bank routing number can all serve as primary keys.
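The "unique plus not null" behaviour can be observed with a short sketch using Python's built-in `sqlite3` module. The `account` table, the `try_insert` helper, and the sample values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# NOT NULL is spelled out because SQLite, for historical reasons, accepts NULL
# in a non-integer PRIMARY KEY column unless NOT NULL is declared explicitly.
conn.execute(
    "CREATE TABLE account ("
    "account_no TEXT PRIMARY KEY NOT NULL, "
    "holder TEXT NOT NULL)"
)


def try_insert(account_no, holder):
    """Insert one row; report whether the primary key constraint accepted it."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute("INSERT INTO account VALUES (?, ?)", (account_no, holder))
        return "ok"
    except sqlite3.IntegrityError:
        return "rejected"


results = [
    try_insert("ACC-001", "Asha"),   # first use of the key
    try_insert("ACC-001", "Bilal"),  # duplicate key value
    try_insert(None, "Chen"),        # null key value
]
print(results)
```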