What Is Tokenization In NLP

Natural Language Processing aims to program computers to process large amounts of natural language data. Tokenization in NLP is the method of dividing text into tokens. You can think of a token as a word: just as words make up a sentence, tokens make up a text. Splitting the text into these minimal units is an important early step in NLP.
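As a rough illustration of the idea, here is a minimal word-level tokenizer using only Python's standard library. The function name `tokenize` and the regular expression are assumptions made for this sketch; real projects usually rely on a library tokenizer with language-specific rules.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+(?:'\w+)* keeps contractions such as "don't" together;
    # [^\w\s] emits each punctuation mark as its own token.
    return re.findall(r"\w+(?:'\w+)*|[^\w\s]", text)

tokens = tokenize("Jonas ate an orange.")
print(tokens)
```

Keeping punctuation as separate tokens is a design choice; some pipelines drop it at this stage instead.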

What Is Parsing In The Context Of NLP

Parsing in NLP refers to a machine's analysis of a sentence and its grammatical structure. Parsing allows the machine to understand the role of each word in a sentence and how words group into phrases, subjects, and objects. Parsing helps analyze a text or document to extract useful insights from it.

For example, the sentence "Jonas ate an orange" is parsed to understand its structure: "Jonas" is the subject, "ate" is the verb, and "an orange" is the object.

What Is The Naive Bayes Algorithm And When Can We Use It In NLP

Naive Bayes is a family of classifiers based on Bayes' theorem, with the "naive" assumption that features are independent of each other given the class. These algorithms can be used for a wide range of classification tasks, including sentiment prediction, spam filtering, document classification, and more.

Naive Bayes converges faster and requires less training data than discriminative models such as logistic regression, and it takes less time to train. It is well suited to multi-class problems and to text classification where the data is dynamic and changes frequently.
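To make the idea concrete, here is a minimal sketch of a multinomial Naive Bayes text classifier with add-one (Laplace) smoothing, written with the standard library only. The function names, the whitespace tokenization, and the four training sentences are all assumptions made for illustration, not part of any particular library.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents):
    """documents: list of (text, label) pairs."""
    class_counts = Counter()            # number of documents per class
    word_counts = defaultdict(Counter)  # word frequencies per class
    vocabulary = set()
    for text, label in documents:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary

def predict(model, text):
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label, count in class_counts.items():
        # log prior + sum of log likelihoods (the "naive" independence step)
        score = math.log(count / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            smoothed = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(smoothed)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy data, just enough to exercise the code.
training_data = [
    ("win a free prize now", "spam"),
    ("free money offer", "spam"),
    ("meeting schedule for monday", "ham"),
    ("project report attached", "ham"),
]
model = train_naive_bayes(training_data)
print(predict(model, "free prize offer"))
print(predict(model, "monday project meeting"))
```

Training is a single counting pass over the data, which is one reason this family of models is quick to fit and easy to update when new data arrives.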

How Do You Build A Random Forest Model

A random forest is built from a number of decision trees. If you split the data into different subsets and build a decision tree on each subset, the random forest brings all those trees together and combines their predictions.

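Steps to build a random forest model:

- Draw a random sample of the training data, with replacement

- Grow a decision tree on that sample, considering only a random subset of the features at each split

- Repeat the first two steps to build as many trees as required

- Combine the predictions of all trees: majority vote for classification, average for regression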
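The idea of training trees on different samples of the data and bringing them together can be sketched in a few lines. This is a deliberately simplified illustration, not a production implementation: each "tree" is a depth-1 decision stump on one randomly chosen feature, and all names (`fit_stump`, `build_forest`, `predict_forest`) and the toy data are assumptions made for this sketch.

```python
import random
from collections import Counter

def fit_stump(rows, labels, feature):
    """Depth-1 tree: pick the threshold on one feature with the fewest errors."""
    values = sorted(set(row[feature] for row in rows))
    majority = Counter(labels).most_common(1)[0][0]
    best = (len(rows) + 1, None, majority, majority)  # errors, threshold, left, right
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    return feature, best[1], best[2], best[3]

def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    if threshold is None or row[feature] <= threshold:
        return left_label
    return right_label

def build_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(rows) indices with replacement.
        indices = [rng.randrange(len(rows)) for _ in rows]
        sample_rows = [rows[i] for i in indices]
        sample_labels = [labels[i] for i in indices]
        # Random feature selection (one feature per stump in this sketch).
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump(sample_rows, sample_labels, feature))
    return forest

def predict_forest(forest, row):
    # Majority vote across all trees.
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]

# Hypothetical, cleanly separable toy data with two features.
rows = [(x, x + 1) for x in range(10)] + [(x, x + 1) for x in range(20, 30)]
labels = [0] * 10 + [1] * 10
forest = build_forest(rows, labels)
print(predict_forest(forest, (2, 3)), predict_forest(forest, (25, 26)))
```

A full random forest grows deeper trees and re-samples the candidate features at every split; the bootstrap-then-vote structure is the same.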

What Is NLTK And How Is It Different From spaCy

NLTK, or the Natural Language Toolkit, is a suite of libraries and programs for symbolic and statistical natural language processing. The toolkit contains some of the most powerful libraries that can work with different ML techniques to break down and understand human language. NLTK is used for lemmatization, punctuation handling, character counts, tokenization, and stemming. The differences between NLTK and spaCy are as follows:

- While NLTK has a collection of programs to choose from, spaCy contains only the best-suited algorithm for a problem in its toolkit

- NLTK supports a wider range of languages compared to spaCy

- While spaCy has an object-oriented library, NLTK has a string processing library

- spaCy can support word vectors while NLTK cannot

What Is Part Of Speech Tagging

According to The Stanford Natural Language Processing Group:

A Part-Of-Speech Tagger is a piece of software that reads text in some language and assigns parts of speech to each word, such as noun, verb, adjective, etc.

PoS taggers use an algorithm to label terms in text bodies. These taggers make more complex categories than those defined as basic PoS, with tags such as noun-plural or even more complex labels. Part-of-speech categorization is taught to school-age children in English grammar, where children perform basic PoS tagging as part of their education.

Lemmatization generally means doing things properly with the use of a vocabulary and morphological analysis of words. In this process, inflectional endings are removed to return the base or dictionary form of a word, which is known as the lemma.

Example: boys → boy, cars → car, colors → color.

So, the main aim of lemmatization, as well as of stemming, is to reduce the words of a sentence to their root forms so that related word forms can be analyzed together.
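The difference between crude suffix stripping (stemming) and a vocabulary lookup (lemmatization) can be sketched as follows. Both functions and the tiny `LEMMA_EXCEPTIONS` dictionary are hypothetical stand-ins for this illustration; real lemmatizers use a full vocabulary and morphological rules.

```python
# Hypothetical mini-vocabulary of irregular forms, for illustration only.
LEMMA_EXCEPTIONS = {"children": "child", "mice": "mouse", "feet": "foot"}

def naive_stem(word):
    """Strip a plural 's' without consulting any vocabulary."""
    word = word.lower()
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word

def naive_lemmatize(word):
    """Check the vocabulary first, then fall back to suffix stripping."""
    word = word.lower()
    return LEMMA_EXCEPTIONS.get(word, naive_stem(word))

for w in ["boys", "cars", "colors", "children", "glass"]:
    print(w, "->", naive_lemmatize(w))
```

The lookup step is what lets the lemmatizer handle irregular forms such as "children", which pure suffix stripping cannot reduce to "child".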

How To Get An Interview

First and foremost, develop the necessary skills and be sound with the fundamentals. These are some of the areas you should be extremely comfortable with:

- Business Understanding

- SQL and Databases

- Programming Skills

- Mathematics

- Machine Learning and Model building

- Data Structures and Algorithms

- Domain Understanding

- Literature Review : Being able to read and understand a new research paper is one of the most essential and demanding skills needed in the industry today, as the culture of Research and Development, and innovation grows across most good organizations.

- Communication Skills – Being able to explain the analysis and results to business stakeholders and executives is becoming a really important skill for Data Scientists these days.

- Some Engineering knowledge – Being able to develop a RESTful API, write clean and elegant code, and use object-oriented programming are some of the things you can focus on for some extra brownie points.

- Big data knowledge – Spark, Hive, Hadoop, Sqoop.

Build a personal Brand

What Is Regular Grammar

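Regular grammar is used to represent a regular language.

A regular grammar comprises rules of the form A -> a, A -> aB, and A -> ε, where uppercase letters are non-terminals and lowercase letters are terminals. The rules help detect and analyze strings by automated computation.

A regular grammar is defined as a four-tuple (N, Σ, P, S):

- N is the set of non-terminal symbols

- Σ is the set of terminal symbols

- P is the set of production rules

- S ∈ N is the start symbol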
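A small recognizer shows how rules of the form A -> a and A -> aB can be used to check strings automatically. The dictionary encoding of the grammar, the function name `accepts`, and the example grammar (S -> aS | b, which generates zero or more "a" followed by one "b") are assumptions chosen for this sketch; ε-rules are left out to keep it short.

```python
def accepts(grammar, start, text):
    """Check whether `text` can be derived from `start`.

    grammar maps a non-terminal to a list of (terminal, next_non_terminal)
    pairs; next_non_terminal is None for a rule of the form A -> a.
    """
    if not text:
        return False  # this sketch has no epsilon productions
    current = {start}  # non-terminals we could be expanding right now
    for position, symbol in enumerate(text):
        is_last = position == len(text) - 1
        following = set()
        for non_terminal in current:
            for terminal, next_non_terminal in grammar.get(non_terminal, []):
                if terminal != symbol:
                    continue
                if next_non_terminal is None:
                    # A -> a ends the derivation, so it must consume the last symbol.
                    if is_last:
                        return True
                else:
                    following.add(next_non_terminal)
        current = following
    return False

# Example grammar: S -> aS | b
grammar = {"S": [("a", "S"), ("b", None)]}
for candidate in ["b", "aab", "aba", "a"]:
    print(candidate, accepts(grammar, "S", candidate))
```

Tracking a set of possible non-terminals is the same trick used to simulate a nondeterministic finite automaton, which is why regular grammars and finite automata describe the same languages.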

Most Challenging Data Science Interview Questions

The simple but tricky data science questions that most people struggle to answer.

If you ask me, the hiring managers are not looking for the correct answers. They want to evaluate your work experience, technical knowledge, and logical thinking. Furthermore, they are looking for data scientists who understand both the business and technical sides.

For example, during an interview with a top telecommunication company, I was asked to come up with a new data science product. I suggested an open-source solution and let the community contribute to the project. I explained my thought process and how we can monetize the product by providing premium service to paid customers.

I have collected the 12 most challenging data science interview questions with answers. They are divided into three parts: situational, data analysis, and machine learning to cover all the bases.

You can also check out the complete collection of data science interviews: part 1 and part 2. The collection consists of hundreds of questions on all subcategories of data science.

What Is The Difference Between A Box Plot And A Histogram

Both box plots and histograms visually show the distribution of a feature's values.

Box plots are more often used to compare several datasets; compared to histograms, they take less space and contain fewer details. Histograms are used to understand the probability distribution underlying a dataset.

The diagram above denotes a boxplot of a dataset.
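To see why a box plot carries fewer details than a histogram, it helps to compute the numbers each one draws. The sketch below uses only the standard library; the helper names and the sample data are made up for illustration, and the quartiles follow the default method of `statistics.quantiles`, which may differ slightly from what a plotting library uses.

```python
import statistics
from collections import Counter

# Hypothetical sample of one feature's values.
data = [2, 3, 3, 4, 5, 5, 5, 6, 7, 8, 9, 15]

def five_number_summary(values):
    """The five values a box plot is drawn from."""
    q1, median, q3 = statistics.quantiles(values, n=4)
    return min(values), q1, median, q3, max(values)

def histogram(values, bin_width):
    """Counts per bin, keyed by the lower edge of each bin."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))

print(five_number_summary(data))
print(histogram(data, bin_width=5))
```

The box plot compresses the data to five numbers, which makes several datasets easy to compare side by side, while the histogram keeps a count for every bin and so shows the shape of the distribution.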

The Data Scientist Role At IBM

A data scientist's role in any enterprise analytics team ranges from identifying opportunities that offer the greatest insights, to analyzing data to identify trends and patterns, to building pipelines and personalized machine learning models for understanding customers' needs and making better business decisions.

At IBM, the term data science covers a wide scope of data science-related jobs. Roles can include uncovering insights from data collection, organization, and analysis, laying the foundations for information infrastructure, and building and training models with significant results. Roles are sometimes specific to the teams and products assigned, and sometimes they can be more specialized, like the IBM Analytics Consulting Service for internal and external clients.

IBM's data scientists are placed in teams working on IBM products and services such as IBM Watson Studio, IBM Cloud Pak, IBM Db2, IBM SPSS, IBM InfoSphere, etc.

Required Skills:

IBM is a data-driven organization, and data science is a big deal. Data scientist roles at IBM require field specialization, and so IBM hires only highly qualified individuals with at least 3 years of industry experience in data analysis, quantitative research, and machine learning applications.

Other basic qualifications include:

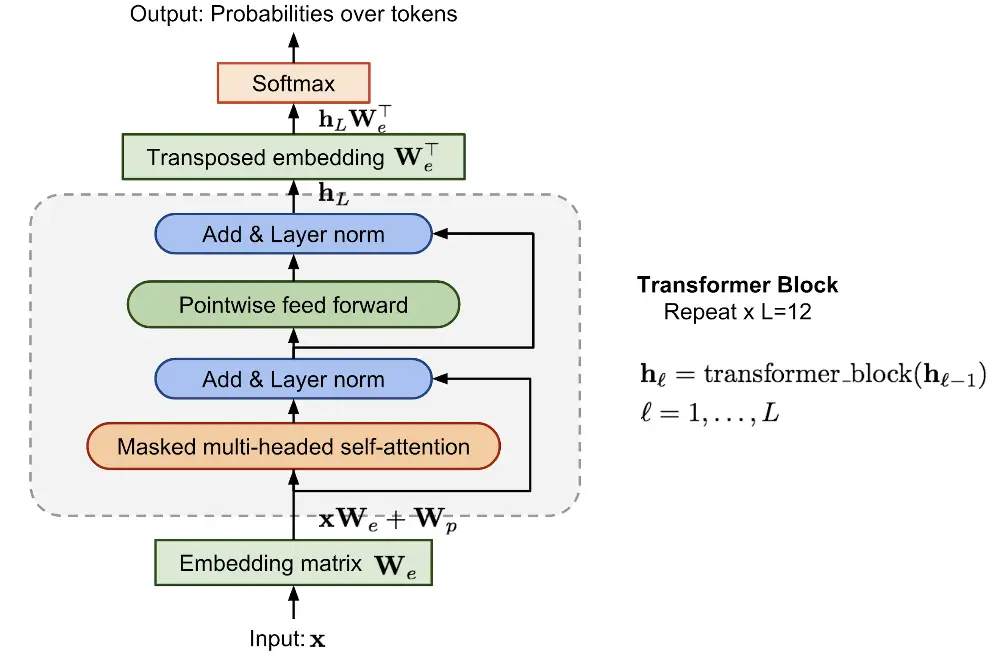

Why Do We Use Convolutions For Images Rather Than Just FC Layers

This one was pretty interesting since it's not something companies usually ask. As you would expect, I got this question from a company focused on computer vision. This answer has two parts to it. Firstly, convolutions preserve, encode, and actually use the spatial information from the image. If we used only FC layers, we would have no relative spatial information. Secondly, Convolutional Neural Networks have a partially built-in translation invariance, since each convolution kernel acts as its own filter/feature detector and is applied across the whole image.
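The second point can be checked with a tiny pure-Python example: when the same pattern appears at a different place in the image, the kernel's strongest response moves with it. The helpers `conv2d`, `argmax2d`, and `make_image` are assumptions written for this sketch, and the operation is the unflipped "valid" cross-correlation that deep learning libraries usually call convolution.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def argmax2d(grid):
    """Return (row, col) of the largest value in a 2D list."""
    positions = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(positions, key=lambda rc: grid[rc[0]][rc[1]])

def make_image(top, left, size=6):
    """A blank image containing one bright 2x2 square."""
    image = [[0] * size for _ in range(size)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            image[r][c] = 1
    return image

kernel = [[1, 1], [1, 1]]  # responds most strongly to a bright 2x2 square
original = conv2d(make_image(1, 1), kernel)
shifted = conv2d(make_image(3, 3), kernel)
print(argmax2d(original), argmax2d(shifted))
```

The same four kernel weights detect the square wherever it appears, whereas a fully connected layer would need separate weights for every position.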

Explain Named Entity Recognition By Implementing It

Named Entity Recognition is an information extraction process. NER helps classify named entities such as monetary figures, locations, things, people, times, and more. It allows the software to analyze and understand the meaning of the text. NER is mostly used in NLP, Artificial Intelligence, and Machine Learning. One of the real-life applications of NER is chatbots used for customer support.

Let's implement NER using the spacy package:

    import spacy

    # Assumption: the original does not name the model. "en_core_web_sm" is
    # spaCy's small English pipeline and must be downloaded separately.
    nlp = spacy.load("en_core_web_sm")

    text = "The head office of Google is in California"
    document = nlp(text)

    # Print each entity's text, character offsets, and label.
    for ent in document.ents:
        print(ent.text, ent.start_char, ent.end_char, ent.label_)

Output (the exact entities and labels depend on the model used):

    Office 9 15 Place
    Google 19 25 ORG
    California 32 41 GPE

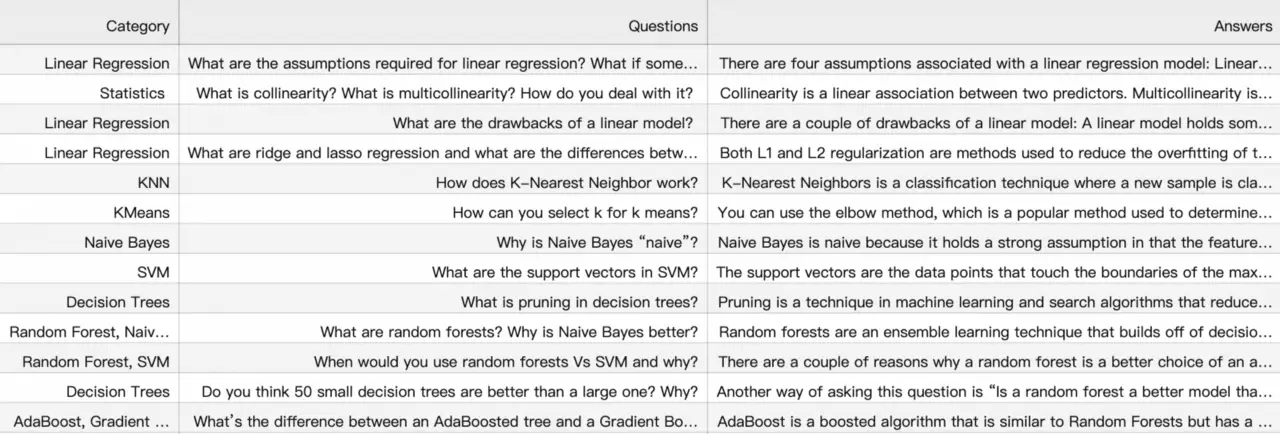

NLP Part II: Data Visualization

In terms of data visualization, I used word clouds to demo my findings.

The idea of a word cloud is to find the most frequent n-grams inside the interview questions & answers. For each of the categories EDA, Methodology, Statistics, and Model, I made a pair of word clouds to compare. It's interesting to see which words are most frequently asked & answered.

For a bi-gram word cloud, I first used the CountVectorizer to generate a frequency dictionary for the bi-grams and then used generate_from_frequencies for the plot. You can check the detailed code and plots as follows:

What Are The Stages In The Lifecycle Of A Natural Language Processing Project

Following are the stages in the lifecycle of a natural language processing project:

- Data Collection: The procedure of collecting, measuring, and evaluating correct insights for research using established approved procedures is referred to as data collection.

- Data Cleaning: The practice of correcting or deleting incorrect, corrupted, improperly formatted, duplicate, or incomplete data from a dataset is known as data cleaning.

- Data Pre-Processing: The process of converting raw data into a comprehensible format is known as data pre-processing.

- Feature Engineering: Feature engineering is the process of extracting features from raw data using domain expertise.

- Data Modeling: The practice of examining data objects and their relationships with other things is known as data modelling. It’s utilised to look into the data requirements for various business activities.

- Model Evaluation: Model evaluation is an important step in the creation of a model. It aids in the selection of the best model to represent our data and the prediction of how well the chosen model will perform in the future.

- Model Deployment: The technical task of exposing an ML model to real-world use is known as model deployment.

- Monitoring and Updating: The activity of measuring and analysing production model performance to ensure acceptable quality as defined by the use case is known as machine learning monitoring. It delivers alerts about performance difficulties and assists in diagnosing and resolving the core cause.

Why Do You Want To Work At This Company As A Data Scientist

The purpose of this question is to determine the motivation behind the candidate's choice to apply and interview for the position. Their answer should reveal their inspiration for working for the company and their drive for being a data scientist. It should show the candidate is pursuing the position because they are passionate about data and believe in the company, two elements that can determine the candidate's performance. Answers to look for include:

- Interest in data mining

- Respect for the company's innovative practices

- Desire to apply analytical skills to solve real-world issues with data

Example:

I have a passion for working for data-driven, innovative companies. Your firm uses advanced technology to address everyday problems for consumers and businesses alike, which I admire. I also enjoy solving issues using an analytical approach and am passionate about incorporating technology into my work. I believe that my skills and passion match the company's drive and capabilities.

Which Of The Following Machine Learning Algorithms Can Be Used For Imputing Missing Values Of Both Categorical And Continuous Variables

- K-means clustering

- Linear regression

- K nearest neighbor

The K nearest neighbor algorithm can be used because it can find the nearest neighbors of a record and, if the record is missing a value, fill it in from those neighbors, which are chosen based on all the other features.

When you’re dealing with K-means clustering or linear regression, you need to handle missing values in your pre-processing; otherwise, they’ll crash. Other algorithms also have the same problem, although there is some variance.
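A minimal sketch of K nearest neighbor imputation for mixed data might look like the following. Everything here is an assumption made for illustration: missing values are marked with `None`, numeric features are taken to be already scaled to comparable ranges, categorical features contribute a 0/1 mismatch to the distance, and the names `distance` and `knn_impute` are made up.

```python
from collections import Counter

def distance(a, b, skip):
    """Mixed distance over the features both rows have, ignoring column `skip`."""
    total = 0.0
    for i, (x, y) in enumerate(zip(a, b)):
        if i == skip or x is None or y is None:
            continue
        if isinstance(x, (int, float)) and isinstance(y, (int, float)):
            total += abs(x - y)              # continuous feature
        else:
            total += 0.0 if x == y else 1.0  # categorical feature
    return total

def knn_impute(rows, k=2):
    completed = [list(row) for row in rows]
    for r_idx, row in enumerate(rows):
        for col, value in enumerate(row):
            if value is not None:
                continue
            # Candidate neighbors: other rows that have a value in this column.
            donors = [o for o in rows if o is not row and o[col] is not None]
            donors.sort(key=lambda other: distance(row, other, col))
            neighbours = [d[col] for d in donors[:k]]
            if all(isinstance(v, (int, float)) for v in neighbours):
                completed[r_idx][col] = sum(neighbours) / len(neighbours)  # mean
            else:
                completed[r_idx][col] = Counter(neighbours).most_common(1)[0][0]  # mode
    return completed

# Hypothetical rows: (scaled numeric, category, numeric)
rows = [
    (0.10, "red", 1.0),
    (0.20, "red", 1.2),
    (0.15, "red", None),
    (0.90, "blue", 5.0),
    (0.80, "blue", 5.2),
    (0.85, None, 5.1),
]
completed = knn_impute(rows)
print(completed[2], completed[5])
```

The same neighbor search serves both variable types: continuous gaps are filled with the neighbors' mean and categorical gaps with their most common value.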

What Are The Steps Involved In Preprocessing Data For NLP

Here are some common pre-processing steps used in NLP software:

- Preliminaries: This includes word tokenization and sentence segmentation.

- Common Steps: Stop word removal, stemming and lemmatization, removing digits/punctuation, lowercasing, etc.

- Processing Steps: Code mixing, normalization, language detection, transliteration, etc.

- Advanced Processing: Parts of Speech tagging, coreference resolution, parsing, etc.
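The preliminary and common steps listed above can be chained into a small pipeline. The sketch below uses only the standard library; the function names, the naive sentence splitter, and the tiny `STOP_WORDS` set are assumptions for illustration, and stemming or lemmatization is left out to keep it short.

```python
import re

# Tiny illustrative stop word list; real lists are much longer.
STOP_WORDS = {"the", "is", "a", "an", "of", "and", "in", "to", "it"}

def split_sentences(text):
    """Sentence segmentation: split after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def preprocess(sentence):
    lowered = sentence.lower()                   # lowercasing
    cleaned = re.sub(r"[^a-z\s]", " ", lowered)  # remove digits and punctuation
    tokens = cleaned.split()                     # word tokenization
    return [t for t in tokens if t not in STOP_WORDS]  # stop word removal

text = "The cat sat. It saw 2 dogs!"
for sentence in split_sentences(text):
    print(preprocess(sentence))
```

The order matters: lowercasing before stop word removal lets "The" and "the" match the same entry in the list.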

What Is Precision And Recall

The metrics used to test an NLP model are precision, recall, and F1. We also use accuracy to evaluate the model's performance. Accuracy is the ratio of correct predictions to the total number of predictions.

Precision is the ratio of true positive instances to the total number of positively predicted instances.

Recall is the ratio of true positive instances to the total number of actual positive instances.
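These definitions translate directly into code. The following is a small sketch for binary labels; the function name and the example label lists are made up for illustration, and F1 is computed as the harmonic mean of precision and recall.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical labels: 1 = positive class, 0 = negative class.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Here the model finds three of the four real positives (recall 0.75) but also raises two false alarms, so only three of its five positive predictions are correct (precision 0.6).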

What Are Unigrams Bigrams Trigrams And N-Grams

When we parse a sentence one word at a time, each word is called a unigram. When the sentence is parsed two words at a time, each pair is a bigram.

When the sentence is parsed three words at a time, each triple is a trigram. Similarly, an n-gram refers to parsing n words at a time.

Example: for the sentence "Jonas ate an orange", the unigrams are "Jonas", "ate", "an", and "orange"; the bigrams are "Jonas ate", "ate an", and "an orange"; and the trigrams are "Jonas ate an" and "ate an orange".

Therefore, this type of parsing allows machines to understand the individual meaning of a word in a sentence. It also helps predict the next word and correct spelling errors.
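Generating n-grams is a one-line sliding window over the tokens. The helper name `ngrams` and the whitespace tokenization are assumptions for this sketch.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "Jonas ate an orange".split()
print(ngrams(tokens, 1))  # unigrams
print(ngrams(tokens, 2))  # bigrams
print(ngrams(tokens, 3))  # trigrams
```

Counting how often each bigram occurs in a large corpus is the basis for simple next-word prediction: given "ate", suggest the word that most often follows it.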

What Is Sentiment Analysis

Business organizations want to understand the opinions of customers and the public. For example, what went wrong with their latest product? What do users and the general public think about the latest feature? Quantifying users' content, ideas, beliefs, and opinions is known as sentiment analysis. It is not limited to marketing; it can also be used in politics, research, and security. Sentiment is more than words: it is a combination of words, tone, and writing style.
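One very simple way to quantify opinion is a lexicon-based score. The sketch below is only an illustration under strong assumptions: the word lists are hypothetical and tiny, the negation handling only looks one word back, and tone or writing style are not captured at all.

```python
import re

# Hypothetical mini-lexicons for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "broken"}
NEGATIONS = {"not", "never", "no"}

def sentiment_score(text):
    """Positive result = positive sentiment, negative = negative, 0 = neutral."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        # Flip the polarity when the previous word is a negation.
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score

print(sentiment_score("I love the new feature"))
print(sentiment_score("The update is not good"))
```

A word-counting approach like this misses sarcasm and context, which is why practical systems usually train a classifier on labeled examples instead.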

What Is Pragmatic Analysis

Pragmatic analysis is an important task in NLP for interpreting knowledge that lies outside a given document. The aim of pragmatic analysis is to explore aspects of a document or text that depend on context rather than on the words alone. This requires comprehensive knowledge of the real world. Pragmatic analysis allows software applications to critically interpret real-world data to determine the actual meaning of sentences and words.

Example:

Consider this sentence: Do you know what time it is?

This sentence can be asked either to find out the time or to scold someone and make them note the time. This depends on the context in which we use the sentence.

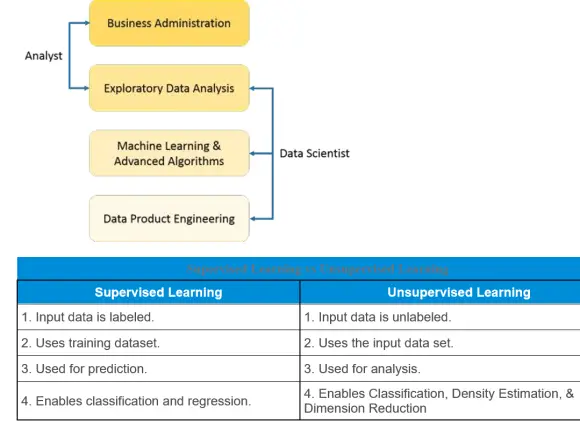

What Is Machine Learning

Machine learning arises from this question: could a computer go beyond what we know how to order it to perform and learn on its own how to perform a specified task? Could a computer do things or learn as a human being does? Rather than programmers crafting data-processing rules by hand, could a computer automatically learn these rules by looking at data?

A machine-learning system is trained rather than explicitly programmed. It's presented with many examples relevant to the task, and it finds statistical structure in these examples that eventually allows the system to come up with rules for automating the task. For instance, if you wished to automate the task of tagging your vacation pictures, you could present a machine-learning system with many examples of pictures already tagged by humans, and the system would learn statistical rules for associating specific pictures to specific tags.

Difference Between An Error And A Residual Error

The differences between an error and a residual error are defined below:

| Error | Residual Error |
| --- | --- |
| The difference between the actual value and the predicted value is called an error. Popular measures of error in data science include mean absolute error (MAE), mean squared error (MSE), and root mean squared error (RMSE). | The difference between the arithmetic mean of a group of values and the observed group of values is called a residual error. |
| An error is generally unobservable. | A residual error can be represented using a graph. |
| An error is how actual population data and observed data differ from each other. | A residual error is used to show how the sample population data and the observed data differ from each other. |
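The distinction can be made concrete with a few numbers. In this sketch the population mean is assumed to be known, which is rarely true in practice and is exactly why errors are generally unobservable; the sample values are made up for illustration.

```python
import statistics

population_mean = 50.0            # assumption: normally unknown
sample = [48.0, 52.0, 47.0, 55.0]
sample_mean = statistics.mean(sample)

# Error: observed value minus the true (population) value.
errors = [x - population_mean for x in sample]

# Residual: observed value minus the value estimated from the sample.
residuals = [x - sample_mean for x in sample]

print(errors, sum(errors))
print(residuals, sum(residuals))
```

Residuals measured from the sample mean always sum to zero, while errors measured from the population mean generally do not.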