Q73 What Is The Difference Between Regression And Classification Ml Techniques

Both regression and classification are supervised machine learning techniques. In supervised learning, we train the model on a labelled data set: during training we explicitly provide the correct labels, and the algorithm tries to learn the mapping from input to output. If the labels are discrete values (e.g. A, B), it is a classification problem; if the labels are continuous values (e.g. 1.23, 1.333), it is a regression problem.

Q100 What Are The Different Layers On Cnn

There are four layers in a CNN:

Convolutional Layer: the layer that performs a convolutional operation, creating several smaller picture windows to go over the data.

ReLU Layer: it brings non-linearity to the network and converts all the negative pixels to zero. The output is a rectified feature map.

Pooling Layer: pooling is a down-sampling operation that reduces the dimensionality of the feature map.

Fully Connected Layer: this layer recognizes and classifies the objects in the image.
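As a rough illustration of the first three layers, here is a minimal pure-Python sketch. The 5x5 `image`, the 2x2 `kernel`, and the function names are made up for this example; real networks learn their kernels and use a library such as TensorFlow or PyTorch.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Replace every negative value with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Down-sample by keeping the maximum of each size x size window."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 5x5 single-channel image and 2x2 kernel.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 3, 2, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 2, 1, 0],
    [0, 1, 0, 2, 1],
]
kernel = [[1, -1], [-1, 1]]

feature_map = conv2d(image, kernel)  # 4x4 feature map
rectified = relu(feature_map)        # negatives set to zero
pooled = max_pool(rectified)         # 2x2 after 2x2 max pooling
print(pooled)
```

The pooled output would then be flattened and passed to the fully connected layer for classification.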

Q81 Describe In Brief Any Type Of Ensemble Learning

Ensemble learning comes in many forms; two of the most popular techniques are described below.

Bagging

Bagging trains similar learners on small samples of the population and then takes the mean of all the predictions. In generalised bagging, you can use different learners on different samples. As you might expect, this helps reduce the variance error.

Boosting

Boosting is an iterative technique that adjusts the weight of an observation based on the last classification. If an observation was classified incorrectly, its weight is increased, and vice versa. Boosting in general decreases the bias error and builds strong predictive models. However, it may overfit the training data.
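The reweighting step can be sketched as follows. This uses an AdaBoost-style update, which is one possible choice and an assumption here, since the text above does not name a specific boosting algorithm. The function name `reweight` is hypothetical.

```python
import math


def reweight(weights, misclassified):
    """AdaBoost-style update: raise the weight of misclassified observations,
    lower the weight of correctly classified ones, then renormalize.
    Assumes the weighted error is strictly between 0 and 1."""
    err = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    alpha = 0.5 * math.log((1 - err) / err)
    updated = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, misclassified)
    ]
    total = sum(updated)
    return [w / total for w in updated]


# Four observations with equal weight; only the first was misclassified.
weights = reweight([0.25, 0.25, 0.25, 0.25], [True, False, False, False])
print(weights)
```

In this run the misclassified observation ends up with half of the total weight, so the next learner focuses on it.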

Q82. What is a Random Forest? How does it work?

Random forest is a versatile machine learning method capable of performing both regression and classification tasks. It can also be used for dimensionality reduction and for handling missing values and outliers. It is a type of ensemble learning method, in which a group of weak models combine to form a powerful model.

In Random Forest, we grow multiple trees as opposed to a single tree. To classify a new object based on attributes, each tree gives a classification. The forest chooses the classification having the most votes and in case of regression, it takes the average of outputs by different trees.
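The aggregation step described above can be sketched in a few lines. The per-tree outputs are invented inputs here; a real forest would get them from its fitted trees.

```python
from collections import Counter
from statistics import mean


def forest_classify(tree_votes):
    """Return the class predicted by the most trees."""
    return Counter(tree_votes).most_common(1)[0][0]


def forest_regress(tree_outputs):
    """Return the average of the trees' numeric outputs."""
    return mean(tree_outputs)


# Hypothetical predictions from five classification trees and three regression trees.
label = forest_classify(["spam", "ham", "spam", "spam", "ham"])
value = forest_regress([2.0, 3.0, 4.0])
print(label, value)
```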

Q67 Explain Decision Tree Algorithm In Detail

A decision tree is a supervised machine learning algorithm mainly used for regression and classification. It breaks down a data set into smaller and smaller subsets while an associated decision tree is incrementally developed. The final result is a tree with decision nodes and leaf nodes. A decision tree can handle both categorical and numerical data.

Statistic And Probability Interview Questions For Data Scientists

Data scientist probability interview questions and answers for practice in 2021 to help you nail your next data science interview.

As a data science aspirant, you would have probably come across the following phrase more than once:

A data scientist is a person who is better at statistics than any programmer and better at programming than any statistician.

Before data science became a well-known career path, companies would hire statisticians to process their data and develop insights based on trends observed. Over time, however, as the amount of data increased, statisticians alone were not enough to do the job. Data pipelines had to be created to munge large amounts of data that couldn't be processed by hand.

The term data science suddenly took off and became popular, and data scientists were individuals who possessed knowledge of both statistics and programming. Today, many libraries are available in languages like Python and R that help cut out a lot of the manual work. Running a regression algorithm on thousands of data points only takes a couple of seconds to do.

However, it is still essential for data scientists to understand statistics and probability concepts to examine datasets. Data scientists should be able to create and test hypotheses, understand the intuition behind statistical algorithms they use, and have knowledge of different probability distributions.

What Is The Law Of Large Numbers In Statistics

The law of large numbers is a theorem stating that as the number of trials increases, the average of the observed results converges to the expected value.

Example: When a fair coin is flipped 100,000 times, the observed proportion of heads is likely to be much closer to 0.5 than when it is flipped only 100 times.
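A quick simulation can make the coin example concrete. This is a minimal sketch with an arbitrary seed; the exact proportions depend on the random draws, so no specific output is claimed.

```python
import random


def heads_proportion(n_flips, rng):
    """Flip a fair coin n_flips times and return the fraction of heads."""
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips


rng = random.Random(0)  # fixed seed so the run is repeatable
small = heads_proportion(100, rng)
large = heads_proportion(100_000, rng)

print(f"100 flips:     {small:.4f}")
print(f"100,000 flips: {large:.4f}")
```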

Q110 What Are The Variants Of Back Propagation

- Stochastic Gradient Descent: We use only a single training example to calculate the gradient and update the parameters.

- Batch Gradient Descent: We calculate the gradient for the whole dataset and perform the update at each iteration.

- Mini-batch Gradient Descent: It's one of the most popular optimization algorithms. It's a variant of Stochastic Gradient Descent in which a mini-batch of samples is used instead of a single training example.
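The three variants differ only in how many examples feed each gradient step, which a single `batch_size` parameter can capture. The sketch below fits a one-parameter model y = w * x with squared error. The data, learning rate, and epoch count are illustrative assumptions.

```python
import random


def gradient_descent(xs, ys, batch_size, lr=0.01, epochs=200, seed=0):
    """Fit y = w * x by minimizing mean squared error.
    batch_size=1 gives stochastic, batch_size=len(xs) gives batch,
    anything in between gives mini-batch gradient descent."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]  # generated from y = 3x, no noise

w_sgd = gradient_descent(xs, ys, batch_size=1)
w_mini = gradient_descent(xs, ys, batch_size=2)
w_batch = gradient_descent(xs, ys, batch_size=len(xs))
print(w_sgd, w_mini, w_batch)
```

On this noise-free toy data all three variants approach w = 3. On real data, smaller batches give noisier but cheaper updates.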

Q: You Are Compiling A Report For User Content Uploaded Every Month And Notice A Spike In Uploads In October In Particular A Spike In Picture Uploads What Might You Think Is The Cause Of This And How Would You Test It

There are a number of potential reasons for a spike in photo uploads:

The method of testing depends on the cause of the spike, but you would conduct hypothesis testing to determine if the inferred cause is the actual cause.

What Is The Central Limit Theorem Explain It Why Is It Important

Statistics How To provides the best definition of CLT, which is:

The central limit theorem states that the sampling distribution of the sample mean approaches a normal distribution as the sample size gets larger no matter what the shape of the population distribution.

The central limit theorem is important because it is used in hypothesis testing and also to calculate confidence intervals.
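The theorem can be checked empirically with a small simulation. The sketch below draws repeated samples from a skewed Exponential(1) population (an assumption chosen for illustration; its mean and standard deviation are both 1) and looks at the distribution of the sample means.

```python
import random
from statistics import mean, stdev

rng = random.Random(42)  # fixed seed so the run is repeatable


def sample_mean(n):
    """Mean of n draws from an Exponential(1) population."""
    return mean(rng.expovariate(1.0) for _ in range(n))


# 2,000 sample means, each from a sample of size 50.
means = [sample_mean(50) for _ in range(2000)]

center = mean(means)   # should be near the population mean, 1
spread = stdev(means)  # should be near 1 / sqrt(50), about 0.141
print(f"mean of sample means: {center:.3f}")
print(f"std of sample means:  {spread:.3f}")
```

Although the population is strongly skewed, a histogram of `means` would look roughly bell-shaped, which is why normal-based confidence intervals and tests work for the sample mean.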

Q83 How Do You Work Towards A Random Forest

The underlying principle of this technique is that several weak learners combine to form a strong learner. The steps involved are:

- Build several decision trees on bootstrapped training samples of the data

- On each tree, each time a split is considered, a random sample of m predictors is chosen as split candidates out of all p predictors

- Rule of thumb: at each split, m ≈ √p

- Predictions: by majority vote
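The two sources of randomness in these steps, bootstrapped rows and a random subset of predictors at each split, can be sketched as follows. The helper names are hypothetical, and the choice of m as the integer part of the square root of p is a common rule of thumb assumed here.

```python
import math
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement."""
    return [rng.choice(rows) for _ in rows]


def split_candidates(n_predictors, rng):
    """Pick m of the p predictors at random, with m = floor(sqrt(p))."""
    m = max(1, int(math.sqrt(n_predictors)))
    return rng.sample(range(n_predictors), m)


rng = random.Random(1)
rows = list(range(10))               # stand-in for 10 training rows
sample = bootstrap_sample(rows, rng)
features = split_candidates(16, rng)  # p = 16 predictors, so m = 4
print(sample, features)
```

Each tree would be grown on its own `sample`, calling `split_candidates` afresh every time a split is considered.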

What General Conditions Must Be Satisfied For The Central Limit Theorem To Hold

Here are the conditions that must be satisfied for the central limit theorem to hold

- The data must follow the randomization condition which means that it must be sampled randomly.

- The Independence Assumptions dictate that the sample values must be independent of each other.

- Sample sizes must be sufficiently large. A common rule of thumb is a sample size of at least 30; the more skewed the population, the larger the sample needed for the normal approximation to be accurate.

What Do You Understand By Covariance

Covariance is a measure of how much two random variables vary together. It indicates how the two variables move in relation to each other and gives the direction of the relationship between them. Covariance can be positive or negative. Positive covariance means that both variables tend to be high or low at the same time. Negative covariance means that when one variable is high, the other tends to be low.
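A small worked example shows both signs. The data below (study hours, test scores, absences) is invented for illustration, and the function computes the sample covariance with an n - 1 denominator.

```python
def covariance(xs, ys):
    """Sample covariance of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]  # rises with hours
absences = [9, 7, 6, 3, 1]     # falls as hours rise

cov_pos = covariance(hours, scores)
cov_neg = covariance(hours, absences)
print(cov_pos, cov_neg)
```

Here `cov_pos` is positive and `cov_neg` is negative, matching the two cases described above.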

What Information Is Gained In A Decision Tree Algorithm

Information gain is the expected reduction in entropy achieved by splitting on an attribute, and it drives how the tree is built: at each node, the split with the highest information gain is chosen. It is computed for a parent node R and its set E of K training examples as the difference between the entropy before the split and the weighted entropy after the split.
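The calculation can be sketched directly from that definition. The labels and the candidate split below are made up for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["yes"] * 5 + ["no"] * 5
# Hypothetical split into two child nodes of five examples each.
split = [["yes"] * 4 + ["no"], ["yes"] + ["no"] * 4]

gain = information_gain(parent, split)
print(round(gain, 4))
```

A split that separated the classes perfectly would have a gain equal to the parent's entropy, which is 1 bit for this balanced parent.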

What Can I Do To Find Out Whether This Data Science Certification Is Good For Me

It’s always beneficial to learn new talents and broaden your knowledge. This Data Science certification was created in collaboration with Purdue University and is an excellent combination of a world-renowned curriculum and industry-aligned training, making this certification in data science a superb choice.

Explain The Steps In Making A Decision Tree

For example, let’s say you want to build a decision tree to decide whether you should accept or decline a job offer. The decision tree for this case is as shown:

It is clear from the decision tree that an offer is accepted if:

- Salary is greater than $50,000

- The commute is less than an hour

- Incentives are offered
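Written as code, the tree reduces to three nested checks. The order in which the conditions are tested is an assumption, since the original figure is not available; the outcome is the same either way.

```python
def accept_offer(salary, commute_hours, incentives_offered):
    """Walk the decision tree: any failed check leads to a 'decline' leaf."""
    if salary <= 50_000:
        return False
    if commute_hours >= 1:
        return False
    return incentives_offered


print(accept_offer(60_000, 0.5, True))   # all three conditions hold
print(accept_offer(60_000, 1.5, True))   # commute too long
print(accept_offer(45_000, 0.5, True))   # salary too low
```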

Data Science Interview Questions 101

"Effective performance is preceded by painstaking preparation." - Brian Tracy

Preparing for a Data Science interview can be tricky as well as daunting, as it's multidimensional. It involves many different facets: probability and statistics, machine learning, software development and, of course, deep learning. Hence it is very important to know what role you are going after. This will highly influence your preparation.

In this blog, I'll be sharing some amazing resources that I have come across while preparing for my DS interview. They cover almost every bit of what one may encounter in a Data Science interview.

Interview preparation for a job role like DATA SCIENTIST isn't the same as preparing for college placements; it's much more than that. There are hundreds of concepts, and each concept is vast enough for one to cover end to end.

Yes, I know, it sounds very overwhelming. But please don't freak out! Once you get the hang of a specific concept, the rest becomes a lot easier. You just need to TAKE THE FIRST STEP AHEAD and the REST WILL FOLLOW 🙂

Here is the link to a compiled list of 600+ questions and their respective answers. This is my favorite one on the list.

Topics included :

Here is a github repo I found with segregated interview resources for Data Science just in case you wish to refer! Credits- rbhatia

Q7 What Is The Difference Between 1

You can answer this question by first explaining what exactly T-tests are. Refer below for an explanation of the T-test.

T-tests are a type of hypothesis test used to compare means. Each test that you perform on your sample data reduces the sample data to a single value, the T-value. Refer below for the formula.

Fig 7: Formula to calculate the t-value

Now, to explain this formula, you can use the analogy of the signal-to-noise ratio, since the formula is in a ratio format.

Here, the numerator would be a signal and the denominator would be the noise.

So, to calculate 1-Sample T-test, you have to subtract the null hypothesis value from the sample mean. If your sample mean is equal to 7 and the null hypothesis value is 2, then the signal would be equal to 5.

So, we can say that the difference between the sample mean and the null hypothesis is directly proportional to the strength of the signal.

Now, if you observe the denominator which is the noise, in our case it is the measure of variability known as the standard error of the mean. So, this basically indicates how accurately your sample estimates the mean of the population or your complete dataset.

So, you can consider that noise is inversely proportional to the precision of the sample.

Now, the ratio of signal to noise is how you calculate the 1-Sample T-test. It shows how distinguishable your signal is from the noise.
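The signal-to-noise description corresponds to t = (sample mean - null value) / (s / sqrt(n)), where s is the sample standard deviation and n the sample size. A minimal sketch follows; the five data points are invented so that the sample mean is 7 and the null value is 2, as in the example above.

```python
import math
from statistics import mean, stdev


def one_sample_t(sample, null_value):
    """t-value = signal / noise for a 1-sample t-test."""
    signal = mean(sample) - null_value                 # difference from the null
    noise = stdev(sample) / math.sqrt(len(sample))     # standard error of the mean
    return signal / noise


sample = [5, 6, 7, 8, 9]  # sample mean is 7
t_value = one_sample_t(sample, null_value=2)
print(round(t_value, 4))
```

The signal here is 5 and the standard error is about 0.707, so the t-value is roughly 7.07.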

What Are Descriptive Statistics

Descriptive statistics summarize the basic characteristics of a data set in a study or experiment. There are three main types:

- Distribution refers to the frequencies of responses.

- Central tendency gives a measure of the typical or average response (mean, median, mode).

- Variability shows the dispersion of a data set.

How Can You Select K For K

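We use the elbow method to select k for k-means clustering. The idea is to run k-means clustering on the data set for a range of values of ‘k’, the number of clusters, and compute the within-cluster sum of squares (WSS) for each.

WSS is defined as the sum of the squared distances between each member of a cluster and its centroid. When WSS is plotted against k, the chosen k is the point where the curve bends (the elbow), beyond which adding more clusters reduces WSS only slightly.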
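To see the elbow numerically, here is a minimal sketch of k-means on one-dimensional data with a deterministic initialization. Both simplifications are assumptions made to keep the example short; libraries such as scikit-learn handle the general case.

```python
def kmeans_1d(points, k, max_iters=100):
    """Lloyd's algorithm on 1-D data with evenly spaced initial centroids."""
    pts = sorted(points)
    centroids = [pts[(2 * i + 1) * len(pts) // (2 * k)] for i in range(k)]
    for _ in range(max_iters):
        clusters = [[] for _ in range(k)]
        for p in pts:
            nearest = min(range(k), key=lambda j: (p - centroids[j]) ** 2)
            clusters[nearest].append(p)
        updated = [
            sum(c) / len(c) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids, clusters


def wss(centroids, clusters):
    """Sum of squared distances from each point to its cluster centroid."""
    return sum((p - c) ** 2 for c, cl in zip(centroids, clusters) for p in cl)


data = [1, 2, 3, 11, 12, 13]  # two obvious groups
wss_by_k = {k: wss(*kmeans_1d(data, k)) for k in (1, 2, 3)}
print(wss_by_k)
```

WSS drops sharply from k = 1 to k = 2 and only slightly from k = 2 to k = 3, so the elbow is at k = 2.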

Take A Few Minutes To Explain How You Would Estimate How Many Shoes Could Potentially Be Sold In New York City Each June

Many interviewers pose questions that let them see an analyst's thought process without the aid of computers and data sets. After all, technology is only as good and reliable as the people behind it. What to look for in an answer:

- Ability to identify variables/data segments

- Ability to communicate thought process

Example:

First, I would gather data on how many people live in New York City, how many tourists visit in June and the average length of stay. I'd break down the numbers by age, gender and income, and find the numbers on how many shoes they may already have. I'd also figure out why they might need new shoes and what would motivate them to buy.

How Do You Use A Confusion Matrix To Calculate Accuracy

There are four terms to be aware of related to confusion matrices. They are:

True positives (TP): When a positive outcome was predicted and the result came out positive

True negatives (TN): When a negative outcome was predicted and the result turned out negative

False positives (FP): When a positive outcome was predicted but the result is negative

False negatives (FN): When a negative outcome was predicted but the result is positive

The accuracy of a model can be calculated from a confusion matrix using the formula:

Accuracy = (TP + TN) / (TP + TN + FP + FN)
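As a quick check of the formula, here is a minimal sketch with made-up counts for the four cells of the confusion matrix.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical counts: 50 true positives, 40 true negatives,
# 5 false positives, 5 false negatives.
acc = accuracy(tp=50, tn=40, fp=5, fn=5)
print(acc)
```

With these counts, 90 of the 100 predictions are correct, giving an accuracy of 0.9.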

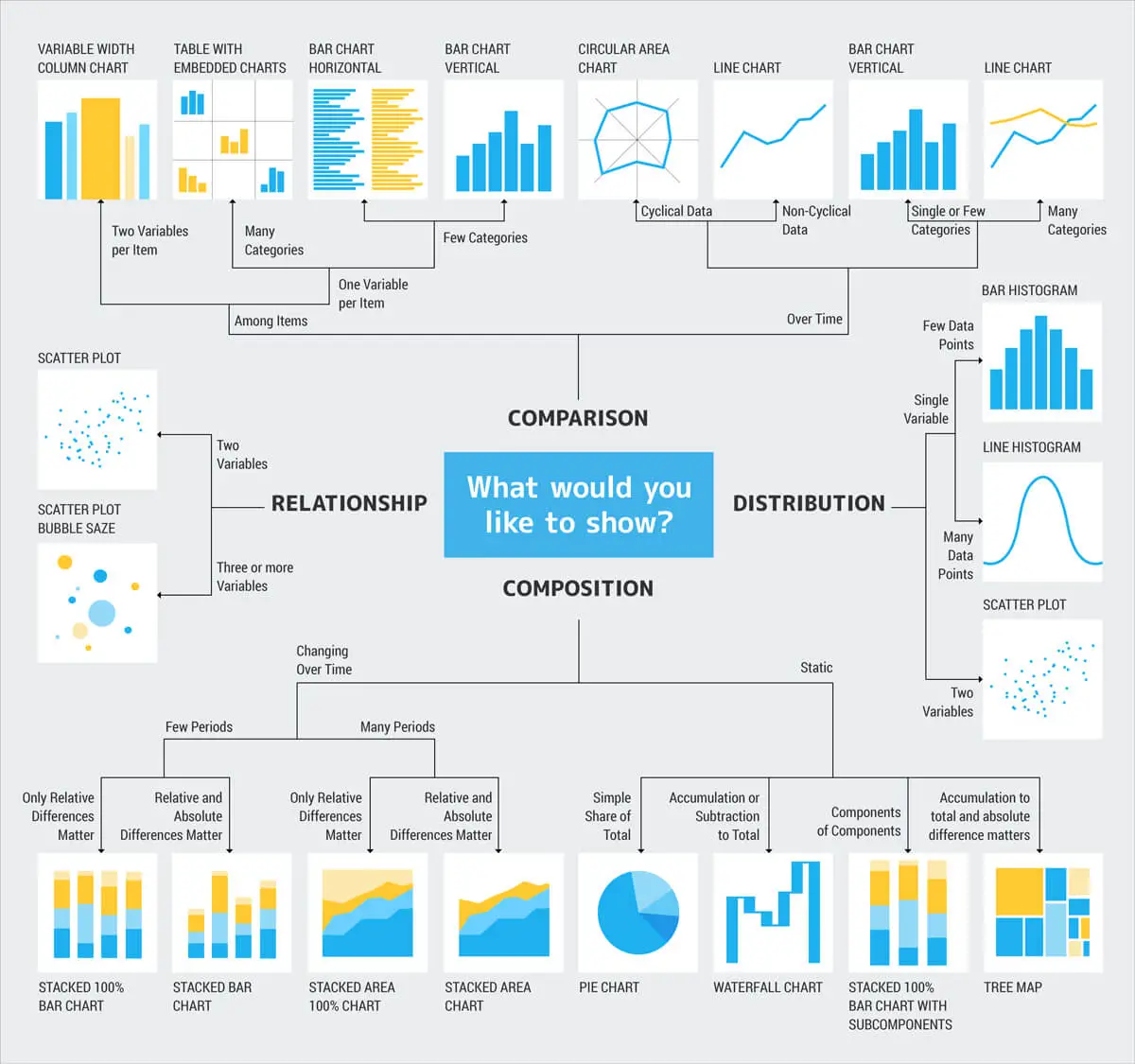

Why R Is Mostly Used In Data Visualization

For the following reasons, R is frequently used in data visualizations:

- R allows us to make practically any type of graph.

- R offers many visualization libraries, such as lattice, ggplot2, and leaflet.

- In comparison to Python, R makes it simpler to personalize graphics.

- R is used for both exploratory data analysis and feature engineering.

Q24 What Do You Understand By The Term Normal Distribution

Data can be distributed in many ways: skewed to the left, skewed to the right, or jumbled with no clear pattern.

However, data may also be distributed around a central value without any bias to the left or right, following a normal distribution in the form of a bell-shaped curve.

Figure: Normal distribution in a bell curve

The random variables are distributed in the form of a symmetrical, bell-shaped curve.

Properties of Normal Distribution are as follows

- Unimodal: one mode

- Symmetrical: left and right halves are mirror images

- Bell-shaped: maximum height at the mean

- Mean, mode, and median are all located in the center

What Is An Outlier How Can Outliers Be Determined In A Dataset

Outliers are data points that differ markedly from the other observations in the dataset. Depending on the learning process, an outlier can worsen the accuracy of a model and sharply decrease its efficiency.

Outliers are determined by using two methods:

- Standard deviation/z-score
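The z-score approach can be sketched as follows. The readings and the threshold of 2 standard deviations are illustrative assumptions; 3 is also a common cutoff, but on small samples a single extreme value may never reach it.

```python
from statistics import mean, pstdev


def zscore_outliers(values, threshold=2.0):
    """Return the values whose absolute z-score exceeds the threshold.
    Assumes the values are not all identical (non-zero standard deviation)."""
    mu = mean(values)
    sigma = pstdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]


readings = [10, 12, 11, 13, 12, 11, 10, 12, 11, 95]
outliers = zscore_outliers(readings)
print(outliers)
```

Only the reading of 95 lies more than 2 standard deviations from the mean, so it is the only value flagged.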

Q62 What Is Naive In A Naive Bayes

The Naive Bayes Algorithm is based on the Bayes Theorem. Bayes theorem describes the probability of an event, based on prior knowledge of conditions that might be related to the event.

The algorithm is naive because it assumes that the features are conditionally independent of one another given the class, an assumption that may or may not hold in practice.

Q63. How do you build a random forest model?

A random forest model combines many decision tree models. The individual trees are typically grown deep, so each has low bias and high variance; averaging across trees reduces the variance. The trees are built independently, so they can be trained in parallel. Each tree is trained on a bootstrap sample of the rows (drawn with replacement) and considers a random subset of the columns. The result of each tree is recorded, and the final answer is the majority vote (the mode) for a classification problem, or the mean (or median) for a regression problem.
