There Are So Many Algorithms That Can Be Used In The Design Of A Rate Limiter

I had always assumed that rate limiters only allowed requests to be made at a fixed rate over a time period and learnt that there is actually a name for this algorithm – Token Bucket. Aside from that, there are various other algorithms that can be used.

The popular ones include:

I will not be covering how each algorithm works in this post.

What Are The Various Consistency Patterns Available In System Design

Consistency from the CAP theorem states that every read request should get the most recently written data. When there are multiple data copies available, there arises a problem of synchronizing them so that the clients get fresh data consistently. Following are the consistency patterns available:

- Weak consistency: After a data write, the read request may or may not be able to get the new data. This type of consistency works well in real-time use cases like VoIP, video chat, real-time multiplayer games etc. For example, when we are on a phone call, if we lose network for a few seconds, then we lose information about what was spoken during that time.

- Eventual consistency: Post data write, the reads will eventually see the latest data within milliseconds. Here, the data is replicated asynchronously. These are seen in DNS and email systems. This works well in highly available systems.

- Strong consistency: After a data write, the subsequent reads will see the latest data. Here, the data is replicated synchronously. This is seen in RDBMS and file systems and are suitable in systems requiring transactions of data.

There Is No Absolute Answer To Where The Rate Limiter Should Be Placed

Prior to reading this book, I would have thought that a rate limiter would always be placed on the server. I have since realised that my assumption was wrong and it ultimately depends on your current stack, resources, and priorities.

Some guidelines include:

- Is the language you are using efficient in implementing rate limiting?

- Do you need full control of the algorithm?

- Do you have resources to build a rate limiter at this time?

- Can your servers support the rate limiter you want to build?

- Could you use a 3rd party service for rate limiting?

You May Like: Interview Attire For Plus Size

Interviewer: How Would You Modify This For A Distributed Environment

This system right now it’s based on a single data center environment. All the requests are coming in through the same rate limiter. They’re going through the same set of web servers.

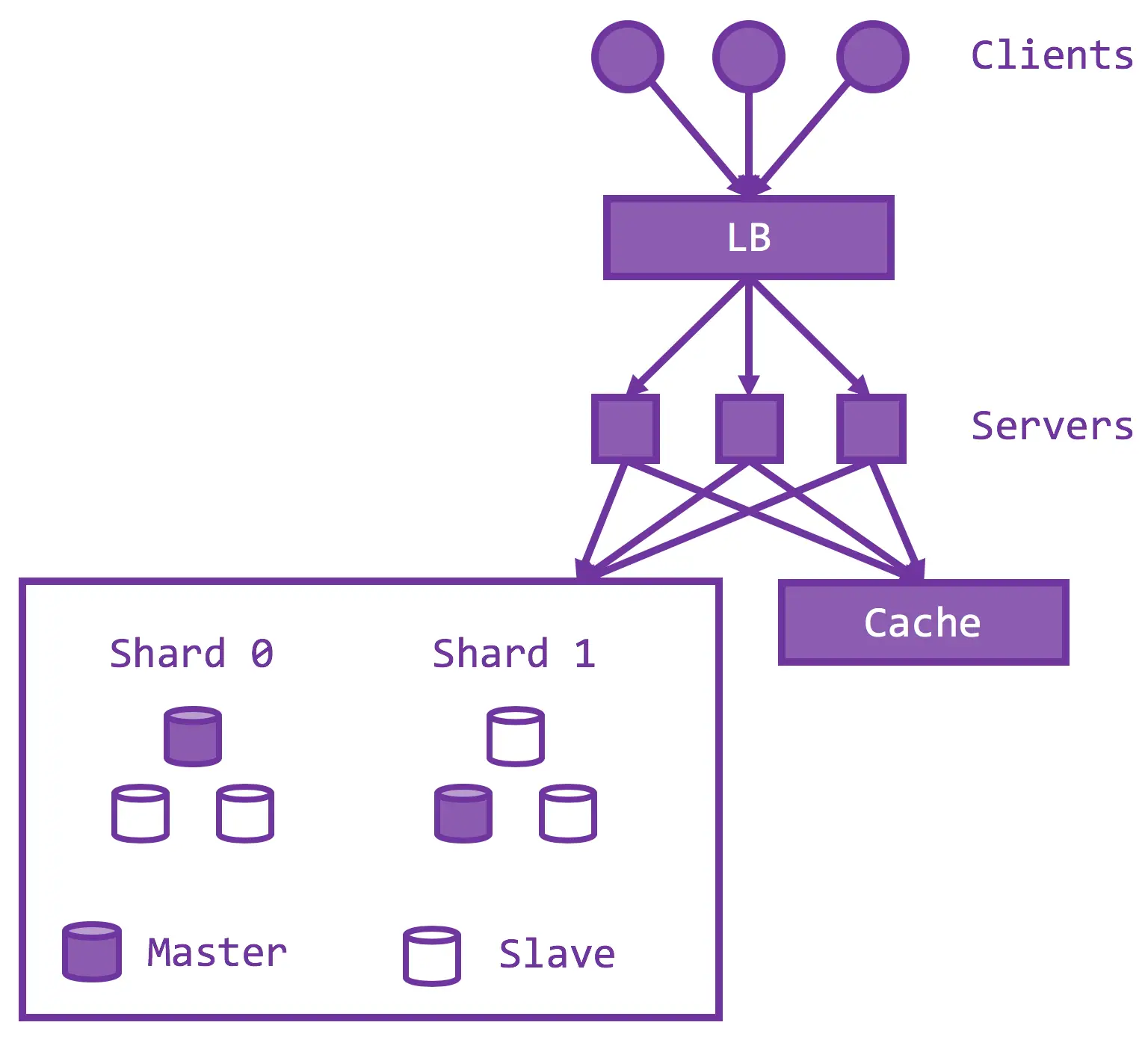

In most big systems, we have a distribute environment with multiple data centers. What we need is a load balancer.

The load balancer is managing requests across multiple multiple servers and multiple data centers. We have multiple implementations of the rate limiter as every data center has their own implementation of this middleware.

What remains constant or needs to scale horizontally is your cache. You need one common cache across a distributed network.

different caches,

You could look at different configurations where you can have one read cache and one write cache.How do you sync between them?

Or have one common read/write cache which is shared across all the data centers.

Q2 How Do You Design A Traffic Control System

This system design interview question has been popular for a while now. While answering this question, your ideal solution should:

- Consider all phase transitions

- Be clear on the conditions in which a certain transition will take place

- Consider pedestrian crossing requirements

- Determine clearance time

- Apportion green light time appropriately

The traffic control systemâs behavior will depend on the state of the traffic control system. Explain all your considerations when stating your solution and reasons for trade-offs made, if any.

Read Also: How Would You Live And Defend Our Values Interview Question

What Is Cap Theorem

CAP theorem says that a distributed system cannot guarantee C, A and P simultaneously. It can at max provide any 2 of the 3 guarantees. Let us understand this with the help of a distributed database system.

- Consistency: This states that the data has to remain consistent after the execution of an operation in the database. For example, post database updation, all queries should retrieve the same result.

- Availability: The databases cannot have downtime and should be available and responsive always.

- Partition Tolerance: The database system should be functioning despite the communication becoming unstable.

The following image represents what databases guarantee what aspects of the CAP Theorem simultaneously. We see that RDBMS databases guarantee consistency and Availability simultaneously. Redis, MongoDB, Hbase databases guarantee Consistency and Partition Tolerance. Cassandra, CouchDB guarantees Availability and Partition Tolerance. Complete Video Tutorial.

Tips To Crack Amazon System Design Interview Questions

As you may have noticed, the Amazon system design interview questions can be tricky. The questions are ambiguous, and you will have to deal with the unstructured nature of the discussion. However, with extra practice, youâll be prepared to handle anything the interviewers throw at you. Hereâs what you should do before and during the interview to make the best impression.

You May Like: What To Know For A Phone Interview

Definition Of The System:

System design is such a vast topic if we dont narrow it down to a specific purpose, it will become complicated to design the system, especially for newbies. In this article, we are designing a rate limiter service that will limit the number of client requests to a server within a time frame.

How Do We Integrate All

- There are two options. And they are pretty standard. We can run Rate Limiter as a part of the service process or as its own process .

- In the first option, Rate Limiter is distributed as a collection of classes, a library that should be integrated with the service code.

- In the second option we have two libraries: the daemon itself and the client, that is responsible for inter-process communication between the service process and the daemon. Client is integrated with the service code.

- What are the pros for the first approach?

- It is faster, as we do not need to do any inter-process call.

- It is also resilient to the inter-process call failures, because there are no such calls. -The second approach is programming language agnostic. It means that Rate Limiter daemon can be written on a programming language that may be different from the language we use for the service implementation. As we do not need to do integration on the code level. Yes, we need to have Rate Limiter client compatible with the service code language. But not the daemon itself.

Read Also: How To Relax Before An Interview

What Is Caching What Are The Various Cache Update Strategies Available In Caching

Caching refers to the process of storing file copies in a temporary storage location called cache which helps in accessing data more quickly thereby reducing site latency. The cache can only store a limited amount of data. Due to this, it is important to determine cache update strategies that are best suited for the business requirements. Following are the various caching strategies available:

- Cache-aside: In this strategy, our application is responsible to write and read data from the storage. Cache interaction with the storage is not direct. Here, the application looks for an entry in the cache, if the result is not found, then the entry is fetched from the database and is added to the cache for further use. Memcached is an example of using this update strategy.

Cache-aside strategy is also known as lazy loading because only the requested entry will be cached thereby avoiding unnecessary caching of the data. Some of the disadvantages of this strategy are:

The main disadvantage of this method is that there are chances of data loss if the cache goes down before the contents of the cache are written into the database.

- Refresh-ahead: Using this strategy, we can configure the cache to refresh the cache entry automatically before its expiration.

This cache strategy results in reduced latency if it can predict accurately what items are needed in future.

Q3 How Would You Design A Search Typeahead

This is another Amazon systems design interview question that frequently features in Amazon systems design interviews. In order to answer this question, you should consider the following aspects –

- Store previous search queries in the database

- Keep the data fresh

- Find the appropriate matches to the entered string

- Handle the queries per second – to be automatically handled by the system

- DIsplay the best matches from strings contained in the database

Also Check: Product Management Interview Case Study

How Do You Answer System Design Interview Questions

- Ask questions to the interviewer for clarification: Since the questions are purposefully vague, it is advised to ask relevant questions to the interviewer to ensure that both you and the interviewer are on the same page. Asking questions also shows that you care about the customer requirements.

- Gather the requirements: List all the features that are required, what are the common problems and system performance parameters that are expected by the system to handle. This step helps the interviewer to see how well you plan, expect problems and come up with solutions to each of them. Every choice matters while designing a system. For every choice, at least one pros and cons of the system needs to be listed.

- Come up with a design: Come up with a high-level design and low-level design solutions for each of the requirements decided. Discuss the pros and cons of the design. Also, discuss how they are beneficial to the business.

The primary objective of system design interviews is to evaluate how well a developer can plan, prioritize, evaluate various options to choose the best possible solution for a given problem.

Things To Do During The Interview

To ace the Amazon system design interview, you must keep four key things in mind:

- What kind of books? Is it going to be e-books or just regular books?

- How many users are we looking at?

- What will be the transactions per second?

You must ask questions related to scaling, performance, API, etc.

Read Also: Sample Rejection Email After Interview

Things To Do Before The Interview

Design A Global Chat Service Like Whatsapp Or A Facebook Messenger

- What are some of the required features?

- Allow users to chat over the internet.

- Provide support for one-on-one and group chats.

- Messages need to be stored for better viewing.

- Messages need to be encrypted for security purposes.

You May Like: Special Events Coordinator Interview Questions

How Is Sharding Different From Partitioning

- Database Sharding – Sharding is a technique for dividing a single dataset among many databases, allowing it to be stored across multiple workstations. Larger datasets can be divided into smaller parts and stored in numerous data nodes, boosting the systems total storage capacity. A sharded database, similarly, can accommodate more requests than a single system by dividing the data over numerous machines. Sharding, also known as horizontal scaling or scale-out, is a type of scaling in which more nodes are added to distribute the load. Horizontal scaling provides near-limitless scalability for handling large amounts of data and high-volume tasks.

- Database Partitioning – Partitioning is the process of separating stored database objects into distinct portions. Large database items are partitioned to improve controllability, performance, and availability. Partitioning can enhance performance when accessing partitioned tables in specific instances. Partitioning can act as a leading column in indexes, reducing index size and increasing the likelihood of finding the most desired indexes in memory. When a large portion of one area is used in the resultset, scanning that region is much faster than accessing data scattered throughout the entire table by index. Adding and deleting sections allows for large-scale data uploading and deletion, which improves performance. Data that are rarely used can be uploaded to more affordable data storage devices.

Examples Of Rate Limiter

Amazon EC2 throttles EC2 API requests for each AWS account on a per-Region basis.It uses the token bucket algorithm to implement API throttling.

For most APIs, Stripe allows up to 100 read operations per second and 100 write operations per second in live mode, and 25 operations per second for each in test mode.

Twitter has various rate limits to manage tweets and search tweets

Read Also: Head Of Product Interview Questions

Algorithms For Rate Limiting

There are numerous rate-limiting algorithms available. The use case, we suppose, is to limit the number of queries sent to a server.

Consider the case where we need to create an API Rate Limiter that limits API calls based on the user . A fixed number of requests can be sent per user in a fixed time range. Lets look at the algorithms that are utilised for API rate limiting:

Token Bucket

Assume a bucket has a few tokens. When a request comes in, a token from the bucket must be taken and processed. The request will be refused if no token is available in the bucket, and the requester will have to try again later. As a result, the token bucket gets refreshed every time unit.

- There is a limit of 3 requests per minute per user.

- User 1 makes the first request at 10:00:00 the available token is 3, therefore the request is approved, and the available token count is reduced to 2.

- At 10:00:10, the users second request, the available token is 2, the request is permitted, and the token count is decremented to 1.

- The third request arrives at 10:00:35, with token count 1 available, therefore the request is granted and decremented.

- The 4th request will arrive at 10:00:45, and the available count is already zero, hence API is denied.

- Now, at 10:01:00, the count will be refreshed with the available token 3 for the third time.

Pros:

- Its simple and straightforward to use.

- Memory-friendly

Cons:

Leaky Bucket

Pros:

- Its simple and straightforward to use.

- Memory-friendly

Cons:

Pros:

Pros:

Problems In Distributed Environment:

In the case of distributed systems, the algorithms we discussed will have some problems. You may check the figure below to get the problem. A user is allowed to request 3 times within a minute. The scenario is that the user already made 2 requests and made two new requests within 2 seconds. And the requests from the user went to two different load balancers, then to two different rate limiter services. As the DB already contains the count value 2, both the Rate Limiter service gets the value below the threshold and permits the requests. So, we are now allowing 4 requests from the same user within a minute, which breaks the limit of the rate limiter. This is the inconsistency problem.

As a solution, we may use sticky session load balancing to ensure that one users request will always go to the same rate limiter service. But the problem here is that its not a fault-tolerant design. Because if the Rate limiter service is down, user requests can not be served by the system.

We can solve this problem using locks. Once a service uses counter data from the database, it will lock it. So, other services can not use it before the counter data is updated. But this procedure also has its own share of problems. It will add an extra latency for the locking. So, we need to decide between availability and performance trade-offs.

You May Like: How To Ask About Remote Work In An Interview