Suggested Answers By Data Scientists For Open

How can you ensure that you don't analyze something that ends up producing meaningless results?

Understanding whether the model chosen is correct or not. Start understanding from the point where you did Univariate or Bivariate analysis, analyzed the distribution of data and correlation of variables, and built the linear model. Linear regression has an inherent requirement that the data and the errors in the data should be normally distributed. If they are not then we cannot use linear regression. This is an inductive approach to find out if the analysis using linear regression will yield meaningless results or not.

Another way is to train and test data sets by sampling them multiple times. Predict on all those datasets to determine whether the resultant models are similar and are performing well.

– Gaganpreet Singh, Data Scientist
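The resampling idea in the answer above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the synthetic data, the single-predictor least-squares fit, and the helper names `fit_line` and `repeated_split_scores` are hypothetical and not part of the original answer.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x with a single predictor."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def repeated_split_scores(xs, ys, n_rounds=20, test_frac=0.3, seed=0):
    """Fit on a random train split and score (MSE) on the held-out rest, n_rounds times."""
    rng = random.Random(seed)
    idx = list(range(len(xs)))
    n_test = int(len(idx) * test_frac)
    results = []
    for _ in range(n_rounds):
        rng.shuffle(idx)
        test, train = idx[:n_test], idx[n_test:]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        mse = statistics.fmean((ys[i] - (a + b * xs[i])) ** 2 for i in test)
        results.append((a, b, mse))
    return results

# Synthetic data (an assumption for this sketch): y = 2 + 3x plus Gaussian noise.
data_rng = random.Random(42)
xs = [i / 10 for i in range(100)]
ys = [2 + 3 * x + data_rng.gauss(0, 1) for x in xs]

results = repeated_split_scores(xs, ys)
slopes = [b for _, b, _ in results]
errors = [mse for _, _, mse in results]
print(f"slope: mean={statistics.fmean(slopes):.2f}, spread={statistics.stdev(slopes):.3f}")
print(f"test MSE: mean={statistics.fmean(errors):.2f}, spread={statistics.stdev(errors):.3f}")
```

If the fitted slopes and the test errors stay close to each other across the rounds, the model is behaving consistently; a large spread suggests the results depend heavily on which rows happened to land in the training set.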

So, there you have over 120 data science interview questions, with answers for most of them. These are some of the more common questions about data, statistics, and data science that can come up in data scientist interviews. We will cover more language-specific questions (Python/R) in subsequent articles, and fulfill our goal of providing a PDF of 120 data science interview questions with answers to our readers.

What Is A Bias

Bias: Bias is the error that occurs in a model when a machine learning algorithm is oversimplified. It can lead to underfitting, because the algorithm makes simplifying assumptions at training time so that the target function is easier to learn.

Some of the popular machine learning algorithms which are low on the bias scale are –

Support Vector Machines, K-Nearest Neighbors, and Decision Trees.

Algorithms that are high on the bias scale –

Logistic Regression and Linear Regression.

Variance: Variance is the error that occurs when a machine learning algorithm is too complex, so the model learns even the noise in the training data set and performs badly on a test data set. It can lead to overfitting and hyper-sensitivity in machine learning models.

While trying to get over bias in our model, we try to increase the complexity of the machine learning algorithm. Though this helps in reducing the bias, after a certain point it causes the model to overfit, resulting in hyper-sensitivity and high variance.

Bias-Variance trade-off: To achieve the best performance, the main target of a supervised machine learning algorithm is to have low variance and bias.
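The trade-off can be made concrete with a small experiment. The sketch below is an illustration under assumptions, not a benchmark: it uses a hand-written k-nearest-neighbors regressor on synthetic sine data, where a very small k gives a flexible model (low bias, high variance) and a very large k gives a rigid one (high bias, low variance). The helper names and the data are hypothetical.

```python
import math
import random
import statistics

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def mse(train, points, k):
    """Mean squared error of the k-NN predictions on the given points."""
    return statistics.fmean((y - knn_predict(train, x, k)) ** 2 for x, y in points)

rng = random.Random(0)

def sample(n):
    """Draw n noisy observations of sin(x) on [0, 2*pi] (synthetic data)."""
    pts = []
    for _ in range(n):
        x = rng.uniform(0, 2 * math.pi)
        pts.append((x, math.sin(x) + rng.gauss(0, 0.3)))
    return pts

train, test = sample(60), sample(200)

# k=1 memorizes the training set, k=60 predicts the global mean everywhere.
for k in (1, 7, 60):
    print(f"k={k:>2}  train MSE={mse(train, train, k):.3f}  test MSE={mse(train, test, k):.3f}")
```

With k=1 the training error is zero because every training point is its own nearest neighbor, which is the signature of a model that has learned the noise. With k=60 the model ignores x entirely and underfits. An intermediate k balances the two.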

The following things are observed regarding some of the popular machine learning algorithms –

Tell Me About A Time You Had To Communicate Something Technical To A Non

The key to answering a question like this is specificity. Explain a technical project you worked on, and describe the exact process you used to ensure all stakeholders understood what you were conveying.

For example, you might say: "In a previous job, I was asked to recommend predictive models the company could use for credit-risk analysis. I created a presentation looking at the three most common types.

Using visualizations, I was able to design approachable models of the science and math behind random forest, k-nearest neighbor, and decision trees. For each visualization, I outlined the benefits and potential risks, and ultimately recommended k-nearest neighbor as the most appropriate predictive model for the analysis."


What Is Star Schema

It is a traditional database schema with a central fact table. Satellite tables map IDs to physical names or descriptions and can be connected to the central fact table using the ID fields. These tables are known as lookup tables and are principally useful in real-time applications, as they save a lot of memory. Sometimes, star schemas involve several layers of summarization to recover information faster.
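A minimal sketch of the idea, using Python's built-in sqlite3 module and an in-memory database. The table and column names (fact_sales, dim_product, dim_store) are hypothetical and chosen only for illustration: the fact table stores IDs and measures, and the lookup tables are joined in through the ID fields.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Lookup (satellite) tables map IDs to descriptive names.
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product_name TEXT);
    CREATE TABLE dim_store   (store_id   INTEGER PRIMARY KEY, store_city   TEXT);

    -- The central fact table stores only IDs and measures.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        store_id   INTEGER REFERENCES dim_store(store_id),
        units_sold INTEGER
    );

    INSERT INTO dim_product VALUES (1, 'Laptop'), (2, 'Phone');
    INSERT INTO dim_store   VALUES (10, 'Austin'), (20, 'Boston');
    INSERT INTO fact_sales  VALUES (1, 10, 3), (2, 10, 5), (1, 20, 2);
""")

# Resolve the IDs in the fact table to readable names via the lookup tables.
rows = conn.execute("""
    SELECT p.product_name, s.store_city, f.units_sold
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_store   AS s ON s.store_id   = f.store_id
    ORDER BY p.product_name, s.store_city
""").fetchall()

for row in rows:
    print(row)
```

Because each descriptive name is stored once in a lookup table rather than on every fact row, the fact table stays compact, which is the memory saving the answer refers to.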

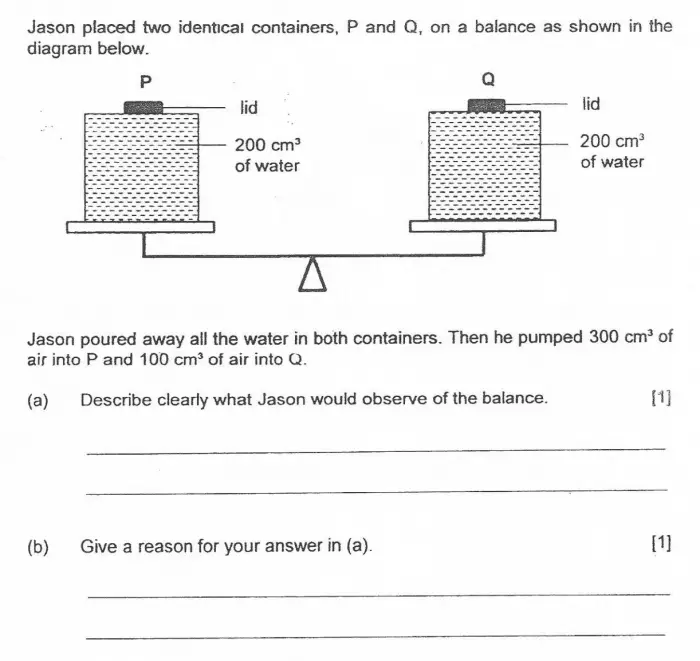

Explain The Steps In Making A Decision Tree

For example, let’s say you want to build a decision tree to decide whether you should accept or decline a job offer. The decision tree for this case is as shown:

It is clear from the decision tree that an offer is accepted if:

- Salary is greater than $50,000

- The commute is less than an hour

- Incentives are offered
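The three conditions above can be written as a small function that walks the tree from the root to a leaf. This is a sketch only: the order of the checks (salary first, then commute, then incentives) and the function name `accept_offer` are assumptions, since the text does not state the node order.

```python
def accept_offer(salary, commute_hours, has_incentives):
    """Walk the tree from the root; each failed check ends in a 'decline' leaf."""
    if salary <= 50_000:        # root node: salary test
        return False
    if commute_hours >= 1:      # decision node: commute test
        return False
    return has_incentives       # last decision node: incentives test

print(accept_offer(60_000, 0.5, True))    # all three conditions hold
print(accept_offer(60_000, 1.5, True))    # commute is too long
print(accept_offer(45_000, 0.5, True))    # salary is too low
```

In practice the split order and thresholds are learned from data, typically by choosing at each node the split that best separates the outcomes.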


What Are The Assumptions Required For A Linear Regression

There are four major assumptions.

1. There is a linear relationship between the dependent variable and the regressors, meaning the model you are creating actually fits the data.

2. The errors or residuals of the data are normally distributed and independent from each other.

3. There is minimal multicollinearity between explanatory variables.

4. Homoscedasticity: the variance around the regression line is the same for all values of the predictor variable.
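The four assumptions can be checked roughly with summary statistics on the residuals. The sketch below is a simplified illustration, not a substitute for formal diagnostics: the data is synthetic, the model has a single predictor, x2 is a hypothetical second candidate predictor used only for the multicollinearity check, and the checks are rules of thumb rather than statistical tests.

```python
import random
import statistics

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5

rng = random.Random(1)
n = 400
x1 = [rng.uniform(0, 10) for _ in range(n)]
x2 = [rng.uniform(0, 10) for _ in range(n)]           # second candidate predictor
y = [1.0 + 2.0 * a + rng.gauss(0, 1) for a in x1]      # linear signal, constant-variance noise

# Fit y = intercept + slope * x1 by least squares.
slope = pearson(x1, y) * statistics.pstdev(y) / statistics.pstdev(x1)
intercept = statistics.fmean(y) - slope * statistics.fmean(x1)
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x1, y)]
pairs = sorted(zip(x1, residuals))                     # residuals ordered by predictor value

# 1. Linearity: mean residual in each third of x should be near zero (a curve shows a pattern).
third = n // 3
tercile_means = [statistics.fmean(r for _, r in pairs[i:i + third]) for i in (0, third, 2 * third)]

# 2. Normality: about 68% of normally distributed residuals fall within one standard deviation.
sd = statistics.pstdev(residuals)
share_within_1sd = sum(abs(r) <= sd for r in residuals) / n

# 3. Multicollinearity: correlation between explanatory variables should be small.
collinearity = pearson(x1, x2)

# 4. Homoscedasticity: residual variance should be similar for low and high x.
low = [r for _, r in pairs[: n // 2]]
high = [r for _, r in pairs[n // 2:]]
variance_ratio = statistics.pvariance(high) / statistics.pvariance(low)

print(tercile_means, share_within_1sd, collinearity, variance_ratio)
```

On real data, residual plots and Q-Q plots give a fuller picture than these single numbers.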

Q90. What In Your Opinion Is The Reason For The Popularity Of Deep Learning In Recent Times

Now although Deep Learning has been around for many years, the major breakthroughs from these techniques came just in recent years. This is because of two main reasons:

- The increase in the amount of data generated through various sources

- The growth in hardware resources required to run these models

GPUs are many times faster than CPUs at the highly parallel computations these models rely on, and they help us build bigger and deeper deep learning models in comparatively less time than was required previously.

Q91. Explain Neural Network Fundamentals

A neural network in data science aims to imitate a human brain neuron, where different neurons combine together and perform a task. It learns the generalizations or patterns from data and uses this knowledge to predict output for new data, without any human intervention.

The simplest neural network can be a perceptron. It contains a single neuron, which performs the 2 operations, a weighted sum of all the inputs, and an activation function.
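The two operations of a perceptron can be sketched directly. The weights below are hand-picked (not learned) so that the neuron behaves like a logical AND, and the bias term and the step activation are assumptions made for this illustration.

```python
def step(z):
    """Activation function: output 1 when the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0

def perceptron(inputs, weights, bias):
    """Operation 1: weighted sum of the inputs. Operation 2: activation function."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(weighted_sum)

# Hand-picked (hypothetical) parameters that make the neuron act as a logical AND.
weights, bias = [1.0, 1.0], -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], weights, bias))
```

Training a perceptron means adjusting the weights and bias from data instead of choosing them by hand.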

More complicated neural networks consist of the following 3 layers: an input layer, one or more hidden layers, and an output layer.


What Is A Decision Tree

Decision trees are a tool used to classify data and determine the possibility of defined outcomes in a system. The base of the tree is known as the root node. The root node branches out into decision nodes based on the various decisions that can be made at each stage. Decision nodes flow into leaf nodes, which represent the consequence of each decision.

Suppose There Is A Dataset Having Variables With Missing Values Of More Than 30% How Will You Deal With Such A Dataset

Depending on the size of the dataset, we can handle this in one of the following ways:

- If the dataset is small, the missing values are substituted with the mean of the remaining data. In pandas, this can be done with mean = df.mean(), where df is the pandas DataFrame holding the dataset and mean() calculates the mean of each column. To substitute the missing values with the calculated mean, we can use df.fillna(mean).

- For larger datasets, the rows with missing values can be removed and the remaining data can be used for data prediction.
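Both options can be sketched with pandas. The tiny DataFrame and its column names are made up for illustration; the calls used (isna, mean, fillna, dropna) are standard DataFrame methods.

```python
import pandas as pd

# Hypothetical data with one missing value in each column.
df = pd.DataFrame({
    "age": [25.0, None, 35.0, 40.0],
    "income": [50.0, 60.0, None, 70.0],
})

# Share of missing values per column, to see how severe the problem is.
missing_share = df.isna().mean()

# Small dataset: replace each missing value with the mean of its column.
filled = df.fillna(df.mean())

# Larger dataset: drop every row that contains a missing value.
dropped = df.dropna()

print(missing_share)
print(filled)
print(dropped)
```

Mean imputation keeps every row but shrinks the variance of the imputed column, while dropping rows keeps the values honest but discards data, so the choice depends on how much data can be spared.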


What Is Normal Distribution

A normal distribution is a continuous probability distribution in which the values of a variable are spread symmetrically around the mean, in the shape of a bell curve. It is widely used in statistics to analyze variables and their relationships.
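One way to get a feel for the bell curve is to compute how much probability lies within one, two, and three standard deviations of the mean. The sketch below uses NormalDist from Python's standard library on a standard normal distribution (mean 0, standard deviation 1), which is an arbitrary choice for illustration.

```python
from statistics import NormalDist

dist = NormalDist(mu=0, sigma=1)

# Probability mass within k standard deviations of the mean.
shares = {k: dist.cdf(k) - dist.cdf(-k) for k in (1, 2, 3)}

for k, share in shares.items():
    print(f"within {k} standard deviation(s): {share:.4f}")
```

The results are roughly 68%, 95%, and 99.7%, and these proportions hold for any normal distribution regardless of its mean and standard deviation.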

What Is Data Science

Data science is a discipline that organizes, analyzes and discovers insights from data to be used in making informed decisions. Companies and industries accumulate large amounts of data, and they use the results in multiple ways to determine the best ways to improve their business processes. For example, organizations can gather customer feedback from multiple platforms and get valuable information on the needs and desires of their customers. They may use this information to revise their marketing strategy or create a new product.

Because the field is so diverse, applicants for data scientist positions need strong technical knowledge in fields such as mathematics or computer science, and also soft skills such as good communication and the ability to work under pressure.


What Are Exploding Gradients And Vanishing Gradients

- Exploding Gradients: Suppose you are training an RNN and see error gradients that grow exponentially as they accumulate, so that very large updates are made to the neural network's weights. These exponentially growing error gradients are called Exploding Gradients.

- Vanishing Gradients: Suppose again that you are training an RNN, and the slope (the gradient) becomes too small. This problem is called the Vanishing Gradient. It causes a major increase in training time, poor performance, and extremely low accuracy.
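Both effects come from multiplying the gradient by a similar factor at every time step. The sketch below is a deliberate simplification: it treats the gradient as a single number and the per-step factor as constant, whereas a real RNN multiplies by matrices. The clip helper shows gradient clipping, a common mitigation for exploding gradients that is not mentioned in the text above and is included here as an assumption.

```python
def gradient_after(steps, factor, start=1.0):
    """Multiply a gradient by the same per-step factor `steps` times, as a
    (very simplified) stand-in for backpropagating through `steps` time steps."""
    grad = start
    for _ in range(steps):
        grad *= factor
    return grad

exploding = gradient_after(50, 1.5)   # factor > 1: grows exponentially
vanishing = gradient_after(50, 0.5)   # factor < 1: shrinks toward zero

def clip(grad, max_value=5.0):
    """Gradient clipping: cap the magnitude of the gradient before the weight update."""
    return max(-max_value, min(max_value, grad))

print(f"exploding: {exploding:.3e}")
print(f"vanishing: {vanishing:.3e}")
print(f"clipped:   {clip(exploding)}")
```

After only 50 steps the first gradient is hundreds of millions of times larger than it started, and the second is too small to move the weights in any meaningful way.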

What Hurdles Or Obstacles Have You Overcome

The interviewer may be asking this question if there are any areas of concern on your resume, or if they need further information. They may also simply be looking to get further clues into your personality as well as how you handle conflict.

A sample answer could be:

"One of the biggest hurdles that I had to overcome at my internship was the fact that I didn't have a lot of experience. I had big shoes to fill in order to prove myself, joining a team of seasoned professionals. This challenge helped me push myself harder, however, and I quickly became a valuable asset to the team and business."


Q121. What Is A Generative Adversarial Network

Suppose there is a wine shop purchasing wine from dealers, which they resell later. But some dealers sell fake wine. In this case, the shop owner should be able to distinguish between fake and authentic wine.

The forger will try different techniques to sell fake wine and make sure specific techniques go past the shop owner's check. The shop owner would probably get some feedback from wine experts that some of the wine is not original. The owner would have to improve how he determines whether a wine is fake or authentic.

The forger's goal is to create wines that are indistinguishable from the authentic ones, while the shop owner intends to tell accurately whether the wine is real or not.

Let us map this example onto a GAN.

There is a noise vector coming into the forger who is generating fake wine.

Here the forger acts as a Generator.

The shop owner acts as a Discriminator.

The Discriminator gets two inputs: one is the fake wine, while the other is the real authentic wine. The shop owner has to figure out whether it is real or fake.

So, there are two primary components of a Generative Adversarial Network: the Generator and the Discriminator.

The generator is a CNN that keeps producing images that are closer and closer in appearance to the real images, while the discriminator tries to determine the difference between real and fake images. The ultimate aim is to make the discriminator learn to identify real and fake images.
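The competition between the two components shows up in their loss functions. The sketch below does not train a GAN; it only evaluates the commonly used binary cross-entropy losses for two hypothetical situations. The probabilities are made-up numbers, and the non-saturating form of the generator loss is an assumption, since the text does not specify a loss.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator rates fakes as real."""
    return -math.log(d_fake)

# d_real / d_fake: the discriminator's probability that a real / fake sample is authentic.
sharp_owner = (0.9, 0.1)    # the shop owner spots the fake wine
fooled_owner = (0.9, 0.8)   # the forger's wine passes the check

for name, (d_real, d_fake) in (("sharp", sharp_owner), ("fooled", fooled_owner)):
    print(f"{name}: D loss={discriminator_loss(d_real, d_fake):.3f}, "
          f"G loss={generator_loss(d_fake):.3f}")
```

When the shop owner is fooled, the discriminator's loss rises and the generator's loss falls, so each update that helps one side hurts the other. That opposition is what drives both networks to improve.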

What Was Your Most Successful/most Challenging Data Analysis Project

What they're really asking: What are your strengths and weaknesses?

When an interviewer asks you this type of question, they're often looking to evaluate your strengths and weaknesses as a data analyst. How do you overcome challenges, and how do you measure the success of a data project?

Getting asked about a project you're proud of is your chance to highlight your skills and strengths. Do this by discussing your role in the project and what made it so successful. As you prepare your answer, take a look at the original job description. See if you can incorporate some of the skills and requirements listed.

If you get asked the negative version of the question, be honest as you focus your answer on lessons learned. Identify what went wrong (maybe your data was incomplete or your sample size was too small) and talk about what you'd do differently in the future to correct the error. We're human, and mistakes are a part of life. What's important here is your ability to learn from them.

An interviewer might also ask:

- Walk me through your portfolio.

- What is your greatest strength as a data analyst? How about your greatest weakness?

- Tell me about a data problem that challenged you.


Why Did You Opt For A Data Science Career

Tell them how you got passionate about data science. You can share a quick story or talk about a specific area that served as your gateway to data science, such as statistical analysis or Python programming.

Then, talk about your background: your college degree, previous companies you've worked at, and data science courses that you've completed.

Finally, relate your interests to the organization's needs, and explain how your expertise in data science can help the company solve its challenges.

When Are Behavioral Interview Questions Asked In Data Science Interviews

When most people think of behavioral questions, they are often thinking of the kinds of questions asked by recruiters, not hiring managers. This includes recruiter questions about being OK with a certain location or commute. It's not a particularly tough question to pass.

But most behavioral questions that actually matter occur during the hiring manager screen and the onsite interview. With data science behavioral interview questions, candidates have to:

- Defend their resumes.

- Build a case they can be a good culture fit.


Situational Question Based On The Resume

If you have a gap on your resume, recruiters will often ask about it. There's no need to panic. Just be honest about why you took a professional break, and explain how you've gotten reacquainted with the industry.

Candidates without an academic background in computer science or math might get asked why they didn't pursue those fields. Answer this question by explaining why you chose an unconventional route.


Difference Between Point Estimates And Confidence Interval

Confidence Interval: A confidence interval gives a range of values that is likely to contain the population parameter, and it also tells us how likely it is that the interval contains that parameter. The confidence coefficient (or confidence level) is denoted by 1 - alpha and gives that probability; alpha is the level of significance.

Point Estimates: A point estimate is a single value used as an estimate of the population parameter. Popular methods for deriving point estimators of population parameters include the Maximum Likelihood estimator and the Method of Moments.
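The difference is easy to see on a sample. The sketch below is an illustration under assumptions: the data is synthetic, the parameter being estimated is the population mean, and the interval uses the normal approximation, which is reasonable for a sample of this size.

```python
import random
from statistics import NormalDist, fmean, stdev

# Synthetic sample (an assumption for this sketch).
rng = random.Random(7)
sample = [rng.gauss(100, 15) for _ in range(200)]

# Point estimate: a single value for the population mean.
point_estimate = fmean(sample)

# Confidence interval: a range around the point estimate at level 1 - alpha.
alpha = 0.05
z = NormalDist().inv_cdf(1 - alpha / 2)          # critical value, about 1.96 for 95%
margin = z * stdev(sample) / len(sample) ** 0.5  # z times the standard error
interval = (point_estimate - margin, point_estimate + margin)

print(f"point estimate: {point_estimate:.2f}")
print(f"{1 - alpha:.0%} confidence interval: ({interval[0]:.2f}, {interval[1]:.2f})")
```

A smaller alpha (a higher confidence level) gives a larger critical value and therefore a wider interval, while a larger sample shrinks the standard error and narrows it.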

To conclude, bias and variance trade off against each other: an increase in bias typically results in a decrease in variance, and an increase in variance typically results in a decrease in bias.


What Data Analytics Software Are You Familiar With

What they're really asking: Do you have basic competency with common tools? How much training will you need?

This is a good time to revisit the job listing to look for any software emphasized in the description. As you answer, explain how you've used that software in the past. Show your familiarity with the tool by using associated terminology.

Mention software solutions you've used for various stages of the data analysis process. You don't need to go into great detail here. What you used and what you used it for should suffice.

An interviewer might also ask:

- What data software have you used in the past?

- What data analytics software are you trained in?


- What is your knowledge of statistics?

- How have you used statistics in your work as a data analyst?

Understanding Of Soft Skills

Once interviewers get an understanding of candidates' technical abilities and project knowledge, it is time for them to analyse the candidates' soft skills. Although soft skills are often not taken seriously by data scientists, in reality they are the skills that help data scientists simplify their work and make it more understandable for the rest of the organisation. In fact, in many cases, soft skills turn out to be more important than expertise in technical skills. Google has done an internal survey across its teams and has noted that the best teams weren't the technical specialists; instead, they were the ones that brought strong soft skills to the collaborative process.

Soft skills are critical for data scientists to communicate with teammates across the organisation as well as to share data insights with stakeholders. Some of the essential soft skills include communication, curiosity, business acumen, data storytelling, critical thinking, adaptability, and product understanding. Enhancing soft skills also helps data scientists understand the ethics of using data and its business value. Analysing these factors helps interviewers understand the vision, imagination, and creativity of candidates.
