What Are The Differences Between Azure Table Storage And Azure SQL Service

The differences between Azure Table Storage and Azure SQL Service are as follows:

| Table Storage Service | Azure SQL Service |
|---|---|
| It is a NoSQL storage service on Azure. | It is a relational storage service on Azure. |
| Data is stored as key-value properties, and each record is called an entity. | Data is stored in rows and columns in an SQL table. |
| No data schema is enforced on storage. | A data schema is enforced for storing data; an error occurs if the schema is violated. |
| The combination of partition key and row key is unique for each entity. | Uniqueness is defined with the help of a primary key or unique key. |
| There are no relationships between tables. | Relationships between tables can be created with the help of foreign keys. |
| It can be used for storing log information or diagnostics data. | It can be used for transaction-based applications. |
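To make the contrast concrete, here is a minimal local sketch that needs no Azure account. `InMemoryTable` is a hypothetical stand-in for a Table Storage table (it is not the Azure SDK), and Python's built-in `sqlite3` module stands in for Azure SQL. On one side, entities with different properties live in the same table, keyed only by PartitionKey and RowKey; on the other, a declared schema, primary keys and a foreign key reject rows that break the rules.

```python
import sqlite3


class InMemoryTable:
    """Hypothetical stand-in for a Table Storage table (NOT the Azure SDK).

    Entities are property bags keyed by (PartitionKey, RowKey); no schema is enforced.
    """

    def __init__(self):
        self._entities = {}

    def insert(self, entity: dict) -> None:
        key = (entity["PartitionKey"], entity["RowKey"])
        if key in self._entities:  # the key pair must be unique per entity
            raise KeyError(f"entity {key} already exists")
        self._entities[key] = dict(entity)

    def get(self, partition_key: str, row_key: str) -> dict:
        return self._entities[(partition_key, row_key)]


logs = InMemoryTable()
# Two entities in the same table with different sets of properties.
logs.insert({"PartitionKey": "web01", "RowKey": "0001", "Level": "INFO", "Message": "started"})
logs.insert({"PartitionKey": "web01", "RowKey": "0002", "Level": "ERROR", "StatusCode": 500})

# Relational side, with SQLite as a local stand-in for Azure SQL:
# columns, primary keys and foreign keys are declared up front.
db = sqlite3.connect(":memory:")
db.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when this is on
db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
db.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER NOT NULL REFERENCES customers(id), amount REAL)"
)
db.execute("INSERT INTO customers (id, name) VALUES (1, 'Contoso')")
db.execute("INSERT INTO orders (id, customer_id, amount) VALUES (10, 1, 99.5)")


def violates_schema(sql: str) -> bool:
    """Return True if the relational schema rejects the statement."""
    try:
        db.execute(sql)
        return False
    except (sqlite3.OperationalError, sqlite3.IntegrityError):
        return True


print(violates_schema("INSERT INTO orders (id, customer_id, StatusCode) VALUES (11, 1, 500)"))  # True: unknown column
print(violates_schema("INSERT INTO orders (id, customer_id) VALUES (12, 42)"))  # True: no such customer
print(violates_schema("INSERT INTO customers (id, name) VALUES (1, 'Dup')"))  # True: duplicate primary key
```

The extra `StatusCode` property is accepted on the Table Storage side but rejected as an unknown column on the relational side, which is the schema-enforcement difference from the table above.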

What Are The Instance Types Offered By Azure

Azure offers several types of virtual machine instances, each suited to different needs:

- General purpose: Balanced CPU-to-memory ratio. It suits low-to-medium traffic web servers and small-to-medium databases, and it is the best choice for testing and development. Largest instance size: Standard_D64_v3 with 256 GB memory and 1600 GB SSD temp storage

- Compute Optimized: High CPU-to-memory ratio. It is best for medium traffic web servers, application servers, and network appliances. Largest instance size: Standard_F72s_v2 with 144 GB memory and 576 GB SSD temp storage

- Memory-Optimized: High memory-to-CPU ratio. It works best for relational database servers, in-memory analytics, and medium to large caches. Largest instance size: Standard_M128m with 3892 GB memory and 14,336 GB SSD temp storage

- Storage-Optimized: High disk I/O and throughput. It is best suited for Big Data, NoSQL, and SQL databases. Largest instance size: Standard_L32s with 256 GB memory and 5630 GB SSD temp storage

- GPU: These virtual machines are specialized for heavy graphics rendering and video editing, and they help with model training and inferencing for deep learning. Largest instance size: Standard_ND24rs with 448 GB memory, 2948 GB SSD temp storage, and 4 GPUs with 96 GB of GPU memory

- High-Performance Compute: Azure's fastest and most powerful CPU virtual machines, with optional high-throughput network interfaces. Largest instance size: Standard_H16mr with 224 GB memory and 2000 GB temp storage
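The families above are described by their CPU-to-memory ratio, and a quick calculation makes that ratio visible. This is a sketch under one stated assumption: the vCPU counts below come from Azure's published size tables for these sizes (they are not in the list above), so verify them against current documentation. Memory figures are the ones listed; for the high-performance compute row, the 224 GB / 2000 GB figures belong to Standard_H16mr, so that name is used here.

```python
# (vCPUs, memory in GB) per size. vCPU counts are an assumption taken from
# Azure's size tables; memory figures come from the instance list.
SIZES = {
    "General purpose (Standard_D64_v3)": (64, 256),
    "Compute optimized (Standard_F72s_v2)": (72, 144),
    "Memory optimized (Standard_M128m)": (128, 3892),
    "Storage optimized (Standard_L32s)": (32, 256),
    "GPU (Standard_ND24rs)": (24, 448),
    "High-performance compute (Standard_H16mr)": (16, 224),
}


def memory_per_vcpu(sizes: dict) -> dict:
    """Return GB of memory per vCPU for each size, rounded to one decimal place."""
    return {name: round(mem / vcpus, 1) for name, (vcpus, mem) in sizes.items()}


# Print from lowest to highest memory-per-vCPU ratio.
for name, ratio in sorted(memory_per_vcpu(SIZES).items(), key=lambda kv: kv[1]):
    print(f"{ratio:>5} GB/vCPU  {name}")
```

Compute optimized comes out lowest (2.0 GB per vCPU), general purpose sits in the middle (4.0), and memory optimized is highest (30.4), matching the "high CPU to memory" and "high memory to CPU" descriptions.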

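Mention The Three Types Of Triggers That Azure Data Factory Supports

- Schedule trigger: runs a pipeline on a wall-clock schedule.

- Tumbling window trigger: runs a pipeline for a series of fixed-size, non-overlapping, contiguous time intervals.

- Event-based trigger: runs a pipeline in response to an event, such as a file arriving in or being deleted from Azure Blob Storage.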
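Of the trigger types Data Factory supports, the tumbling window trigger is the most algorithmic: it fires for a series of fixed-size, non-overlapping, contiguous time intervals starting from a start time. The following is a minimal sketch of how such windows are laid out; `tumbling_windows` is a hypothetical helper, not part of any Azure API. In this sketch a trailing partial window is dropped, on the assumption that a window only runs once it has fully elapsed.

```python
from datetime import datetime, timedelta


def tumbling_windows(start: datetime, end: datetime,
                     interval: timedelta) -> list[tuple[datetime, datetime]]:
    """Split [start, end) into fixed-size, non-overlapping, contiguous windows.

    A trailing partial window is dropped because it has not fully elapsed yet.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    windows = []
    window_start = start
    while window_start + interval <= end:
        windows.append((window_start, window_start + interval))
        window_start += interval  # the next window begins where this one ends
    return windows


for ws, we in tumbling_windows(datetime(2024, 1, 1, 0, 0),
                               datetime(2024, 1, 1, 3, 30),
                               timedelta(hours=1)):
    print(ws.isoformat(), "->", we.isoformat())
```

With a one-hour interval, the span from 00:00 to 03:30 yields three windows (00:00 to 01:00, 01:00 to 02:00, 02:00 to 03:00); the half-hour remainder is not a complete window yet.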


Mention The Steps For Creating An ETL Process In Azure Data Factory

Suppose we want to retrieve data from an Azure SQL Server database, process whatever needs processing, and save the result in Azure Data Lake Store. The steps for creating this ETL process are:

- First, create a linked service for the source data store, i.e. the SQL Server database

- Assume that we are working with a cars dataset

- Next, create a linked service for the destination data store, i.e. Azure Data Lake Store

- After that, create a dataset for saving the data

- Set up the pipeline and add a copy activity

- Finally, schedule the pipeline by adding a trigger
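The steps above can be sketched as the JSON definitions Data Factory stores for each resource, built here as Python dictionaries. This is a sketch, not a deployable template: the property names follow ADF's JSON format as we understand it and should be checked against the ADF reference, every resource name (`SqlServerLinkedService`, `CarsSqlTable`, `CopyCarsPipeline` and so on) is hypothetical, connection details are deliberately left as `<elided>`, and `unresolved_references` is our own consistency check, not an ADF feature. It shows how each step references the previous one: datasets point at linked services, the copy activity points at datasets, and the trigger points at the pipeline.

```python
import json

# Step 1: linked service for the source (Azure SQL database). Connection details elided.
sql_linked_service = {
    "name": "SqlServerLinkedService",
    "properties": {"type": "AzureSqlDatabase",
                   "typeProperties": {"connectionString": "<elided>"}},
}
# Step 2: dataset pointing at the (hypothetical) cars table in that database.
cars_dataset = {
    "name": "CarsSqlTable",
    "properties": {
        "type": "AzureSqlTable",
        "linkedServiceName": {"referenceName": "SqlServerLinkedService",
                              "type": "LinkedServiceReference"},
        "typeProperties": {"tableName": "dbo.Cars"},
    },
}
# Step 3: linked service for the destination (Azure Data Lake Store).
adls_linked_service = {
    "name": "DataLakeLinkedService",
    "properties": {"type": "AzureDataLakeStore",
                   "typeProperties": {"dataLakeStoreUri": "<elided>"}},
}
# Step 4: dataset describing where the copied data is saved.
cars_output = {
    "name": "CarsLakeFile",
    "properties": {
        "type": "AzureDataLakeStoreFile",
        "linkedServiceName": {"referenceName": "DataLakeLinkedService",
                              "type": "LinkedServiceReference"},
        "typeProperties": {"folderPath": "cars/output"},
    },
}
# Step 5: pipeline with a single copy activity from the SQL dataset to the lake dataset.
pipeline = {
    "name": "CopyCarsPipeline",
    "properties": {"activities": [{
        "name": "CopyCars",
        "type": "Copy",
        "inputs": [{"referenceName": "CarsSqlTable", "type": "DatasetReference"}],
        "outputs": [{"referenceName": "CarsLakeFile", "type": "DatasetReference"}],
        "typeProperties": {"source": {"type": "AzureSqlSource"},
                           "sink": {"type": "AzureDataLakeStoreSink"}},
    }]},
}
# Step 6: schedule trigger that runs the pipeline once a day.
trigger = {
    "name": "DailyTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {"recurrence": {"frequency": "Day", "interval": 1,
                                          "startTime": "2024-01-01T02:00:00Z"}},
        "pipelines": [{"pipelineReference": {"referenceName": "CopyCarsPipeline",
                                             "type": "PipelineReference"}}],
    },
}


def unresolved_references(resources: list) -> list:
    """Return referenceName values that match no defined resource name (our own check)."""
    names = {r["name"] for r in resources}
    missing = []

    def walk(node):
        if isinstance(node, dict):
            if "referenceName" in node and node["referenceName"] not in names:
                missing.append(node["referenceName"])
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    for resource in resources:
        walk(resource)
    return missing


resources = [sql_linked_service, cars_dataset, adls_linked_service,
             cars_output, pipeline, trigger]
print(unresolved_references(resources))  # []
print(json.dumps(trigger, indent=2))
```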


Is It Possible To Have Nested Looping In Azure Data Factory

Data Factory does not directly support nesting one looping activity inside another. However, we can use a ForEach or Until loop activity that contains an Execute Pipeline activity, and the child pipeline it calls can contain its own loop activity. This way, the outer loop indirectly calls the inner loop, and we achieve nested looping.
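The workaround can be simulated in plain Python to show the control flow. This is a hypothetical sketch, not ADF code: each function stands for a pipeline, `execute_pipeline` stands for the Execute Pipeline activity, and the `folder`/`files` parameter names are invented for illustration. The outer ForEach contains only the Execute Pipeline call; the inner loop lives in the child pipeline.

```python
def child_pipeline(params: dict) -> list[str]:
    """Inner pipeline: its own ForEach loops over the files of one folder."""
    processed = []
    for file_name in params["files"]:
        processed.append(f"{params['folder']}/{file_name}")
    return processed


def execute_pipeline(pipeline, params: dict) -> list[str]:
    """Stand-in for the Execute Pipeline activity: runs another pipeline with parameters."""
    return pipeline(params)


def parent_pipeline(folders: dict[str, list[str]]) -> list[str]:
    """Outer pipeline: a ForEach over folders whose only inner activity is Execute Pipeline."""
    processed = []
    for folder, files in folders.items():
        processed.extend(execute_pipeline(child_pipeline, {"folder": folder, "files": files}))
    return processed


print(parent_pipeline({"sales": ["jan.csv", "feb.csv"], "hr": ["staff.csv"]}))
# ['sales/jan.csv', 'sales/feb.csv', 'hr/staff.csv']
```

Passing the outer loop's current item as a parameter to the child pipeline is what lets the inner loop work on the right subset of data.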


What Are The Key Differences Between Azure Data Lake And Azure Data Warehouse

Azure Data Lake and Azure Data Warehouse are both widely used to store big data, but they are not synonymous, and we can't use them interchangeably. Azure Data Lake is a huge pool of raw data. Azure Data Warehouse, on the other hand, is a repository of structured, filtered data that has already been processed for a specific purpose.

Following is a list of key differences between Azure Data Lake and Azure Data Warehouse:

| Azure Data Lake | Azure Data Warehouse |
|---|---|
| It has a raw data structure. Raw data is data that has not yet been processed for a specific purpose. | It has a processed data structure. Processed data is data that a larger audience can easily understand. |
| It is primarily used to store raw, unprocessed data. | It is primarily used to store only processed data, saving storage space by not maintaining data that may never be used. |
| It is complementary to Azure Data Warehouse: data in the data lake can also be stored in the data warehouse, provided certain rules are followed. | It is a traditional way of storing data and one of the most widely used storage options for big data. |
| The purpose of the stored data is not yet determined. | The purpose of the stored data is determined, because the data is currently in use. |
| It uses one language to process data of any format. | It uses SQL, because the data is already processed. |
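A minimal sketch of the raw-versus-processed distinction, using hypothetical car-sensor records and Python's built-in `sqlite3` as a local stand-in for the warehouse (no Azure service is involved). The "lake" keeps raw JSON lines exactly as they arrived, with mixed units and extra fields; the "warehouse" holds only cleaned rows in a fixed schema that SQL can query directly.

```python
import json
import sqlite3

# "Lake": raw events kept exactly as received; shapes and units vary.
raw_events = [
    '{"car": "A1", "speed_kmh": 88, "ts": "2024-01-01T10:00"}',
    '{"car": "B2", "speed_mph": 40, "ts": "2024-01-01T10:01", "note": "sensor v2"}',
    '{"car": "A1", "speed_kmh": 92, "ts": "2024-01-01T10:02"}',
]


def to_kmh(record: dict) -> float:
    """Normalize speed to km/h (1 mile = 1.609344 km)."""
    if "speed_kmh" in record:
        return float(record["speed_kmh"])
    return round(record["speed_mph"] * 1.609344, 1)


# "Warehouse": a fixed schema holding only the processed fields we need.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE speeds (car TEXT NOT NULL, ts TEXT NOT NULL, speed_kmh REAL NOT NULL)"
)
for line in raw_events:
    rec = json.loads(line)
    warehouse.execute("INSERT INTO speeds VALUES (?, ?, ?)",
                      (rec["car"], rec["ts"], to_kmh(rec)))

averages = warehouse.execute(
    "SELECT car, AVG(speed_kmh) FROM speeds GROUP BY car ORDER BY car"
).fetchall()
print(averages)  # [('A1', 90.0), ('B2', 64.4)]
```

The raw lines stay untouched in the lake (including the `note` field nobody has decided how to use yet), while the warehouse answers a specific question with plain SQL.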


What Are The Password Requirements When Creating A VM

Explanation: Passwords must be 12 to 123 characters long and meet 3 of the following 4 complexity requirements:

- Have lowercase characters

- Have uppercase characters

- Have a digit

- Have a special character
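The length and "3 of 4" rules can be checked with a short function. This is a sketch under assumptions: `meets_vm_password_rules` is a hypothetical helper, "special character" is taken to mean any character that is not an ASCII letter or digit, and Azure's separate list of disallowed passwords is not checked here.

```python
import re

# Character classes as we read the rule; "special" = anything that is not an ASCII letter or digit.
CLASSES = {
    "lowercase": re.compile(r"[a-z]"),
    "uppercase": re.compile(r"[A-Z]"),
    "digit": re.compile(r"[0-9]"),
    "special": re.compile(r"[^a-zA-Z0-9]"),
}


def meets_vm_password_rules(password: str) -> bool:
    """Check the 12-to-123 length rule and the 3-of-4 complexity rule (not the banned list)."""
    if not 12 <= len(password) <= 123:
        return False
    satisfied = sum(1 for pattern in CLASSES.values() if pattern.search(password))
    return satisfied >= 3


for candidate in ["Short1!", "alllowercaseletters", "ABCDEFGHIJK1", "correct-horse-42"]:
    print(candidate, meets_vm_password_rules(candidate))
```

Here "Short1!" fails on length, "alllowercaseletters" and "ABCDEFGHIJK1" satisfy only one and two classes respectively, and "correct-horse-42" passes with lowercase, special and digit characters.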

The following passwords are not allowed:


List The Steps Through Which You Can Access Data Using The 80 Types Of Datasets In Azure Data Factory

In its current version, the Mapping Data Flow (MDF) feature natively supports SQL Data Warehouse, SQL Database, and Parquet and text files stored in Azure Blob Storage and Data Lake Storage Gen2 as both sources and sinks. To access data from any other connector, first use the Copy Activity to stage the data in one of these supported stores. Then, once staging is complete, run a Data Flow activity to transform the staged data efficiently.


How Does Azure Data Factory Work

Azure Data Factory processes data through pipelines. It works in three stages:

Connect and Collect:

Azure Data Factory connects to various sources, such as SaaS services, FTP servers, and file-sharing servers, located both on-premises and in cloud storage. Once it secures a connection, it starts collecting data from that source, gathering the information from all available sources into one centralized location.

Transform and Enrich:

Once the data has been collected, it is transformed. The transformation can be done using various services such as HDInsight Hadoop, Spark, Data Lake Analytics, and Machine Learning.

Publish:

The transformed data is then published to on-premises storage or cloud storage, for example a SQL database. Because the data is in centralized storage, BI and analytics teams can access and process it.


Data Migration Activities With Data Factory

Data migration takes one of two forms: from one data store to another, or from on-premises storage to cloud storage (or vice versa).

Azure Data Migration Copy Activities:

Copy activities are responsible for copying data from the source data store to the sink data store.

The data stores that Azure supports include Azure Blob Storage, Azure Cosmos DB, Azure Data Lake Store, Oracle, Cassandra, and several others.

Azure Data Migration Transformation Activities:


What Is Azure Service Fabric

Explanation: Azure Service Fabric is a distributed systems platform that makes it easy to package, deploy, and manage scalable and reliable microservices. Service Fabric also addresses the significant challenges of developing and managing cloud applications, so developers and administrators can avoid complex infrastructure problems and focus on implementing mission-critical, demanding workloads that are scalable, reliable, and manageable. Service Fabric represents the next-generation middleware platform for building and managing these enterprise-class, tier-1, cloud-scale applications.


What Is The Meaning Of Application Partitions

Explanation: Application partitions are part of the Active Directory system: they are directory partitions that are replicated to domain controllers. Domain controllers that participate in a directory partition hold a replica of that partition. The key property of application partitions is that you can replicate them to specific domain controllers in a forest, which can reduce replication traffic. Whereas a domain directory partition replicates all its data to every domain controller in its domain, an application partition replicates only to the domain controllers you choose. This allows application partitions to provide redundancy and higher availability where they are needed.

What Are The Rich Cross-Platform SDKs For Advanced Users In Azure Data Factory

Azure Data Factory V2 provides a rich set of SDKs that we can use to author, manage, and monitor pipelines from our preferred IDE. Some popular cross-platform SDKs for advanced users in Azure Data Factory are as follows:

- Python SDK

- C# (.NET) SDK

- PowerShell cmdlets

- Users can also use the documented REST APIs to interface with Azure Data Factory V2.


Tell Me About A Problem You Solved At Your Prior Job

This is something to spend time on when you're preparing responses to possible Azure interview questions. As a cloud architect, you need to show that you are a good listener and problem solver, as well as a good communicator. Yes, you need to know the technology, but cloud computing rarely involves sitting isolated in a cubicle: you'll have stakeholders to listen to, problems to solve, and options to present. When you answer questions like these, try to convey that you are a team player and a good communicator, in addition to being a strong Azure architect.

What Are The Roles And Responsibilities Of An AWS Certified Solutions Architect

The roles and responsibilities of an AWS Certified Solutions Architect Associate include the following:

- Design services and/or applications within an organization

- Explain large and complex business problems to management in simple terms

- Integrate systems and information to match business requirements

- Take care of enterprise and architectural concerns, along with programming, testing, etc.

- Scale up processes and build infrastructure for sustainable use

- Determine the risk level associated with third-party platforms or frameworks

Also Check: How To Conduct An Employment Interview

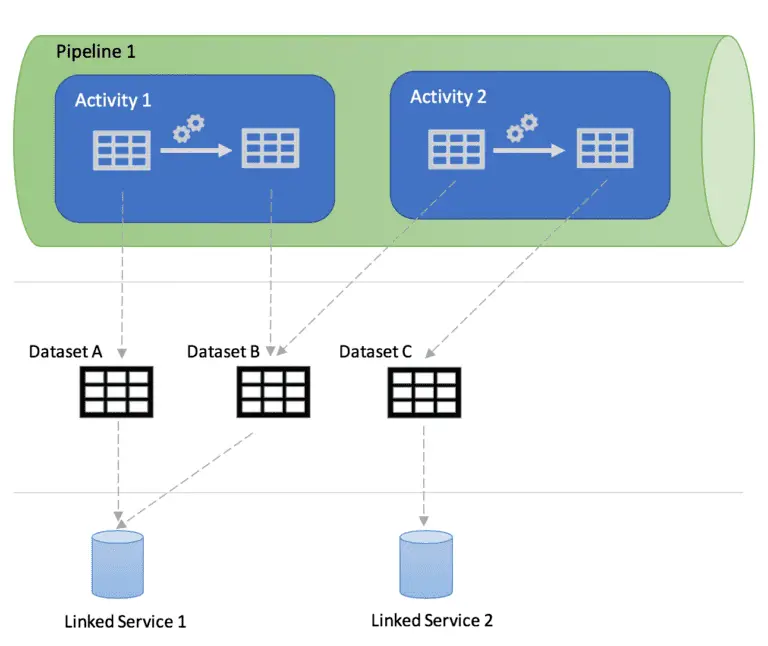

What Is The Key Difference Between The Dataset And Linked Service In Azure Data Factory

A dataset is a reference to the data in the data store that the linked service describes. For example, when we read data from a SQL Server instance, the dataset indicates the name of the table that contains the target data, or the query that returns data from different tables.

A linked service describes the connection information used to connect to a data store, much like a connection string. For example, when we read data from a SQL Server instance, the linked service contains the name of the SQL Server instance and the credentials used to connect to that instance.

What Is The Use Of The ADF Service

ADF is primarily used to orchestrate data copying between various relational and non-relational data sources hosted locally in data centers or in the cloud. Moreover, you can use the ADF service to transform the ingested data to fulfill business requirements. In most Big Data solutions, the ADF service is used as an ETL or ELT tool for data ingestion.


Some General Interview Questions For Azure Data Factory

1. How much will you rate yourself in Azure Data Factory?

When you attend an interview, the interviewer may ask you to rate yourself in a specific technology such as Azure Data Factory. Your answer depends on your knowledge and work experience with Azure Data Factory.

2. What challenges did you face while working on Azure Data Factory?

This question is specific to your technology and depends entirely on your past work experience. Simply explain the challenges you faced related to Azure Data Factory in your project.

3. What was your role in the last Project related to Azure Data Factory?

Your answer depends on the role and responsibilities assigned to you and the functionality you implemented using Azure Data Factory in your project. This question is generally asked in every interview.

4. How much experience do you have in Azure Data Factory?

Here you can tell about your overall work experience on Azure Data Factory.

5. Have you done any Azure Data Factory Certification or Training?

It depends on the candidate whether they have done any Azure Data Factory training or certification. Certifications and training are not essential, but they are good to have.

What, If Any, Is The Limit On The Number Of Integration Runtimes

There is no limit on the number of integration runtime instances you can have in Azure Data Factory. However, there is a limit on the number of VM cores that the integration runtime can use per subscription for SSIS package execution. Anyone pursuing a Microsoft Azure certification at any level should know and understand each of these terms.


What Are The Advantages Of Scaling In Azure

Azure performs scaling through a feature known as autoscaling, which helps handle changing demand in Cloud Services, Mobile Services, Virtual Machines, and Websites. Below are a few of its advantages:

- Maximizes application performance

- Scales up or down based on demand

- Schedules scaling for particular time periods

- Is highly cost-effective
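The first three advantages can be illustrated with a simplified decision function. This is a hypothetical model, not Azure's autoscale engine or its settings schema: `ScaleProfile`, the CPU thresholds, the instance bounds, and the 08:00 to 17:59 business-hours schedule are all invented for illustration. It shows demand-based scale-out and scale-in, plus scheduled profiles that change the allowed instance range by time of day.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScaleProfile:
    """Hypothetical simplified autoscale profile: instance bounds plus CPU thresholds."""
    minimum: int
    maximum: int
    scale_out_above: float  # average CPU % that triggers adding one instance
    scale_in_below: float   # average CPU % that triggers removing one instance


BUSINESS_HOURS = ScaleProfile(minimum=3, maximum=10, scale_out_above=70.0, scale_in_below=30.0)
OFF_HOURS = ScaleProfile(minimum=1, maximum=4, scale_out_above=70.0, scale_in_below=30.0)


def profile_for(hour: int) -> ScaleProfile:
    """Scheduled scaling: use the business-hours profile from 08:00 to 17:59."""
    return BUSINESS_HOURS if 8 <= hour < 18 else OFF_HOURS


def next_instance_count(current: int, avg_cpu: float, hour: int) -> int:
    """Return the instance count after one evaluation of the demand-based rules."""
    profile = profile_for(hour)
    if avg_cpu > profile.scale_out_above:
        target = current + 1
    elif avg_cpu < profile.scale_in_below:
        target = current - 1
    else:
        target = current
    # Always stay within the active profile's bounds.
    return max(profile.minimum, min(profile.maximum, target))


print(next_instance_count(current=2, avg_cpu=85.0, hour=9))    # 3
print(next_instance_count(current=3, avg_cpu=20.0, hour=23))   # 2
print(next_instance_count(current=10, avg_cpu=95.0, hour=12))  # 10
```

Clamping to the profile's maximum is what keeps costs bounded, while the minimum guarantees capacity during the scheduled busy period.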


Which One Amongst Microsoft Azure ML Studio And GCP Cloud AutoML Is Better

When we compare the two in terms of services, Azure ML Studio wins, since it offers Classification, Regression, Anomaly Detection, Clustering, Recommendation, and Ranking features.

GCP Cloud AutoML, on the other hand, offers Clustering, Regression, and Recommendation features. Moreover, Azure ML Studio has a drag-and-drop interface that makes the process easier to carry out.


What Is The Difference Between Azure Hdinsight And Azure Data Lake Analytics

| Azure HDInsight | Azure Data Lake Analytics |
|---|---|
| It is a Platform as a Service (PaaS). | It is a Software as a Service (SaaS). |
| Processing data requires configuring a cluster with predefined nodes; the data can then be processed using languages such as Pig or Hive. | You only submit the queries written for data processing; Data Lake Analytics then creates compute nodes to process the dataset. |
| Users can easily configure HDInsight clusters as they see fit and can use Spark and Kafka without restrictions. | It offers less flexibility in configuration and customization, but Azure manages it automatically for its users. |

How Are Azure Marketplace Subscriptions Priced

Explanation:

Pricing will vary based on product types. ISV software charges and Azure infrastructure costs are charged separately through your Azure subscription. Pricing models include:

Bring-Your-Own-License (BYOL): You obtain the right to access or use the offering outside of the Azure Marketplace, and you are not charged Azure Marketplace fees for using the offering in the Azure Marketplace.

Free: Free SKU. Customers are not charged Azure Marketplace fees for use of the offering.

Free Software Trial: Full-featured version of the offer that is promotionally free for a limited period of time. You will not be charged Azure Marketplace fees for use of the offering during a trial period. Upon expiration of the trial period, customers will automatically be charged based on standard rates for use of the offering.

Usage-Based: You are charged or billed based on the extent of your use of the offering. For Virtual Machines Images, you are charged an hourly Azure Marketplace fee. For Data Services, Developer services, and APIs, you are charged per unit of measurement as defined by the offering.

Monthly Fee: You are charged a fixed monthly fee for a subscription to the offering. The monthly fee is not prorated for mid-month cancellations or unused services.
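The pricing models above can be compared with a small fee calculator for one month. This is a sketch under stated assumptions: `marketplace_fee`, the model keys and all rates are hypothetical, it covers only the Azure Marketplace fee (Azure infrastructure costs are billed separately), and it assumes the "standard rate" after a free trial is an hourly, usage-based rate.

```python
def marketplace_fee(model: str, hours_used: float, hourly_rate: float = 0.0,
                    monthly_fee: float = 0.0, trial_hours_left: float = 0.0) -> float:
    """Return one month's Azure Marketplace fee under a simplified pricing model.

    Infrastructure costs are billed separately and are not included.
    """
    if model in ("byol", "free"):
        return 0.0  # no Marketplace fee for BYOL or free SKUs
    if model == "trial":
        # Free until the trial ends; assumed hourly standard rate afterwards.
        return max(0.0, hours_used - trial_hours_left) * hourly_rate
    if model == "usage":
        return hours_used * hourly_rate  # billed by extent of use
    if model == "monthly":
        return monthly_fee  # fixed and not prorated, even for partial use
    raise ValueError(f"unknown pricing model: {model}")


print(marketplace_fee("usage", hours_used=10, hourly_rate=0.5))                          # 5.0
print(marketplace_fee("trial", hours_used=100, hourly_rate=0.25, trial_hours_left=60))   # 10.0
print(marketplace_fee("monthly", hours_used=5, monthly_fee=30.0))                        # 30.0
```

The monthly case shows the non-prorated rule: using the offering for only 5 hours still costs the full monthly fee.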
