What Is Datamart Explain Its Types

A data mart is a simple type of data warehouse that focuses on a particular subject or business line. Teams can access information and gain insights faster with a data mart since they don’t have to spend time searching within a more complex data warehouse or manually collecting data from many sources.

There are three types of data marts:

Dependent – Draws its data from a single central data warehouse, so every data mart built on that warehouse shares the same consistent source.

Independent – Has no dependence on a central or enterprise data warehouse; its data is loaded separately and used for independent analysis.

Hybrid – Combines data from an existing data warehouse with inputs from several other sources, which aids ad hoc integration.

What Do You Understand By The Time Series Algorithm

The Microsoft Time Series algorithm provides an optimized set of algorithms for forecasting continuous values, such as product sales over time. Other Microsoft algorithms, such as decision trees, require additional columns of new information as input to predict a trend; the time series model does not. It can predict trends based only on the original dataset used to create the model. You can also add new data to the model when making a prediction, and that data is automatically incorporated into the trend analysis.
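To make the idea of forecasting from the original series alone more concrete, here is a minimal sketch. It is not the Microsoft implementation: it swaps in a plain least-squares trend line, and the function names and sales figures are hypothetical.

```python
def fit_linear_trend(values):
    """Fit a least-squares line through the points (0, v0), (1, v1), ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values, steps):
    """Extend the fitted trend line for the next `steps` periods."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + i) for i in range(steps)]


# Hypothetical monthly sales: the history itself is the only input.
monthly_sales = [100, 110, 120, 130]
next_two = forecast(monthly_sales, 2)

# New observations can simply be appended before the next prediction.
updated = forecast(monthly_sales + [140], 1)
```

No extra input columns are involved: the forecast is derived from the past values of the series, and appending new data changes the next prediction.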

What License Keys Exist In The Hana System

- Temporary license key: A temporary license key is installed automatically when the HANA database is set up. It is valid for 90 days after installation, so you should get a permanent license key from the SAP Market Place before that period expires.

- Permanent license key: A permanent license key remains valid until its specified expiration date. It also specifies the amount of memory allotted for the intended HANA installation.


Q1 List The Most Common Errors That Can Occur When Doing Data Modeling

The most common errors that can occur during data modeling are:

- Creating overly broad data models: Once a model grows beyond roughly 200 tables, it becomes increasingly complex, which raises the chances of failure.

- Unnecessary surrogate keys: Surrogate keys are needed only when the natural key can't fulfill its role as the primary key.

- Absence of a purpose: Often the modeler has no clear idea of the business's goal or mission. Without that understanding, it is difficult, though not impossible, to create a model that fits the business.

What Do You Understand By Surrogate Key What Are The Benefits Of Using The Surrogate Key

A surrogate key is a unique key used to identify an entity in the client’s business or an object within the database. It is used when natural keys cannot serve as a unique primary key for a table. In that case, the data modeler or architect assigns a surrogate (or helping) key to the table in the logical data model (LDM), which is why surrogate keys are also known as helping keys. A surrogate key is a substitute for a natural key.

Following are some benefits of using surrogate keys:

- Surrogate keys uniquely identify a record, keep SQL queries simple, and perform well.

- Surrogate keys are typically numeric, which gives excellent performance during data processing and business queries.

- Surrogate keys do not change while the row exists.

- Natural keys can change in the source, for example during migration to a new system, which would make them useless in the data warehouse. Surrogate keys avoid this problem.

- If we use surrogate keys and share them across tables, we can automate the code, making the ETL process simpler.
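The stability benefit listed above can be illustrated with a short sketch using Python's built-in `sqlite3` module. The `customer_dim` table, its columns, and the sample codes are hypothetical names chosen for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customer_dim (
        customer_sk   INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
        customer_code TEXT NOT NULL UNIQUE,               -- natural key from the source
        name          TEXT NOT NULL
    )
""")
conn.execute(
    "INSERT INTO customer_dim (customer_code, name) VALUES ('C-001', 'Acme')"
)

query = "SELECT customer_sk FROM customer_dim WHERE name = 'Acme'"
sk_before = conn.execute(query).fetchone()[0]

# Simulate a source-system migration that renames the natural key.
conn.execute(
    "UPDATE customer_dim SET customer_code = 'ACME-2024' "
    "WHERE customer_code = 'C-001'"
)
sk_after = conn.execute(query).fetchone()[0]
```

The natural key changed, but `customer_sk` stayed the same, so any fact rows that reference it would remain valid.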


What Is An Analysis Service In Data Modeling

Azure Analysis Services is a Microsoft Azure product used in data modeling. It is a fully managed platform as a service (PaaS) that provides enterprise-grade data models in the cloud. It provides a combined view of the data used in data mining or OLAP. It uses advanced mashup and modeling features to combine data from multiple data sources, define metrics, and secure data in a single, trusted tabular semantic data model. Its biggest advantage is that it gives users an easier and faster way to perform ad hoc data analysis using tools such as Power BI and Excel.

Q21 What Is An Enterprise Data Model

The Enterprise data model comprises all entities required by an enterprise. The development of a common consistent view and understanding of data elements and their relationships across the enterprise is referred to as Enterprise Data Modeling. For better understanding purposes, these data models are split up into subject areas.


15. Discuss hierarchical DBMS. What are the drawbacks of this data model?

A hierarchical DBMS stores data in tree-like structures. The format uses the parent-child relationship where a parent may have many children, but a child can only have one parent.

The drawbacks of this model include:

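- Lack of flexibility: the rigid tree structure is hard to adapt to changing business needs

- Difficulty representing many-to-many relationships, because a child can have only one parent

- Data redundancy and inconsistency, because a record that belongs under several parents has to be duplicated.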
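The single-parent rule can be sketched with a plain Python dictionary that maps each child to its parent. The node names are hypothetical, and this is only an illustration of the structure, not of any particular hierarchical DBMS.

```python
# Each child record points to exactly one parent record.
parent_of = {
    "Sales": "Company",
    "Engineering": "Company",
    "Backend": "Engineering",
}


def path_to_root(node):
    """Walk parent links from a node up to the root of the tree."""
    path = [node]
    while node in parent_of:
        node = parent_of[node]
        path.append(node)
    return path


backend_path = path_to_root("Backend")

# An employee who works in two departments cannot be stored once:
# the second assignment replaces the first, because a child has one parent slot.
parent_of["Alice"] = "Sales"
parent_of["Alice"] = "Backend"
```

To record Alice under both departments, the record would have to be duplicated, which is where the redundancy problem comes from.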

16. Detail two types of data modelling techniques.

Entity-Relationship and Unified Modeling Language are the two standard data modelling techniques.

E-R is used in software engineering to produce data models or diagrams of information systems. UML is a general-purpose language for database development and modelling that helps visualise the system design.


17. What is a junk dimension?

A junk dimension is created by combining low-cardinality attributes, such as flags and indicators, into one dimension. These values are removed from other tables and then grouped (or "junked") into a single abstract dimension table, a technique that is also used when handling rapidly changing dimensions within data warehouses.
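As a rough sketch of how such a table can be built, the snippet below enumerates every combination of a few low-cardinality attributes and assigns each combination one key. The attribute names and values are hypothetical.

```python
from itertools import product

# Hypothetical low-cardinality attributes pulled out of a fact table.
payment_types = ["cash", "card"]
is_gift = [False, True]
is_rush = [False, True]

# One junk-dimension row per combination of attribute values.
junk_dim = [
    {"junk_key": key, "payment_type": p, "is_gift": g, "is_rush": r}
    for key, (p, g, r) in enumerate(
        product(payment_types, is_gift, is_rush), start=1
    )
]

# The fact table then stores a single junk_key instead of three columns.
lookup = {
    (row["payment_type"], row["is_gift"], row["is_rush"]): row["junk_key"]
    for row in junk_dim
}
order_junk_key = lookup[("card", True, False)]
```

With two values per attribute, the junk dimension has only eight rows, and each fact row carries one small foreign key.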

18. State some popular DBMS software.

Best Data Science Certifications To Do In 2022

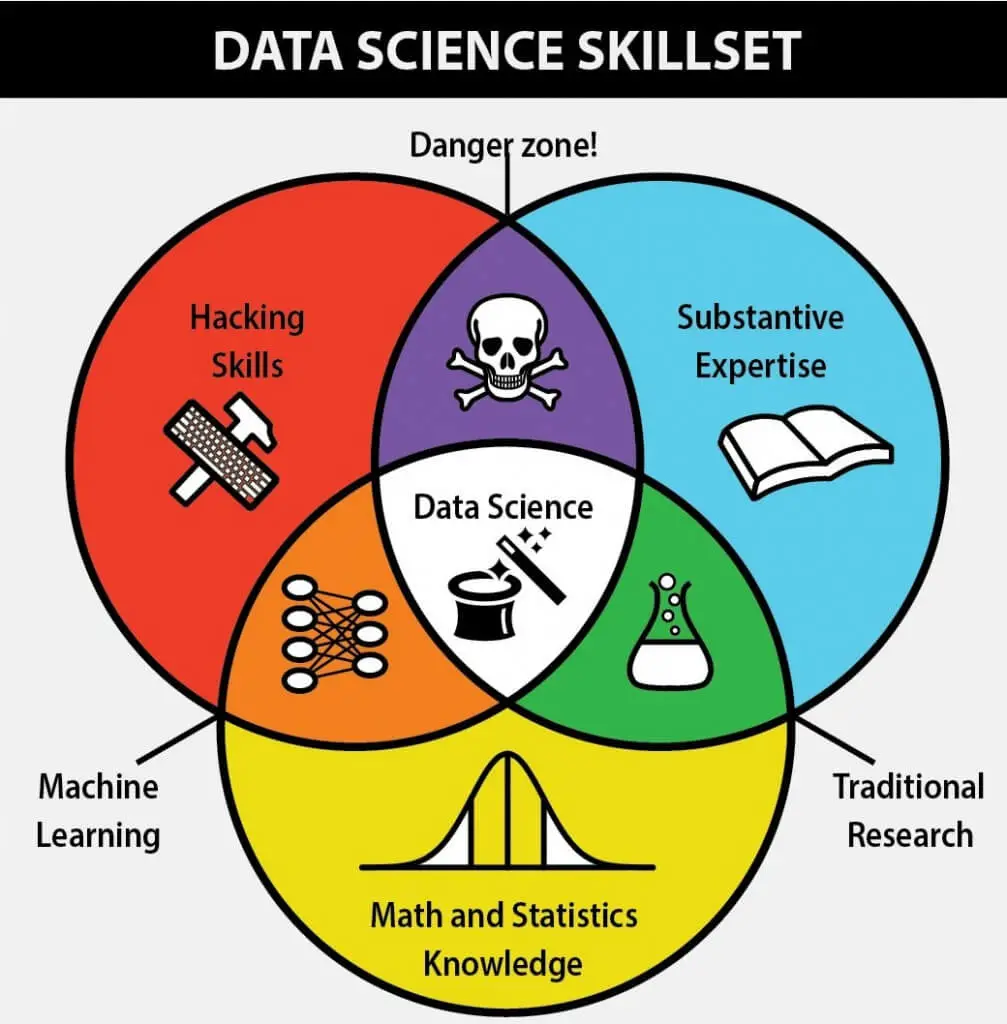

Data scientists are among the most sought-after IT professionals. Data specialists that are able to keep track of the huge volume of data collected by an organization are becoming an increasingly desirable asset for businesses. Certification is something to consider if you are interested in entering this affluent sector or if you want to differentiate yourself from the other candidates in the area.

If you’re looking to get into the data science field, obtaining a Data Science Certification will help you gain in-demand skills as well as establish your expertise to potential employers. Have a look at our rundown of the top Data Science Certifications you can get in 2022.

List Of Best Data Science Certifications

Data Scientist Course

Taking this IBM-sponsored Data Science course is a great way to jump-start your Data Science career while also receiving the top-notch support and learning you need. This course provides in-depth instruction on some of the most in-demand Data Analytics and Machine Learning abilities, as well as hands-on experience with some of the most important tools and techniques, such as Python, Tableau, R, and the fundamental ideas behind machine learning.

Master the intricacies of data analysis and interpretation, Machine Learning, and robust programming abilities to further your Data Science career with this Data Scientist course from Simplilearn.

- Data Science with Python Certification

- Certification Course in Data Science with R

- Data Science Certification


What Does The Complete Compare Feature Do

With the helpful tool Complete Compare, you can identify and resolve any anomalies between two data models or between a data model and a database or script file. To help you determine the variations between the models, database, or script file, the Complete Compare wizard offers a wide range of compare criteria.

What Is Dimensional Modelling

Dimensional modeling is a data structuring technique that optimizes data storage and speeds up data retrieval in the data warehouse. It uses two types of tables: fact tables and dimension tables. Fact tables store transactional data along with foreign keys to the dimension tables that qualify that data.

The purpose of dimensional modeling is to achieve faster data retrieval, not the highest degree of normalization.
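A small star schema makes the split between fact and dimension tables easier to see. The sketch below uses Python's built-in `sqlite3` module; the table names, columns, and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables describe the context of each measurement.
    CREATE TABLE dim_product (
        product_key INTEGER PRIMARY KEY,
        name        TEXT,
        category    TEXT
    );
    CREATE TABLE dim_date (
        date_key INTEGER PRIMARY KEY,
        year     INTEGER,
        month    INTEGER
    );
    -- The fact table holds measures plus foreign keys to the dimensions.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product (product_key),
        date_key    INTEGER REFERENCES dim_date (date_key),
        quantity    INTEGER,
        amount      REAL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
    INSERT INTO fact_sales VALUES
        (1, 20240101, 2, 2000.0),
        (2, 20240101, 1, 300.0),
        (1, 20240201, 1, 1000.0);
""")

# A typical query: aggregate the facts, grouped by a dimension attribute.
sales_by_category = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
```

The query needs only one join per dimension, which is the main reason this layout favors retrieval speed over normalization.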


What Is Amazon Aurora

Amazon Aurora is a high-availability relational database engine with automated failover that is compatible with MySQL and PostgreSQL. To put it another way, an Aurora cluster runs in either a MySQL-compatible or a PostgreSQL-compatible edition rather than being a blend of the two. Aurora is a multi-threaded, multiprocessor database engine that prioritizes performance, availability, and operational efficiency.

Amazon Aurora differs from typical MySQL and Postgres database engines mainly in its storage layer, which is distributed and handles crash recovery. Amazon also supports replicating data between an Amazon Aurora database and a separate MySQL or Postgres database, in either direction, allowing you to use the two databases together.


Q33 What Is The Data Model Metadata

The data about the various objects in a data model is called data model metadata. You can generate a report on the entire data model, on a subject area, or on part of the model. Data modeling tools let you create these reports by selecting the relevant options, and you can create either logical data model metadata or physical data model metadata.


What Does Forward And Reverse Engineering Mean In Terms Of A Data Model

Forward Engineering is the method for generating DDL scripts from the data model. Data modeling tools can connect to different databases and generate DDL scripts. These scripts further help in creating databases.

Reverse engineering is a method that helps to build data models from scripts or databases. Reverse engineering a database into a data model is possible using data modeling tools that enable connections to databases.
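Both directions can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any specific modeling tool works: the `model` dictionary format and the two function names are hypothetical, and SQLite stands in for the target database.

```python
import sqlite3

# A hypothetical, minimal data model: table name -> {column name: column spec}.
model = {
    "customer": {
        "customer_id": "INTEGER PRIMARY KEY",
        "name": "TEXT NOT NULL",
    }
}


def forward_engineer(model):
    """Generate CREATE TABLE statements (DDL) from the model."""
    statements = []
    for table, columns in model.items():
        cols = ", ".join(f"{name} {spec}" for name, spec in columns.items())
        statements.append(f"CREATE TABLE {table} ({cols})")
    return statements


def reverse_engineer(conn):
    """Rebuild a table -> column-names mapping from an existing database."""
    recovered = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    for (table,) in tables:
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
        info = conn.execute(f"PRAGMA table_info({table})").fetchall()
        recovered[table] = [column[1] for column in info]
    return recovered


ddl = forward_engineer(model)
conn = sqlite3.connect(":memory:")
for statement in ddl:
    conn.execute(statement)
recovered = reverse_engineer(conn)
```

Forward engineering turns the model into scripts that create the database; reverse engineering reads the database catalog to recover the tables and columns.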


What Is Metadata What Are Its Different Types

Metadata is data that provides information about other data, but not the content of that data, such as the text of a message or the image itself.

In a database system, metadata shows what type of data is stored. Following is a list of several distinct types of metadata:

Descriptive metadata: Descriptive metadata provides descriptive information about a resource. It is mainly used for discovery and identification. Its main elements include title, abstract, author, and keywords.

Administrative metadata: Administrative metadata is used to provide information to manage a resource, like a resource type, permissions, and when and how it was created.

Structural metadata: The structural metadata specifies data containers and indicates how compound objects are put together. It also describes the types, versions, relationships, and other characteristics of digital materials. For example, how pages are ordered to form chapters.

Reference metadata: The reference metadata provides information about the contents and quality of statistical data.

Statistical metadata: Statistical metadata describes processes that collect, process, or produce statistical data. It is also called process data.

Legal metadata: The legal metadata provides information about the creator, copyright holder, and public licensing.


Can A Company Use A Data Warehouse And A Database At The Same Headquarters Is It Ok To Use One Of Them Or Must Both Be Used Simultaneously

Yes, a company may use a data warehouse and a database at the same workplace. But they do not have to be in the same physical data center. It all depends on the organization’s requirements. Databases are often used to support transactions as they occur, whereas data warehouses are used to support business intelligence.

What Are The Advantages Of Data Model

Advantages of the data model are:

- The main goal of designing a data model is to make sure that data objects offered by the functional team are represented accurately.

- The data model should be detailed enough to be used for building the physical database.

- The information in the data model can be used for defining the relationship between tables, primary and foreign keys, and stored procedures.

- A data model helps businesses communicate within and across organizations.

- A data model helps document data mappings in the ETL process.

- A data model helps identify the correct sources of data to populate the model.


What Is The Purpose Of Data Modeling

The purpose of data modeling is to show the different types of data that are used and stored in the system, their relationships, how they might be grouped and structured, and their formats and attributes. Data modeling aims to provide high-quality, accurate, structured data that helps implement business applications and generate consistent outputs.

What Is A Composite Primary Or Foreign Key Constraint

Like a single-attribute primary key, a composite primary key is the smallest and most discrete information required to uniquely identify a row in an entity. A composite key is different in that it consists of multiple attributes within that entity.

For example, an entity that contains people's first names and last names might require a composite primary key consisting of both first name and last name, as either attribute alone may be insufficient to distinguish two rows.

Like a regular foreign key, a composite foreign key is used to refer from one entity to another. In the case of a composite foreign key, however, it consists of multiple columns or attributes, rather than just one.
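The first-name and last-name example can be sketched with Python's built-in `sqlite3` module. The `person` and `phone` tables and the sample values are hypothetical; note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default
conn.executescript("""
    CREATE TABLE person (
        first_name TEXT NOT NULL,
        last_name  TEXT NOT NULL,
        PRIMARY KEY (first_name, last_name)       -- composite primary key
    );
    CREATE TABLE phone (
        first_name TEXT NOT NULL,
        last_name  TEXT NOT NULL,
        number     TEXT NOT NULL,
        FOREIGN KEY (first_name, last_name)       -- composite foreign key
            REFERENCES person (first_name, last_name)
    );
    -- Same first name twice is fine: only the pair must be unique.
    INSERT INTO person VALUES ('Ada', 'Lovelace'), ('Ada', 'Byron');
    INSERT INTO phone VALUES ('Ada', 'Lovelace', '555-0100');
""")

try:
    conn.execute("INSERT INTO person VALUES ('Ada', 'Lovelace')")
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

try:
    conn.execute("INSERT INTO phone VALUES ('Ada', 'Nobody', '555-0199')")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```

Both constraints operate on the pair of columns: a repeated pair is rejected by the primary key, and a phone row whose pair matches no person is rejected by the foreign key.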


What Is The Importance Of The Third Normal Form

Third normal form (3NF) is used to prevent data duplication and data anomalies. A relation meets 3NF when it is in second normal form and no non-prime attribute has a transitive dependency on a key. Formally, a relation is in 3NF if at least one of the conditions below holds for every non-trivial functional dependency A -> B:

- A is a super key

- B is a prime attribute, that is, every attribute in B is part of some candidate key

For a table to achieve 3NF, it must first be in 1NF and 2NF. Non-key columns should depend only on the key. If a field describes something other than the table's key, it should be moved to another table.

For instance, if a patient's record stores the doctor's phone number alongside the patient's details, it doesn't meet 3NF. The doctor's number belongs in the doctor table, because a doctor can have more than one patient and the number would otherwise be duplicated across patient rows.
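The patient and doctor example can be sketched as a 3NF layout with Python's built-in `sqlite3` module. The table names, columns, and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- The phone number depends on the doctor, so it lives in the doctor table.
    CREATE TABLE doctor (
        doctor_id INTEGER PRIMARY KEY,
        name      TEXT,
        phone     TEXT
    );
    -- Patient rows reference the doctor instead of repeating the number.
    CREATE TABLE patient (
        patient_id INTEGER PRIMARY KEY,
        name       TEXT,
        doctor_id  INTEGER REFERENCES doctor (doctor_id)
    );
    INSERT INTO doctor VALUES (1, 'Dr. Rao', '555-0101');
    INSERT INTO patient VALUES (10, 'Ana', 1), (11, 'Ben', 1);
""")

# The number is stored once, so one UPDATE fixes it for every patient.
conn.execute("UPDATE doctor SET phone = '555-0202' WHERE doctor_id = 1")

patient_phones = conn.execute("""
    SELECT p.name, d.phone
    FROM patient AS p
    JOIN doctor AS d ON d.doctor_id = p.doctor_id
    ORDER BY p.patient_id
""").fetchall()
```

Had the number been stored in each patient row, the same change would have required updating every affected row, with the risk of leaving some of them out of date.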


What Is Data Mart What Are The Key Features Of A Data Mart

A data mart is a subset of a data warehouse. It focuses on a specific part of the business, department, or subject area and provides specific data to a defined group of users within an organization. It suits a specific business area well because users can quickly access important data without wasting time searching through the entire data warehouse. Large organizations often have a data mart for each department in the business, such as finance, sales, or marketing.

Key features of a data mart:

- A data mart mainly focuses on a specific subject matter or area of the business unit.

- It is a subset of a data warehouse and works as a mini-data warehouse that holds aggregated data.

- In a data mart, data is limited in scope.

- It generally uses a star schema or similar structure to hold data. That’s why it is faster to retrieve data from it.
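The "subset holding aggregated data" idea can be sketched as a small table derived from a larger warehouse table. This uses Python's built-in `sqlite3` module; the `warehouse_facts` and `sales_mart` tables and their rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- A hypothetical warehouse table covering several subject areas.
    CREATE TABLE warehouse_facts (
        subject_area TEXT,
        region       TEXT,
        amount       REAL
    );
    INSERT INTO warehouse_facts VALUES
        ('sales',   'EU', 100.0),
        ('sales',   'EU',  50.0),
        ('sales',   'US', 200.0),
        ('finance', 'EU', 999.0);

    -- The data mart: one subject area only, already aggregated.
    CREATE TABLE sales_mart AS
        SELECT region, SUM(amount) AS total_amount
        FROM warehouse_facts
        WHERE subject_area = 'sales'
        GROUP BY region;
""")

mart_rows = conn.execute(
    "SELECT region, total_amount FROM sales_mart ORDER BY region"
).fetchall()
```

The sales team queries `sales_mart` directly and never has to scan or filter the rows that belong to other subject areas.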

How Can You Relate Cap Theorem To Database Design

The CAP theorem says that a distributed system cannot guarantee consistency (C), availability (A), and partition tolerance (P) simultaneously. It can provide at most two of the three guarantees.

- Consistency: The data must remain consistent after an operation executes in the database. For example, after a database update, all queries should retrieve the same result.

- Availability: The database should have no downtime and should always be available and responsive.

- Partition Tolerance: The database system should keep functioning even when communication between its nodes becomes unreliable.

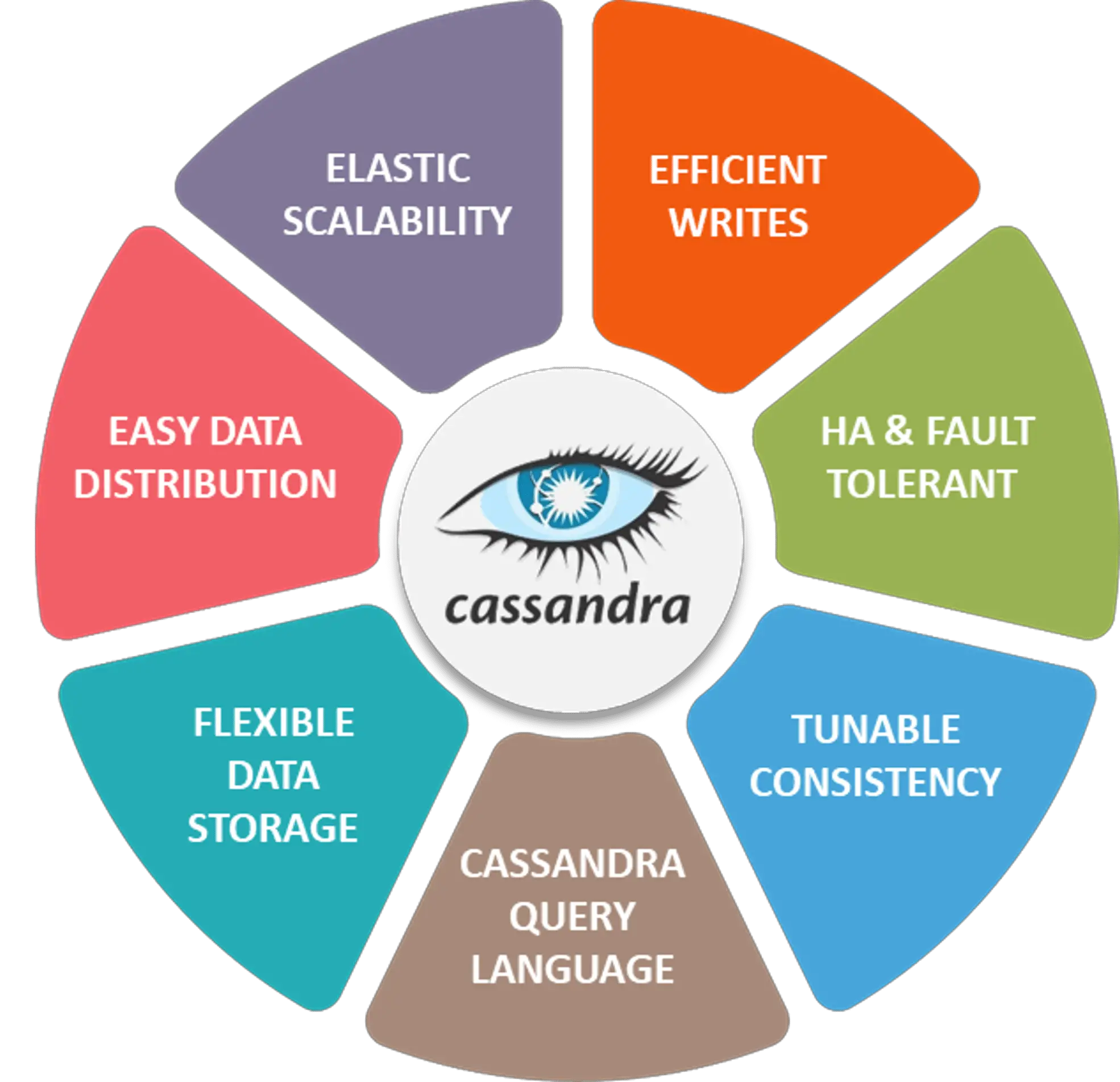

The image above shows which databases are commonly classified as guaranteeing which pairs of CAP properties. Relational databases (RDBMS) guarantee Consistency and Availability. Redis, MongoDB, and HBase guarantee Consistency and Partition Tolerance. Cassandra and CouchDB guarantee Availability and Partition Tolerance.


Explain The Three Different Types Of Data Models

There are three data models:

- Conceptual data model: The conceptual data model contains all key entities and relationships but does not contain any specific details on attributes. It is typically used during the initial planning stage. Data modelers construct a conceptual data model and pass it to the functional team for assessment. Conceptual data modeling refers to the process of creating conceptual data models.

- Physical data model: The physical data model includes all necessary tables, columns, relationship constraints, and database attributes for physical database implementation. A physical model’s key parameters include database performance, indexing approach, and physical storage. The table, which consists of rows and columns and is connected to other tables through relationships, is the principal object in a database. Physical data modeling is the process of creating physical data models.

- Logical data model: A logical data model defines the structure of the data needed to meet an organization’s business requirements. It is built by taking a conceptual data model and extending it with more detail. Entity, Attribute, Super Type, Sub Type, Primary Key, Alternate Key, Inversion Key Entry, Rule, Relationship, Definition, etc., are all present in logical data models. The process of creating logical data models is known as logical data modeling.