What Is Meant By Outliers

In a dataset, an outlier is an observation that lies at an abnormal distance from the other values in a random sample from a population. It is left to the analyst to decide what counts as abnormal, so before you classify data points as abnormal, you must first identify and characterize the normal observations. Outliers may arise from natural variability in the measurements or from experimental error. Identify them before further analysis, and remove or otherwise treat them where they would distort the results.
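One common convention for deciding what counts as "abnormal" is Tukey's 1.5 × IQR rule. The sketch below assumes that rule; the text above does not prescribe it, and the function name `iqr_outliers` and the sample readings are made up for illustration.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    # With n=4, statistics.quantiles returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

readings = [10, 12, 11, 13, 12, 11, 95]  # illustrative sample
print(iqr_outliers(readings))  # [95]
```

The multiplier `k` is the analyst's choice; a larger value flags fewer points.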

What Is Hash Partitioning

In hash partitioning, the Informatica server applies a hash function to the partitioning keys to distribute rows among the partitions. This ensures that all rows with the same partitioning key are processed in the same partition.
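The idea can be sketched in a few lines of Python. This is a generic illustration, not Informatica's implementation: the `hash_partition` helper, the use of CRC32 as the hash function, and the sample rows are all assumptions.

```python
import zlib
from collections import defaultdict

def hash_partition(rows, key, num_partitions):
    """Group rows into partitions by hashing the partitioning key."""
    partitions = defaultdict(list)
    for row in rows:
        # crc32 is used instead of hash() so the result is stable across runs.
        digest = zlib.crc32(str(row[key]).encode("utf-8"))
        partitions[digest % num_partitions].append(row)
    return dict(partitions)

orders = [
    {"customer_id": "C1", "amount": 20},
    {"customer_id": "C2", "amount": 35},
    {"customer_id": "C1", "amount": 15},
]
parts = hash_partition(orders, "customer_id", num_partitions=4)
```

Because the partition number depends only on the key, both `C1` rows are guaranteed to land in the same partition.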


How Can You Deal With Duplicate Data Points In An SQL Query

Interviewers can ask this question to test your SQL knowledge and to see how engaged you are in the interview, since they expect you to ask questions in return. You can ask them what kind of data they are working with and which values are likely to be duplicated.

You can suggest the DISTINCT keyword (some dialects, such as Oracle, also accept UNIQUE as a synonym) to remove duplicate rows from a result. You should also mention other approaches, such as using GROUP BY, to deal with duplicate data points.
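As a minimal sketch of both approaches, the example below runs the queries against an in-memory SQLite database through Python's `sqlite3` module. The `visits` table and its contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (user_id INTEGER, page TEXT)")
conn.executemany(
    "INSERT INTO visits VALUES (?, ?)",
    [(1, "home"), (1, "home"), (2, "pricing"), (1, "home"), (2, "docs")],
)

# DISTINCT collapses identical rows in the result set.
distinct_rows = conn.execute(
    "SELECT DISTINCT user_id, page FROM visits ORDER BY user_id, page"
).fetchall()

# GROUP BY with HAVING shows which rows are duplicated and how often.
duplicates = conn.execute(
    """
    SELECT user_id, page, COUNT(*) AS copies
    FROM visits
    GROUP BY user_id, page
    HAVING COUNT(*) > 1
    """
).fetchall()

print(distinct_rows)
print(duplicates)
```

DISTINCT only hides duplicates in the output, while the GROUP BY query helps you find out where they come from.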


Mention Some Differences Between Substitute And Replace Functions In Excel

The SUBSTITUTE function in Excel finds text that matches a given string and replaces it. The REPLACE function replaces text that you identify by its position in the string.

SUBSTITUTE syntax

=SUBSTITUTE(text, old_text, new_text, [instance_num])

Where

text refers to the text in which you want to perform the replacements

instance_num (optional) specifies which occurrence of old_text to replace; if it is omitted, every occurrence is replaced.

E.g. consider a cell A5 which contains Bond007

=SUBSTITUTE(A5, "0", "1", 1) gives the result Bond107

=SUBSTITUTE(A5, "0", "1", 2) gives the result Bond017

=SUBSTITUTE(A5, "0", "1") gives the result Bond117

REPLACE syntax

=REPLACE(old_text, start_num, num_chars, new_text)

Where start_num – starting position in old_text of the characters to be replaced

num_chars – number of characters to be replaced

E.g. consider a cell A5 which contains Bond007

=REPLACE(A5, 5, 1, "99") gives the result Bond9907
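To make the contrast concrete, here is a rough Python analogue of the two functions. The helpers `substitute` and `replace` are hypothetical and only approximate Excel's behavior: one matches by content, the other by position.

```python
def substitute(text, old_text, new_text, instance_num=None):
    """Rough analogue of SUBSTITUTE: replace by matching text."""
    if instance_num is None:
        return text.replace(old_text, new_text)  # every occurrence
    # Walk forward to the requested occurrence (1-based).
    pos, start = 0, -1
    for _ in range(instance_num):
        start = text.find(old_text, pos)
        if start == -1:
            return text  # fewer occurrences than requested
        pos = start + len(old_text)
    return text[:start] + new_text + text[start + len(old_text):]

def replace(old_text, start_num, num_chars, new_text):
    """Rough analogue of REPLACE: replace by 1-based position and length."""
    begin = start_num - 1
    return old_text[:begin] + new_text + old_text[begin + num_chars:]

print(substitute("Bond007", "0", "1", 1))  # Bond107
print(substitute("Bond007", "0", "1"))     # Bond117
print(replace("Bond007", 5, 1, "99"))      # Bond9907
```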

What Tools Did You Use In A Recent Project

Interviewers want to assess your decision-making skills and knowledge about different tools. Therefore, use this question to explain your rationale for choosing specific tools over others.

- Walk the hiring managers through your thought process, explaining your reasons for considering the particular tool, its benefits, and the drawbacks of other technologies.

- If the company uses tools or techniques you have worked with before, tie your experience to those similarities.


Data Engineer Interview Questions On Python

Python is crucial in implementing data engineering techniques. Pandas, NumPy, NLTK, SciPy, and other Python libraries are well suited to data engineering tasks such as fast data processing and machine learning work. Data engineers primarily focus on data modeling and data processing architecture but also need a fundamental understanding of algorithms and data structures. Take a look at some of the data engineer interview questions based on various Python concepts, including Python libraries such as Pandas, NumPy, and SciPy, algorithms, and data structures.

Point Out A Couple Of Test Cases And Describe Them

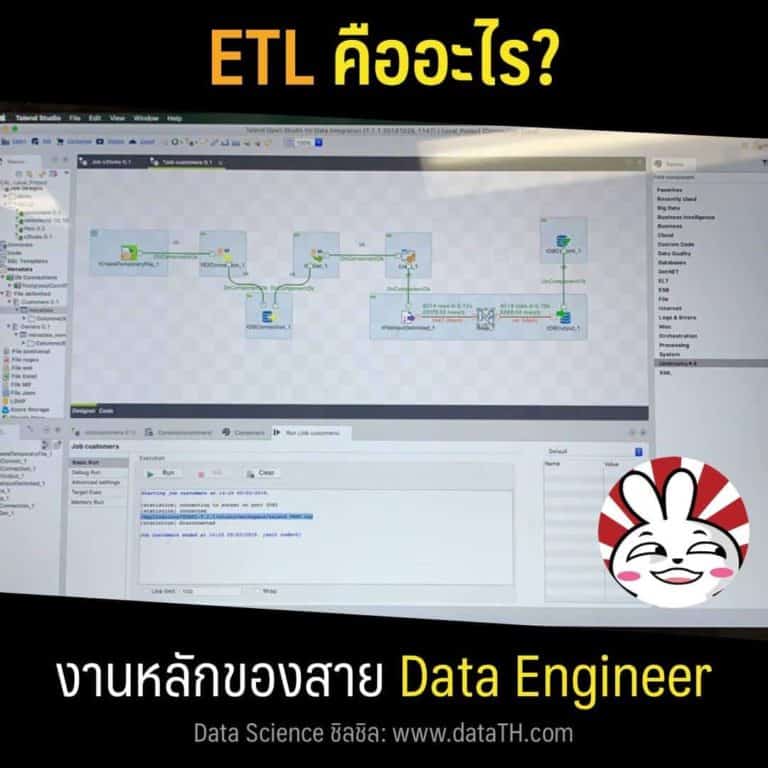

- Mapping Doc Validation: Verifying whether the ETL information is provided in the mapping document.

- Data Check: Every aspect of the data is analyzed, including data checks, number checks, and null checks.

- Correctness Issues: Misspelled data, inaccurate data, and null data are tested.

The interviewer can ask you these ETL Interview Questions to test your knowledge of test cases. These questions are also among ETL interview questions for data engineer professionals.


What Is Executor Memory In Spark

Every Spark application has a fixed heap size and a fixed number of cores for each of its executors. The heap size is controlled by the spark.executor.memory property or the --executor-memory flag, and is also known as the Spark executor memory. Each worker node will typically have one executor per Spark application. The executor memory is a measure of how much of the worker node's memory the application will use.

Explain MapReduce In Hadoop

MapReduce is a programming model and software framework for processing large volumes of data. Map and Reduce are its two phases. First, the map job turns a set of data into another set of data by breaking individual elements down into tuples (key-value pairs). Second, the reduce job takes the output of a map as its input and condenses those tuples into a smaller set. As the name MapReduce suggests, the reduce job always runs after the map job.
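The two phases can be illustrated with a word count written in plain Python. This is only a single-process sketch of the programming model, not Hadoop code; the function names and the input lines are assumptions.

```python
from collections import defaultdict
from functools import reduce

def map_phase(lines):
    """Emit a (word, 1) tuple for every word in the input."""
    return [(word.lower(), 1) for line in lines for word in line.split()]

def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Condense each key's list of values into a single count."""
    return {key: reduce(lambda a, b: a + b, values)
            for key, values in grouped.items()}

lines = ["big data big pipelines", "data pipelines"]
counts = reduce_phase(shuffle(map_phase(lines)))
print(counts)
```

In a real cluster the map and reduce functions run in parallel on different nodes, and the framework handles the shuffle step.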


Responsibilities Of A Data Engineer

The responsibilities include:

- Handle and supervise the data within the company.

- Maintain and handle the data source systems and staging areas.

- Simplify data cleansing, then build and improve data deduplication.

- Provide and execute both data transformation and ETL processes.

- Extract data and build ad-hoc data queries.

Differentiate Between Relational And Non-Relational Database Management Systems

| Relational Database Management Systems | Non-relational Database Management Systems |
| --- | --- |
| Relational databases primarily work with structured data using SQL. SQL works on data arranged in a predefined schema. | Non-relational databases support a dynamic schema for unstructured data. Data can be graph-based, column-oriented, document-oriented, or stored as a key-value store. |
| RDBMS follow the ACID properties: atomicity, consistency, isolation, and durability. | Non-RDBMS are governed by Brewer's CAP theorem: consistency, availability, and partition tolerance. |
| RDBMS are usually vertically scalable. A single server can handle more load by increasing resources such as RAM, CPU, or SSD. | Non-RDBMS are horizontally scalable and can handle more traffic by adding more servers to handle the data. |
| Relational databases are a better option if the data requires multi-row transactions, since relational databases are table-oriented. | Non-relational databases are ideal if you need flexibility in storing the data, since you can create documents without a fixed schema. Because non-RDBMS are horizontally scalable, they suit large or constantly changing datasets. |
| E.g. PostgreSQL, MySQL, Oracle, Microsoft SQL Server. | E.g. Redis, MongoDB, Cassandra, HBase, Neo4j, CouchDB. |


What Is The Difference Between A Data Scientist And A Data Engineer

The main responsibility of a data scientist is to analyze data and produce suggestions for actions to take to improve a business metric, and then monitor the results of implementing those actions.

In contrast, a data engineer is responsible for implementing the data pipeline to gather and transform data for data scientists to analyze. While a data engineer needs to understand the business value of the data being collected and analyzed, their daily tasks will be more oriented around implementing the gathering, filtering, and transformation of data.

In Brief What Is The Difference Between A Data Warehouse And A Database

A data warehouse is geared primarily toward aggregation functions, calculations, and selecting subsets of data for analysis. A database is mainly used for day-to-day data manipulation, such as inserting, updating, and deleting records. Speed and efficiency play a big role when working with either of these.


Q: Querying Data With MongoDB

Let's try to replicate the BoughtItem table first, as you did in SQL. To do this, you must append a new field to a customer. MongoDB's documentation specifies that the $set operator can be used to update a record without having to rewrite all the existing fields:

# Just add "boughtitems" to the customer where the firstname is Bob
bob = customers.update_many({"firstname": "Bob"}, {"$set": {"boughtitems": ...}})

Notice how you added additional fields to the customer without explicitly defining the schema beforehand. Nifty!

In fact, you can update another customer with a slightly altered schema:

amy = customers.update_many(...)
print(type(amy))  # pymongo.results.UpdateResult

Similar to SQL, document-based databases also allow queries and aggregations to be executed. However, the functionality can differ both syntactically and in the underlying execution. In fact, you might have noticed that MongoDB reserves the $ character to specify some command or aggregation on the records, such as $group. You can learn more about this behavior in the official docs.

You can perform queries just like you did in SQL. To start, you can create an index:

Tell Me About Yourself

What they're really asking: What makes you a good fit for this job?

This question is asked so often in interviews that it can seem generic and open-ended, but it's really about your relationship with data engineering. Keep your answer focused on your path to becoming a data engineer. What attracted you to this career or industry? How did you develop your technical skills?

The interviewer might also ask:

- Why did you choose to pursue a career in data engineering?

- Describe your path to becoming a data engineer.


What Is The Use Of Metastore In Hive

The metastore is the storage location for the schemas of Hive tables. It holds metadata such as table definitions and mappings, and it persists this metadata in an RDBMS.

Next up on this compilation of top Data Engineer interview questions, let us check out the advanced set of questions.

What Are The Benefits Of Using AWS Identity And Access Management

- AWS Identity and Access Management (IAM) supports fine-grained access management throughout the AWS infrastructure.

- IAM policies let you control who has access to which services and resources, and under what circumstances. IAM Access Analyzer helps you review and refine those permissions so that your employees and systems have only the least amount of access they need.

- It also provides federated access, enabling you to grant resource access to systems and users without creating IAM users for them.


SQL Interview Questions For Data Engineers

The SQL coding stage is a big part of the data engineering hiring process. You can practice various simple and complex scripts. The interviewer may ask you to write a query for data analytics, common table expressions, ranking, adding subtotals, and temporary functions.

What are Common Table Expressions in SQL?

Common table expressions (CTEs) are named temporary result sets that are used to simplify complex joins and subqueries.

In the SQL script below, we are running a simple subquery to display all students with Science majors and grade A.

SELECT *
FROM class
WHERE id IN (...)

If we use this subquery multiple times, we can define it once as a CTE named temp and then refer to it in our query with a SELECT, as shown below.

WITH temp AS (...)
SELECT *
FROM class
WHERE id IN (SELECT id FROM temp)

You can apply the same pattern to more complex problems.
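A runnable version of the idea, as a minimal sketch using Python's `sqlite3` module. The column names `major` and `grade` and the sample students are assumptions chosen to match the description of students with Science majors and grade A.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE class (id INTEGER, name TEXT, major TEXT, grade TEXT)")
conn.executemany(
    "INSERT INTO class VALUES (?, ?, ?, ?)",
    [(1, "Ana", "Science", "A"), (2, "Ben", "Arts", "A"),
     (3, "Cy", "Science", "B"), (4, "Dee", "Science", "A")],
)

# The CTE names the filtered set once so later parts of the query can reuse it.
cte_query = """
WITH temp AS (
    SELECT id FROM class WHERE major = 'Science' AND grade = 'A'
)
SELECT id, name FROM class WHERE id IN (SELECT id FROM temp) ORDER BY id
"""
science_a = conn.execute(cte_query).fetchall()
print(science_a)
```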

How to rank the data in SQL?

Data engineers commonly rank values based on parameters such as sales and profit.

The query below ranks the data based on sales. You can also use DENSE_RANK, which does not skip subsequent ranks if the values are the same.

SELECT id, sales, RANK() OVER (ORDER BY sales DESC) FROM bill
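The difference between RANK and DENSE_RANK is easiest to see on tied values. The sketch below assumes a `bill` table with made-up rows and a SQLite build with window-function support (version 3.25 or later), accessed through Python's `sqlite3` module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bill (id INTEGER, sales INTEGER)")
conn.executemany(
    "INSERT INTO bill VALUES (?, ?)",
    [(1, 500), (2, 300), (3, 500), (4, 100)],
)

ranked = conn.execute(
    """
    SELECT id, sales,
           RANK() OVER (ORDER BY sales DESC) AS rnk,
           DENSE_RANK() OVER (ORDER BY sales DESC) AS dense_rnk
    FROM bill
    ORDER BY sales DESC, id
    """
).fetchall()

for row in ranked:
    print(row)
```

The two rows tied at 500 both get rank 1. RANK then jumps to 3 for the next row, while DENSE_RANK continues with 2.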

Can you create a simple Temporary Function and use it in SQL query?

Just like in Python, you can create a function in SQL and use it in your query, in dialects that support it, such as BigQuery. It looks elegant, and you can avoid writing huge CASE statements (Better Programming).

CREATE TEMPORARY FUNCTION get_gender(...) AS (...);
SELECT name, get_gender(...) AS gender
FROM class
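SQLite has no CREATE TEMPORARY FUNCTION statement, but Python's `sqlite3` module offers a comparable mechanism: `create_function` registers a function that lives only for the current connection. The sketch below uses it as a stand-in; the `gender_code` column and the code-to-label mapping are assumptions.

```python
import sqlite3

def get_gender(code):
    """Map a one-letter code to a label (hypothetical mapping)."""
    return {"M": "male", "F": "female"}.get(code, "n/a")

conn = sqlite3.connect(":memory:")
# The registered function disappears when this connection is closed.
conn.create_function("get_gender", 1, get_gender)
conn.execute("CREATE TABLE class (name TEXT, gender_code TEXT)")
conn.executemany(
    "INSERT INTO class VALUES (?, ?)",
    [("Ana", "F"), ("Ben", "M"), ("Cy", None)],
)

labelled = conn.execute(
    "SELECT name, get_gender(gender_code) AS gender FROM class ORDER BY name"
).fetchall()
print(labelled)
```

The query stays short because the branching logic lives in the function rather than in a CASE expression.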

What Are Some Of The Issues That Data Mining Can Help With

Data mining can be applied to various sectors and industries, including product and service marketing, artificial intelligence, and government intelligence. The FBI of the United States employs data mining to filter security and intelligence for illicit and incriminating e-information spread across the internet.

The interviewer can ask you these ETL Interview Questions to test your knowledge of Data Mining. These questions are also among ETL interview questions for data engineer professionals.


Briefly Explain ETL Mapping Sheets

ETL mapping sheets usually offer complete details about a source and a destination table, including each column and how to look it up in reference tables. ETL testers may have to write large queries with several joins at any stage of the testing process to check data. With ETL mapping sheets, writing these data verification queries is much easier.

How Can You Prevent Someone From Copying The Data In Your Spreadsheet

In Excel, you can protect a worksheet so that data copied from its cells cannot be pasted elsewhere. To allow copying and pasting from a protected worksheet, you must remove the sheet protection, unlock all cells, and then lock again only those cells that must not be changed or removed. To protect a worksheet, go to Menu -> Review -> Protect Sheet -> Password. With a unique password in place, you can keep others from copying the sheet.


What Are The Various Design Schemas In Data Modeling

There are two fundamental design schemas in data modeling: star schema and snowflake schema.

- Star Schema: The star schema is the most basic type of data warehouse schema. Its structure resembles a star, with a single fact table at the center and several associated dimension tables around it. The star schema is efficient for data modeling tasks such as analyzing large data sets.

- Snowflake Schema: The snowflake schema is an extension of the star schema. In terms of structure, it adds more dimensions and has a snowflake-like appearance. Data is split into additional tables, and the dimension tables are normalized.
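A tiny star schema can be sketched with Python's `sqlite3` module: one fact table in the middle and two dimension tables around it. All table names, column names, and rows here are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_store (store_id INTEGER PRIMARY KEY, city TEXT);
    -- The fact table holds the measures plus a key into each dimension.
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        store_id INTEGER REFERENCES dim_store(store_id),
        amount REAL
    );
    INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
    INSERT INTO dim_store VALUES (10, 'Pune'), (20, 'Delhi');
    INSERT INTO fact_sales VALUES (1, 10, 12.5), (1, 20, 7.5), (2, 10, 30.0);
    """
)

sales_by_category = conn.execute(
    """
    SELECT p.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
    """
).fetchall()
print(sales_by_category)
```

In a snowflake schema, `dim_product` would itself be normalized, for example by moving `category` into its own table referenced by a key.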

What Are The Different Kinds Of Joins In SQL

A JOIN clause combines rows across two or more tables with a related column. The different kinds of joins supported in SQL are:

- (INNER) JOIN: returns only the records that have matching values in both tables.

- LEFT JOIN: returns all records from the left table along with the matching records from the right table; where there is no match, the right-hand columns are NULL.

- RIGHT JOIN: returns all records from the right table along with the matching records from the left table; where there is no match, the left-hand columns are NULL.

- FULL JOIN: returns all records from both tables, matching them up where a match exists in either the left or right table.
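The behavior of unmatched rows can be checked with a small sketch in Python's `sqlite3` module. The `customers` and `orders` tables are hypothetical. Because older SQLite versions lack RIGHT JOIN, the example expresses it as a LEFT JOIN with the table order swapped.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER, name TEXT);
    CREATE TABLE orders (customer_id INTEGER, item TEXT);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben');
    INSERT INTO orders VALUES (1, 'USB'), (3, 'Mouse');
    """
)

# Only customer 1 has a matching order.
inner = conn.execute(
    "SELECT c.name, o.item FROM customers c "
    "JOIN orders o ON o.customer_id = c.id"
).fetchall()

# Every customer is kept; Ben has no order, so item is NULL (None).
left = conn.execute(
    "SELECT c.name, o.item FROM customers c "
    "LEFT JOIN orders o ON o.customer_id = c.id ORDER BY c.id"
).fetchall()

# Equivalent to customers RIGHT JOIN orders: every order is kept.
right_equivalent = conn.execute(
    "SELECT c.name, o.item FROM orders o "
    "LEFT JOIN customers c ON o.customer_id = c.id ORDER BY o.customer_id"
).fetchall()

print(inner, left, right_equivalent, sep="\n")
```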


A Comprehensive Guide On Python Concepts And Questions From Top Companies For All The Budding Data Engineers To Help Them In Preparing For Their Next Interview

Today we are going to cover Python interview questions for a data engineering interview. This article covers the Python concepts and skills needed to crack such an interview. As a data engineer, you need to be really good at SQL as well as Python. This blog covers only Python, but if you are interested in SQL, there is a comprehensive article, Data Engineer Interview Questions.

Python ranks at the top of the PYPL (Popularity of Programming Language) Index, which is based on how often tutorials for various programming languages are searched on Google. Python is also a leading language for data engineering and data science.

Data engineers typically work with various data formats, and Python makes it easier to work with them. Data engineers also need to use APIs to retrieve data from different sources. That data is typically in JSON format, and Python makes it easy to work with JSON as well. Data engineers not only extract data from different sources but are also responsible for processing it. One of the most famous data engines is Apache Spark. If you know Python, you can work with Apache Spark comfortably, since it provides a Python API. Python has become a must-have skill for a data engineer in recent times! Let's see what makes a good data engineer now.

What Data Security Solutions Does Azure SQL DB Provide

In Azure SQL DB, there are several data security options:

- Azure SQL Firewall Rules: Azure offers two levels of firewall rules.

  - Server-level firewall rules are stored in the SQL master database and specify which clients can access the Azure database server.

  - Database-level firewall rules govern access to an individual database.

- Azure SQL Database Auditing: The SQL Database service in Azure offers auditing features. It allows you to define the audit policy at the database server or database level.

- Azure SQL Transparent Data Encryption: TDE performs real-time encryption and decryption of the database, its backups, and its transaction log files.

- Azure SQL Always Encrypted: This feature safeguards sensitive data in the Azure SQL database, such as credit card details.


What Happens When Block Scanner Detects A Corrupted Data Block

This is one of the most typical and popular interview questions for data engineers. Answer it by stating all the steps that follow when the Block Scanner finds a corrupted block of data.

First, the DataNode reports the corrupted block to the NameNode. The NameNode then creates a new replica from an existing healthy replica of the block. The corrupted data block is not deleted until the number of healthy replicas matches the replication factor.

Behavioral Data Engineer Questions

Behavioral data engineer interview questions give the interviewer a chance to see how you have handled unforeseen data engineering issues or teamwork challenges in your experience. The answers you provide should reassure your future employer that you can deal with high-pressure situations and a variety of challenges. Here are a few examples to consider in your preparation.

Data maintenance is one of the routine responsibilities of a data engineer. Describe a time when you encountered an unexpected data maintenance problem that made you search for an out-of-the-box solution.

How to Answer

Usually, data maintenance is scheduled and covers a particular task list. Therefore, when everything is operating according to plan, the tasks don't change very often. However, it's inevitable that an unexpected issue arises every once in a while. As this might cause uncertainty on your end, the hiring manager would like to know how you would deal with such high-pressure situations.

Answer Example

It's true that data maintenance may come off as routine. But, in my opinion, it's always a good idea to closely monitor the specified tasks. And that includes making sure the scripts are executed successfully. Once, while I was conducting an integrity check, I located a corrupt index that could have caused some serious problems in the future. This prompted me to come up with a new maintenance task that prevents corrupt indexes from being added to the company's databases.
