How To Get An Interview

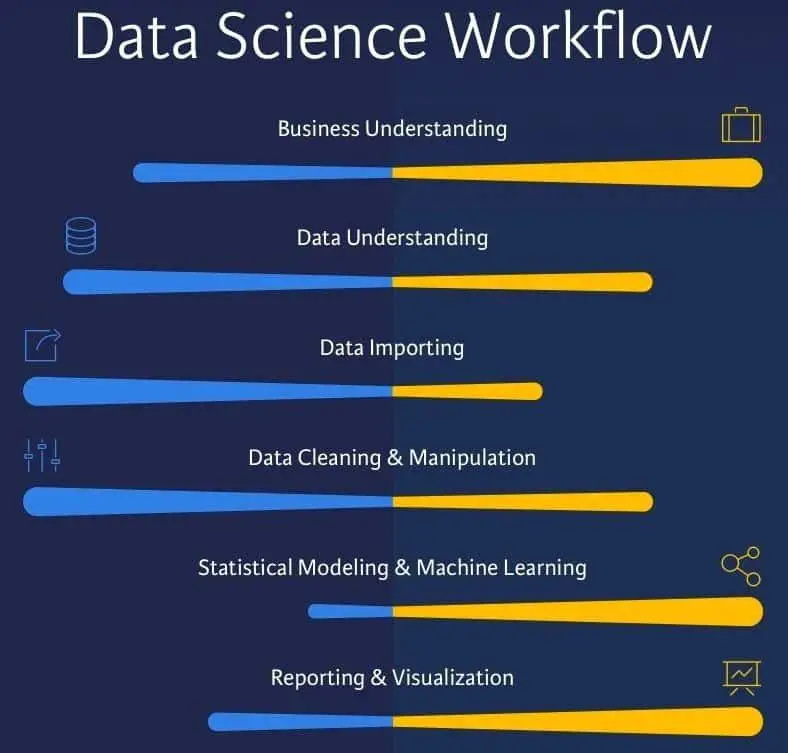

First and foremost, develop the necessary skills and be solid on the fundamentals. These are some of the areas you should be extremely comfortable with:

- Business Understanding

- SQL and Databases

- Programming Skills

- Mathematics

- Machine Learning and Model building

- Data Structures and Algorithms

- Domain Understanding

- Literature Review: Being able to read and understand a new research paper is one of the most essential and demanding skills in the industry today, as a culture of research, development, and innovation grows across most good organizations.

- Communication Skills: Being able to explain your analysis and results to business stakeholders and executives is becoming a really important skill for Data Scientists.

- Some Engineering knowledge: Developing RESTful APIs, writing clean and elegant code, and object-oriented programming are some of the things you can focus on for extra brownie points.

- Big data knowledge: Spark, Hive, Hadoop, Sqoop.

Build A Personal Brand

Python Coding Interview Question #1: Business Name Lengths

The next question is from the City of San Francisco:

Find the number of words in each business name. Avoid counting special symbols as words. Output the business name and its count of words.

When answering the question, you should first find only the distinct businesses using the drop_duplicates function. Then use the replace function to replace all the special symbols with a blank, so you don't count them later. Use the split function to split the text into a list, and then use the len function to count the number of words.
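A minimal pandas sketch of those steps follows. The DataFrame, its business_name column, and the sample rows are hypothetical stand-ins for the question's dataset, and "special symbols" is approximated as anything that is not a letter, digit, or whitespace.

```python
import pandas as pd

# Hypothetical sample rows; the real dataset and its column name are assumptions.
df = pd.DataFrame({
    "business_name": ["Joe's Cafe", "Joe's Cafe", "Taqueria El Farolito", "Pizza & Pasta"],
})


def business_word_counts(df: pd.DataFrame) -> pd.DataFrame:
    # 1. Keep each business only once
    result = df[["business_name"]].drop_duplicates().copy()
    # 2. Replace special symbols (anything not a letter, digit or whitespace) with a blank
    cleaned = result["business_name"].str.replace(r"[^A-Za-z0-9\s]", "", regex=True)
    # 3. Split on whitespace into a list of words, then count the list length
    result["word_count"] = cleaned.str.split().str.len()
    return result


print(business_word_counts(df))
```

Replacing symbols with an empty string rather than a space keeps "Joe's" as one word, while "Pizza & Pasta" still splits into two words because split() ignores repeated whitespace.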

What Exactly Is Google Looking For

At the end of each session, your interviewer will grade your performance using a standardized feedback form that summarizes the attributes Google looks for in a candidate. That form is constantly evolving, but we have listed below the main components we know of at the time of writing this article.

A) Questions asked

In the first section of the form the interviewer fills in the questions they asked you. These questions are then shared with your future interviewers so you don’t get asked the same questions twice.

B) Attribute scoring

In the next section, each interviewer will assess you on the four main attributes Google looks for when hiring.

What Are Recommender Systems

A recommender system predicts what a user would rate a specific product based on their preferences. It can be split into two different areas:

Collaborative Filtering

As an example, Last.fm recommends tracks that other users with similar interests play often. This is also commonly seen on Amazon: after making a purchase, customers may notice the following message accompanied by product recommendations: "Users who bought this also bought".
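A minimal user-based collaborative filtering sketch, assuming a hypothetical user-by-track play-count matrix and using cosine similarity to find the single most similar user. Real systems such as Last.fm's or Amazon's are far more elaborate; this only illustrates recommending what similar users play.

```python
import numpy as np

# Hypothetical play counts: rows are users, columns are tracks.
plays = np.array([
    [5, 3, 0, 0],  # user 0
    [4, 3, 4, 0],  # user 1, similar taste to user 0
    [0, 0, 1, 5],  # user 2
], dtype=float)


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def recommend_for(user: int, plays: np.ndarray) -> list[int]:
    # Find the most similar other user (the "neighbour"); exclude the user themself
    sims = [
        cosine_similarity(plays[user], plays[other]) if other != user else -np.inf
        for other in range(plays.shape[0])
    ]
    neighbour = int(np.argmax(sims))
    # Score only tracks the target user has not played, using the neighbour's play counts
    scores = np.where(plays[user] == 0, plays[neighbour], 0.0)
    # Return unplayed tracks the neighbour listens to, most-played first
    return [int(i) for i in np.argsort(-scores) if scores[i] > 0]


print(recommend_for(0, plays))  # [2]: track 2 is played by the similar user 1
```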

Content-based Filtering

As an example, Pandora uses the properties of a song to recommend music with similar properties. Here, we look at the content itself instead of at who else is listening to the music.
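A minimal content-based sketch: each song is described by a vector of properties, and the recommendation is the song whose properties are closest (by cosine similarity) to a song the user liked. The three attributes and their values are hypothetical; they are not Pandora's actual song features.

```python
import numpy as np

# Hypothetical song attributes: [energy, acousticness, vocal presence], each in [0, 1]
songs = {
    "song_a": np.array([0.9, 0.1, 0.8]),
    "song_b": np.array([0.8, 0.2, 0.7]),
    "song_c": np.array([0.1, 0.9, 0.2]),
}


def most_similar(liked: str, songs: dict[str, np.ndarray]) -> str:
    target = songs[liked]

    def cosine(vec: np.ndarray) -> float:
        return float(target @ vec / (np.linalg.norm(target) * np.linalg.norm(vec)))

    # Compare the liked song's properties with every other song's properties;
    # no information about other listeners is used
    scores = {name: cosine(vec) for name, vec in songs.items() if name != liked}
    return max(scores, key=scores.get)


print(most_similar("song_a", songs))  # song_b
```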

What Is Statistical Power

Statistical power, or sensitivity, of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis when the alternative hypothesis is true.

It can be equivalently thought of as the probability of accepting the alternative hypothesis when it is true, that is, the ability of a test to detect an effect if the effect actually exists.

To put it another way, statistical power is the likelihood that a study will detect an effect when the effect is present. The higher the statistical power, the less likely you are to make a Type II error.
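As a worked example, power can be computed in closed form for a simple case. The sketch below assumes a one-sided, one-sample z-test with known standard deviation, which is only one of many possible tests; it returns power = 1 − β, where β is the probability of a Type II error.

```python
from statistics import NormalDist


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power of a one-sided, one-sample z-test with known standard deviation.

    effect_size is the standardized effect d = (mu_alt - mu_null) / sigma.
    """
    std_normal = NormalDist()                 # N(0, 1)
    z_crit = std_normal.inv_cdf(1 - alpha)    # reject H0 when Z > z_crit
    shift = effect_size * n ** 0.5            # mean of Z when H1 is true
    power = 1 - std_normal.cdf(z_crit - shift)
    return power                              # Type II error rate is 1 - power


for n in (10, 25, 50):
    print(n, round(z_test_power(0.5, n), 3))
```

Power grows with the sample size and the effect size; with no effect at all, the "power" collapses to the Type I error rate alpha.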

A Type I error is the incorrect rejection of a true null hypothesis. Usually a Type I error leads one to conclude that a supposed effect or relationship exists when in fact it doesn't. Examples of Type I errors include a test that shows a patient to have a disease when in fact the patient does not have it, a fire alarm going off when there is no fire, or an experiment indicating that a medical treatment cures a disease when in fact it does not.

A Type II error is the failure to reject a false null hypothesis. Examples of Type II errors would be a blood test failing to detect the disease it was designed to detect in a patient who really has the disease, a fire breaking out without the fire alarm ringing, or a clinical trial of a medical treatment failing to show that the treatment works when it really does.

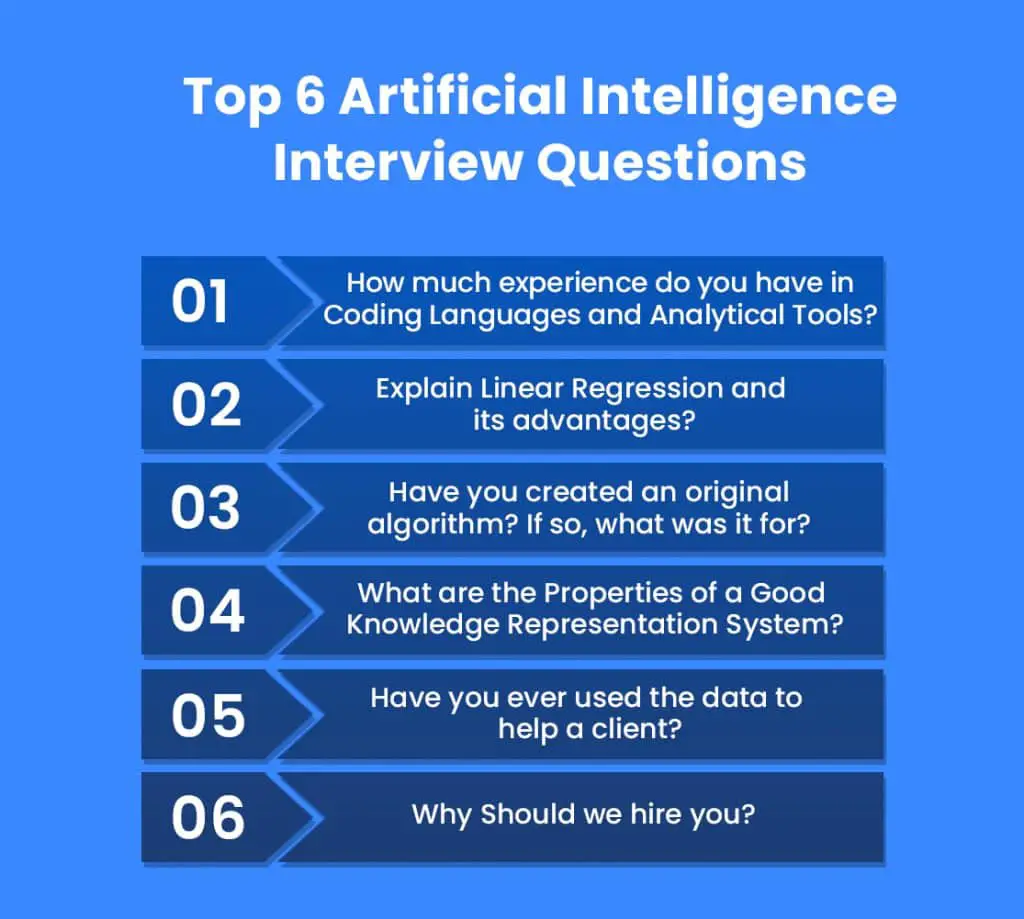

Preparing For A Data Scientist Interview

It's not uncommon for a data scientist applicant to go through three to five interviews for the role. These can include a phone interview, Zoom interview, in-person interview, and panel interview.

As you might expect, many of the interview questions will focus on your hard skills. However, you can also expect questions about your soft skills, as well as behavioral interview questions that assess both your hard and soft skills.

Here's how you can prepare for your data scientist interview.

Research The Position And The Company

If you want to know what may be asked in your data science interview, the best place to start is by researching the role to which you are applying, and the company itself. Check out company websites, social media pages, and reviews, and even speak to people who already work there, if you can. The more you can glean about the work culture, the company's values, and the methods and systems they use, the better you can tailor your answers, and the easier it is to show that you are fully aligned.

How And By What Methods Data Visualisations Can Be Effectively Used

Ans. Data visualisation is very helpful when creating reports. There are quite a few reporting tools available, such as Tableau and QlikView, which use plots, graphs, etc. to represent the overall idea and results of an analysis. Data visualisations are also used in exploratory data analysis to give an overview of the data.

What Is Deep Learning

Deep Learning is one of the essential areas of Data Science. Its algorithms, neural networks, are loosely inspired by the structure of the human brain. In Deep Learning, multiple layers are stacked between the raw input and the output, and each layer extracts higher-level features from the output of the layer before it.
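To make "multiple layers" concrete, here is a tiny two-layer network forward pass in NumPy. The weights are hand-picked for illustration so that the network computes XOR, a function no single linear layer can represent; in real deep learning the weights are learned from data with backpropagation rather than set by hand.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(0, x)


# Layer 1: raw input (x1, x2) -> two hidden features (hand-picked weights)
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])
b1 = np.array([0.0, -1.0])
# Layer 2: hidden features -> single output
W2 = np.array([1.0, -2.0])
b2 = 0.0


def forward(x: np.ndarray) -> np.ndarray:
    h = relu(x @ W1 + b1)   # hidden layer builds intermediate features
    return h @ W2 + b2      # output layer combines them into a higher-level feature


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(forward(X))  # [0. 1. 1. 0.]
```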

Common Situational Data Science Interview Questions

Leadership and communication are two valuable skills for Data Scientists. Employers value job candidates who can show initiative, share their expertise with team members, and communicate data science objectives and strategies.

Here are some examples of leadership and communication data science interview questions:

Question: What do you like about working on a multi-disciplinary team?

Answer: A Data Scientist collaborates with a wide variety of people in technical and non-technical roles. It is not uncommon for a Data Scientist to work with developers, designers, product specialists, data analysts, sales and marketing teams, and top-level executives, not to mention clients.

In your answer to this question, you need to illustrate that you're a team player who relishes the opportunity to meet and collaborate with people across an organization.

Choose an example of a situation where you reported to the highest-level people in a company to show not only that you are comfortable communicating with anyone, but also to show how valuable your data-driven insights have been in the past.

What Are The Major Companies Hiring Data Science Experts In Chicago

The top firms hiring for the job title Data Science Experts are Mattersight, Uptake, and Allstate. Uptake has the highest reported compensation, with an average salary of $100,470. CCC Information Services and Allstate are firms that also pay well for this position. Doing a Data Science certification in Chicago would be a good choice to get a job in this field.

Q24 Name Mutable And Immutable Objects

The mutability of a data structure is the ability to change part of it without having to recreate it. Mutable objects include lists, sets, and dictionaries (a dictionary's values can be changed in place).

Immutability is the state of a data structure that cannot be changed after its creation. Immutable objects include integers, floats, booleans, strings, and tuples. Dictionary keys must be hashable, which in practice usually means immutable.
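A short sketch that demonstrates the difference; the function name and the keys of the returned dictionary are just labels chosen for this example.

```python
def mutability_demo() -> dict:
    results = {}

    # Lists are mutable: append changes the same object, so every alias sees it
    nums = [1, 2, 3]
    alias = nums
    nums.append(4)
    results["list_alias_sees_change"] = alias == [1, 2, 3, 4]

    # Tuples are immutable: item assignment raises TypeError
    point = (1, 2)
    try:
        point[0] = 10
        results["tuple_assignment"] = "allowed"
    except TypeError:
        results["tuple_assignment"] = "TypeError"

    # Strings are immutable: methods return a new string and leave the original alone
    s = "data"
    upper = s.upper()
    results["string_unchanged"] = s == "data" and upper == "DATA"

    # Dictionary keys must be hashable, so a (mutable) list cannot be a key
    d = {}
    try:
        d[[1, 2]] = "value"
        results["list_as_key"] = "allowed"
    except TypeError:
        results["list_as_key"] = "TypeError"

    return results


print(mutability_demo())
```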

Q226 What Is The Difference Between Conditionals And Control Flows

Conditionals are statements that run a block of code only if certain conditions are met. The purpose of conditional flow is to control whether a block of code executes, depending on whether the statement's criteria match. Python also offers a single-line if-else form, the conditional expression (often called the ternary operator), which evaluates to one of two values depending on whether a condition is true or false.

Control flow is the order in which code is executed. In Python, control flow is regulated by conditional statements, loops, and function calls.
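The sketch below puts the pieces side by side: an if/elif/else statement, a one-line conditional expression, and a loop whose body calls functions. The function names are illustrative only.

```python
def classify(n: int) -> str:
    # Conditional statement: decides which block of code runs
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    else:
        return "positive"


def parity(n: int) -> str:
    # Conditional expression ("ternary operator"): a single-line if-else that yields a value
    return "even" if n % 2 == 0 else "odd"


def summarize(values: list) -> list:
    # Control flow: the loop decides how many times the body runs, while the
    # conditionals and function calls decide what happens on each pass
    labels = []
    for v in values:
        if v is None:
            continue  # skip missing values and go to the next iteration
        labels.append((classify(v), parity(v)))
    return labels


print(summarize([-3, 0, None, 4]))
```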

Bayer Data Scientist Interview Questions

I applied online. The process took 2 weeks. I interviewed at Bayer.

Interview

It was a standard interview process with one initial interview with the hiring managers, followed by three additional interviews. The first interview was technical, and the remaining two were behavioral. In all, about 4 hours of interviews. The process was quick and not dragged out over too many weeks.

What Is A Star Schema

It is a traditional database schema with a central fact table. Satellite tables map IDs to physical names or descriptions and can be connected to the central fact table using the ID fields. These tables are known as lookup tables and are principally useful in real-time applications, as they save a lot of memory. Sometimes, star schemas involve several layers of summarization to recover information faster.
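A minimal star schema sketched with Python's built-in sqlite3 module: one central fact table holding IDs and measures, two lookup tables, and a query that joins the fact table to a lookup table on its ID field. All table names, columns, and rows are hypothetical.

```python
import sqlite3


def build_star_schema() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    # Lookup (satellite) tables map IDs to descriptive names
    cur.execute("CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product_name TEXT)")
    cur.execute("CREATE TABLE dim_store (store_id INTEGER PRIMARY KEY, city TEXT)")
    # Central fact table stores only IDs and numeric measures
    cur.execute("""
        CREATE TABLE fact_sales (
            sale_id INTEGER PRIMARY KEY,
            product_id INTEGER REFERENCES dim_product(product_id),
            store_id INTEGER REFERENCES dim_store(store_id),
            amount REAL
        )""")
    cur.executemany("INSERT INTO dim_product VALUES (?, ?)", [(1, "Laptop"), (2, "Phone")])
    cur.executemany("INSERT INTO dim_store VALUES (?, ?)", [(10, "Chicago"), (20, "Boston")])
    cur.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [(100, 1, 10, 1200.0), (101, 2, 10, 800.0), (102, 2, 20, 750.0)],
    )
    conn.commit()
    return conn


def sales_by_city(conn: sqlite3.Connection) -> list:
    # Join the fact table to a lookup table on the ID field to get readable names
    query = """
        SELECT s.city, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_store AS s ON f.store_id = s.store_id
        GROUP BY s.city
        ORDER BY s.city
    """
    return conn.execute(query).fetchall()


print(sales_by_city(build_star_schema()))  # [('Boston', 750.0), ('Chicago', 2000.0)]
```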

Most Common Data Science Interview Questions

While there are no guarantees, here are 10 interview questions you'll likely encounter:

Bellassai also notes that while you should give your best answer to the question, there is no right answer. Remember that there is no perfect solution. A particular approach isn't necessarily the best just because you've used it before.

Data Science Python Interview Questions And Answers

The questions below are based on ProjectPro's Data Science in Python course. There is no guarantee that these questions will be asked in Data Science interviews; their purpose is to make the reader aware of the kind of knowledge an applicant for a Data Scientist position needs to possess.

Data Science interview questions in Python are generally scenario-based or problem-based: candidates are given a dataset and asked to do data munging, data exploration, data visualization, modelling, machine learning, etc. Most of these questions are subjective, and the answers vary based on the given data problem. The interviewer's main aim is to see how you code, what visualizations you can draw from the data, what conclusions you can make from the dataset, etc.

1) How can you build a simple logistic regression model in Python?

2) How can you train and interpret a linear regression model in SciKit learn?

3) Name a few libraries in Python used for Data Analysis and Scientific computations.

NumPy, SciPy, Pandas, scikit-learn, Matplotlib, Seaborn

4) Which library would you prefer for plotting in Python language: Seaborn or Matplotlib?

5) What is the main difference between a Pandas series and a single-column DataFrame in Python?

Scatter_matrix

numpy.loadtxt
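Questions 1 and 2 above can be answered with a short scikit-learn sketch. The toy data is hypothetical and chosen so the results are easy to check: the linear target is exactly y = 2x + 1, and the binary label is 1 whenever x > 3.5.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Hypothetical toy data with a single feature x (scikit-learn expects a 2-D X)
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])

# Question 2: linear regression on noise-free data y = 2x + 1
y_continuous = 2 * X.ravel() + 1
lin_model = LinearRegression().fit(X, y_continuous)
# Interpretation: coef_[0] is the change in y for a one-unit increase in x,
# and intercept_ is the predicted y when x = 0.
print("slope:", lin_model.coef_[0], "intercept:", lin_model.intercept_)

# Question 1: logistic regression on a binary label (1 when x > 3.5)
y_binary = (X.ravel() > 3.5).astype(int)
log_model = LogisticRegression().fit(X, y_binary)
# predict_proba returns [P(class 0), P(class 1)] for each row; keep P(class 1)
probs = log_model.predict_proba(np.array([[1.0], [6.0]]))[:, 1]
# exp(coef_) is the multiplicative change in the odds of class 1 per unit of x
print("P(class 1) at x=1 and x=6:", probs, "odds ratio:", np.exp(log_model.coef_[0, 0]))
```

Note that LogisticRegression applies L2 regularization by default, so its coefficients are shrunk compared with an unpenalized fit.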

Difference Between An Error And A Residual Error

The differences between an error and a residual error are summarized below:

| Error | Residual Error |
| --- | --- |
| An error is the difference between an observed value and the true, unobservable value (for example, the population mean). | A residual error is the difference between an observed value and its estimated value (for example, the sample mean or a model's prediction). |
| An error is generally unobservable. | A residual error can be computed and represented using a graph. |
| An error shows how the actual population data and the observed data differ from each other. | A residual error shows how the sample data and the observed data differ from each other. |
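A small numeric sketch of the distinction, assuming a hypothetical situation in which we pretend to know the population mean (normally we cannot, which is why errors are unobservable). Residuals are measured from the sample mean and therefore always sum to zero; errors have no such constraint.

```python
from statistics import fmean


def errors_and_residuals(sample: list[float], true_mean: float):
    # Error: deviation from the true population value (normally unobservable)
    errors = [x - true_mean for x in sample]
    # Residual: deviation from an estimate computed from the sample itself
    sample_mean = fmean(sample)
    residuals = [x - sample_mean for x in sample]
    return errors, residuals


# Hypothetical example: the population mean is assumed to be 50.
errors, residuals = errors_and_residuals([48.0, 52.0, 56.0], true_mean=50.0)
print(errors)     # [-2.0, 2.0, 6.0]
print(residuals)  # [-4.0, 0.0, 4.0]
```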

Q314 What Is The Difference Between Regplot, Lmplot, And Residplot

regplot plots the data and a linear regression model fit. It will show the best fit line that can be drawn across, given the set of all observations.

lmplot plots the data, and the regression model fits across a FacetGrid. It is more computationally intensive and is intended as a convenient interface to fit regression models across conditional subsets of a dataset. lmplot combines regplot and FacetGrid.

residplot fits a linear regression of Y on X and plots the residuals of that fit, that is, the differences between the observed Y values and the fitted line.
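To see what residplot displays, the underlying computation can be sketched with NumPy alone: fit a least-squares line (the line regplot draws), then subtract the fitted values from the observations. This is an illustration of the idea, not seaborn's internal code, and the observations are hypothetical.

```python
import numpy as np


def linear_fit_residuals(x: np.ndarray, y: np.ndarray):
    # Least-squares line y = slope * x + intercept
    slope, intercept = np.polyfit(x, y, deg=1)
    fitted = slope * x + intercept
    # residplot plots these vertical distances from the line against x
    residuals = y - fitted
    return slope, intercept, residuals


x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])  # hypothetical observations
slope, intercept, residuals = linear_fit_residuals(x, y)
print(round(slope, 2), round(intercept, 2), residuals.round(2))
```

With seaborn installed, a call along the lines of sns.residplot(x=x, y=y) should draw these residuals; check your seaborn version's documentation for the exact parameters.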

Since You Have Experience In The Deep Learning Field, Can You Tell Us Why TensorFlow Is The Most Preferred Library In Deep Learning

TensorFlow is a very well-known library in deep learning, and the reason is fairly simple. It provides both C++ and Python APIs, which makes it easier to work with. TensorFlow also has a fast compilation speed compared with Keras and Torch. Apart from that, TensorFlow supports both GPU and CPU computing devices. Hence, it is a major success and a very popular library for deep learning.

Roles And Responsibilities Of A Machine Learning Engineer

What Are The Techniques Used For Sampling And The Advantages Of Sampling

There are various methods for drawing samples from data.

The two main sampling techniques are:

Probability sampling

Probability sampling means that each individual in the population has a known, non-zero chance of being included in the sample. Probability sampling methods include:

- Simple random sampling

In simple random sampling, each individual in the population has an equal chance of being selected.

- Systematic sampling

Systematic sampling is very similar to simple random sampling. The difference is that instead of randomly generating numbers, every individual in the population is assigned a number, and individuals are chosen at regular intervals.

- Stratified sampling

In stratified sampling, the population is split into sub-populations (strata). It allows you to draw more precise conclusions by ensuring that every sub-population is represented in the sample.

- Cluster sampling

Cluster sampling also involves dividing the population into sub-populations, but each sub-population should have characteristics similar to those of the whole population. Rather than sampling individuals from each sub-population, you randomly select entire sub-populations.
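The four probability sampling methods above can be sketched with Python's random module. The function names and the dict-based representation of strata and clusters are assumptions for illustration; a seeded random.Random instance is passed in so the results are reproducible.

```python
import random


def simple_random_sample(population: list, k: int, rng: random.Random) -> list:
    # Every individual has the same chance of being selected
    return rng.sample(population, k)


def systematic_sample(population: list, k: int, rng: random.Random) -> list:
    # Pick a random starting point, then take every step-th individual
    step = len(population) // k
    start = rng.randrange(step)
    return population[start::step][:k]


def stratified_sample(strata: dict, k_per_stratum: int, rng: random.Random) -> dict:
    # strata maps a stratum label to its members; sample each one separately
    # so every sub-population is represented
    return {label: rng.sample(members, k_per_stratum) for label, members in strata.items()}


def cluster_sample(clusters: dict, n_clusters: int, rng: random.Random) -> list:
    # Randomly choose whole clusters and keep every individual in them
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [person for label in chosen for person in clusters[label]]


rng = random.Random(42)
population = list(range(100))
print(systematic_sample(population, 10, rng))
```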

Non-probability sampling

In non-probability sampling, individuals are selected using non-random methods, and not every individual has a chance of being included in the sample.

- Convenience sampling

- Purposive sampling

- Snowball sampling

Advantages of Sampling